Today, many searches end inside ChatGPT, Perplexity, or Google AI Overviews. Users read the answer, make a decision, and move on, without ever visiting a website. And if your brand isn’t named, it doesn’t matter how good your rankings are.

But keyword rankings, search volume, and traffic data don’t show whether your brand appears in AI-generated answers or how often it’s referenced across platforms. As a result, brands can influence decisions without any obvious signal in analytics.

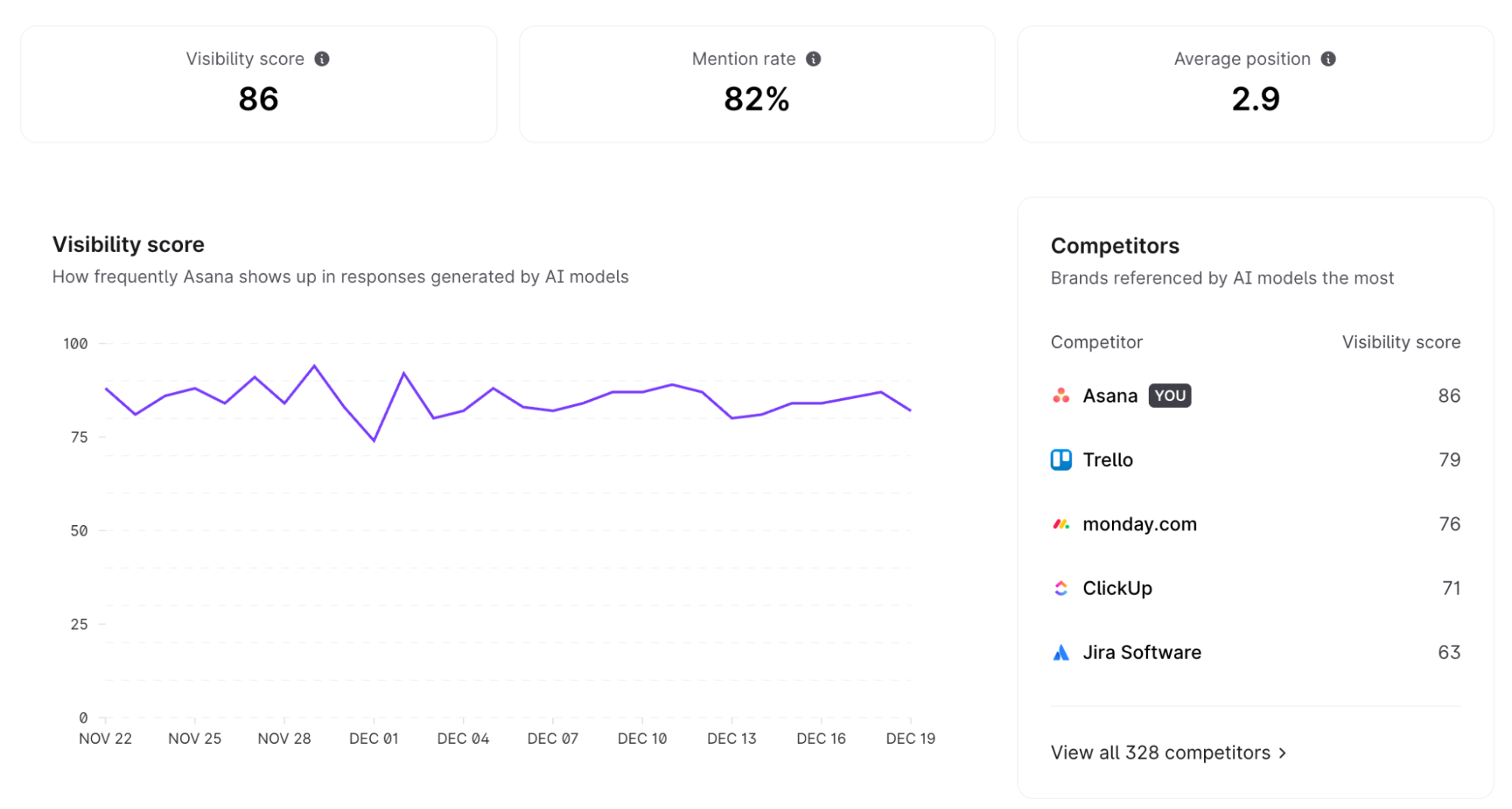

A visibility score captures that missing layer. It tracks how frequently your brand is mentioned across AI-generated responses, alongside traditional search surfaces, giving a clearer picture of real-world discovery.

What you will learn

- What a visibility score means in the context of AI search

- How AI search visibility differs from traditional SEO visibility and keyword rankings

- What a good visibility score looks like today—and how to interpret it correctly

What is a visibility score?

A visibility score shows how often your brand appears in relevant AI-generated answers across prompts, topics, and AI models.

In the context of AI SEO, an AI visibility score measures how visible a brand, website, or product is within AI-generated responses across large language models. It combines how often an entity is mentioned with its average position or prominence in those responses to indicate overall exposure in AI answers.

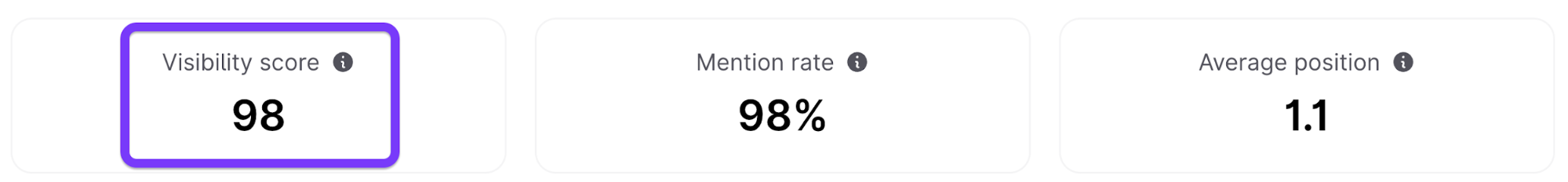

For example, Surfer has a visibility score of 98 for content optimization topics.

The visibility score is a composite metric, while mention rate and average position are individual metrics that feed into it.

Measuring visibility scores is also part of a broader trend toward measuring brand exposure in a zero-click environment. The score helps teams track, compare, and benchmark their presence across different prompts, topics, and AI models over time.

This includes responses generated by platforms like ChatGPT, Perplexity, Google AI Overviews, Gemini, Claude, and Microsoft Copilot.

This is fundamentally different from how traditional SEO defines visibility.

Traditional SEO visibility focuses on:

- Keyword rankings for a target keyword

- Positions in search engine results

- Click-through rates and organic traffic

AI visibility scores focus on something else entirely:

- Whether your brand appears inside AI responses

- How frequently your site or domain is cited as a source

- How AI models perceive your authority on a topic

This creates what many teams now struggle to track: invisible influence.

A user might first discover your brand through an answer in ChatGPT or another LLM. Later, they search for your brand by name, visit your site directly, or convert through another channel.

In analytics, that visit shows up as branded search or direct traffic, not as AI-driven discovery.

Google Analytics doesn’t attribute that journey to AI answers, which makes traditional tracking methods blind to how often LLMs introduce users to your brand in the first place.

In short, search engine visibility tells you where your pages rank. AI search visibility tells you whether your brand is actually being seen and mentioned in the answers users consume today.

How are visibility scores calculated?

A visibility score is calculated by measuring how often your brand appears in relevant AI-generated answers, compared to the total number of answers analyzed for a defined set of queries.

At its simplest, the logic looks like this:

AI answers where your brand is mentioned ÷ total relevant AI answers analyzed.
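Here is a minimal Python sketch of that ratio. It assumes each collected AI answer is stored as plain text and that a brand counts as "mentioned" when its name appears anywhere in the answer (case-insensitive). The `mention_rate` helper and the sample answers are hypothetical. A real tracker would also need to handle aliases, misspellings, and partial matches such as "Surfers".

```python
def mention_rate(answers: list[str], brand: str) -> float:
    """Share of analyzed AI answers that mention `brand` (case-insensitive substring match)."""
    if not answers:
        return 0.0  # no answers analyzed yet, so no visibility to report
    needle = brand.lower()
    mentioned = sum(1 for text in answers if needle in text.lower())
    return mentioned / len(answers)


# Hypothetical answers collected for one set of prompts
answers = [
    "For content optimization, many teams use Surfer or Clearscope.",
    "Popular options include Clearscope and MarketMuse.",
    "Surfer is often recommended for on-page SEO.",
    "Try a keyword research tool first.",
]

print(mention_rate(answers, "Surfer"))  # 2 of 4 answers -> 0.5
```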

The goal isn’t to track rankings or clicks, but to understand how visible your brand is across AI platforms when users ask questions related to your category, product, or expertise.

Unlike traditional SEO, AI search doesn’t offer stable keyword rankings or reliable search volume data. Users phrase questions differently, follow up conversationally, and often get slightly different responses even for the same prompt.

Because of this variability, visibility can’t be measured by individual keyword tracking alone.

Instead, visibility scores rely on representative query sets:

- Hundreds of high-intent questions tied to your audience and use cases

- Queries grouped by topic or intent, not treated as isolated keywords

- Queries run repeatedly across major AI platforms and different AI models

While AI responses may change, patterns become clear when results are aggregated over time. Within each AI response, systems track a small set of key metrics that signal real visibility:

- Whether your brand is mentioned in the answer text

- Whether a page or domain is cited as a source

- How often your brand appears compared to competitors across the same query set

When this data is collected consistently, it becomes possible to calculate share of voice, spot gaps in brand visibility, and see which competitors dominate specific topics in AI search.
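One common way to express share of voice is each brand's portion of all mentions of the brands you track. The sketch below assumes that definition and counts a brand at most once per answer. The brand list and answers are illustrative, not real tracking data.

```python
from collections import Counter


def share_of_voice(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's share of all tracked-brand mentions across the answer set.

    A brand is counted at most once per answer (case-insensitive substring match).
    """
    counts = Counter()
    for text in answers:
        lowered = text.lower()
        for brand in brands:
            if brand.lower() in lowered:
                counts[brand] += 1
    total = sum(counts.values())
    if total == 0:
        return {brand: 0.0 for brand in brands}
    return {brand: counts[brand] / total for brand in brands}


answers = [
    "Surfer and Clearscope are both solid choices.",
    "Clearscope is popular with agencies.",
    "Surfer, Clearscope, and MarketMuse all help with content briefs.",
    "MarketMuse focuses on content planning.",
]

sov = share_of_voice(answers, ["Surfer", "Clearscope", "MarketMuse"])
for brand, share in sov.items():
    print(f"{brand}: {share:.0%}")
```

Because shares are relative to the tracked set, adding or removing a competitor changes everyone's numbers, so keep the competitor list stable when comparing periods.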

This aggregation step matters because AI responses are probabilistic.

Individual answers vary, but repeated sampling smooths out noise and produces stable estimates of visibility. For that reason, visibility scores are directional by design. They’re meant to show trends, relative performance, and competitive position, not precise rankings.
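To make the effect of repeated sampling concrete, this sketch treats each run of a prompt as a yes/no observation (brand mentioned or not) and reports the pooled mention rate with a 95% Wilson score interval. Using a Wilson interval is our assumption for illustration, not a method the tools above necessarily use. The point is that the plausible range narrows as the number of runs grows, even when the observed rate stays the same.

```python
import math


def wilson_interval(mentions: int, runs: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval (95% when z=1.96) for a mention rate estimated from `runs` samples."""
    if runs == 0:
        return (0.0, 1.0)  # no data: the rate could be anything
    p = mentions / runs
    denom = 1 + z**2 / runs
    center = (p + z**2 / (2 * runs)) / denom
    half_width = z * math.sqrt(p * (1 - p) / runs + z**2 / (4 * runs**2)) / denom
    return (max(0.0, center - half_width), min(1.0, center + half_width))


# Same observed rate (40%), different amounts of sampling
for mentions, runs in [(4, 10), (40, 100), (400, 1000)]:
    low, high = wilson_interval(mentions, runs)
    print(f"{runs:>5} runs: {mentions / runs:.0%} mentioned, plausible range {low:.0%}-{high:.0%}")
```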

Tools like Surfer AI Tracker apply this sampling approach at scale.

By monitoring consistent query sets across AI platforms, Surfer can track how visibility changes over time, which prompts surface your brand, and where competitors gain or lose ground.

The focus here is on measuring presence, not predicting traffic.

That distinction is important. AI-driven discovery often doesn’t show up cleanly in analytics, but visibility scores still capture whether your brand is part of the answers users actually consume.

In practice, that makes the visibility score a leading indicator, reflecting how AI models perceive your brand before changes appear in traffic or conversions.

What is a good search visibility score?

There isn’t a single number that defines a good visibility score (at least not yet).

Unlike traditional SEO visibility scores, AI visibility metrics are still emerging. In classic SEO, a 40% visibility score might have a fairly established meaning based on specific keyword rankings, search volume, and search engine results.

But in AI search, that kind of universal benchmark doesn’t exist. A good AI visibility score is relative because it depends on:

- The specific AI platforms and AI models you’re tracking

- The keyword groups or topics you are tracking

- How visible your competitors are for the same prompts

For example, appearing in 30% of relevant AI-generated responses may not sound impressive on its own. But if your closest competitors only appear in 10% of those same answers, your brand visibility is strong within that context.

Visibility, here, is about comparative presence, not absolute dominance across search.

It’s also important to recognize that visibility isn’t evenly distributed. A brand might show up in 70–80% of AI answers for one cluster of queries, while appearing in just 5–10% for another.

That’s why tracking the right prompts matters more than chasing a single aggregate score. A high visibility score tied to irrelevant queries doesn’t translate into meaningful business impact.
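A per-cluster breakdown shows how much an aggregate score can hide. This sketch assumes each tracked result is stored as a `(cluster, mentioned)` pair. The cluster names and counts are invented to mirror the 70–80% vs. 5–10% split described above.

```python
from collections import defaultdict


def visibility_by_cluster(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Mention rate per query cluster from (cluster, brand_mentioned) observations."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for cluster, mentioned in results:
        totals[cluster] += 1
        if mentioned:
            hits[cluster] += 1
    return {cluster: hits[cluster] / totals[cluster] for cluster in totals}


# Hypothetical results: strong on one cluster, weak on another
results = (
    [("content optimization", True)] * 15 + [("content optimization", False)] * 5
    + [("technical SEO audits", True)] * 1 + [("technical SEO audits", False)] * 19
)

# A 40% aggregate hides a 75% vs. 5% split between clusters
overall = sum(mentioned for _, mentioned in results) / len(results)
print(f"overall: {overall:.0%}")
for cluster, rate in visibility_by_cluster(results).items():
    print(f"{cluster}: {rate:.0%}")
```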

Another factor shaping what “good” looks like is concentration.

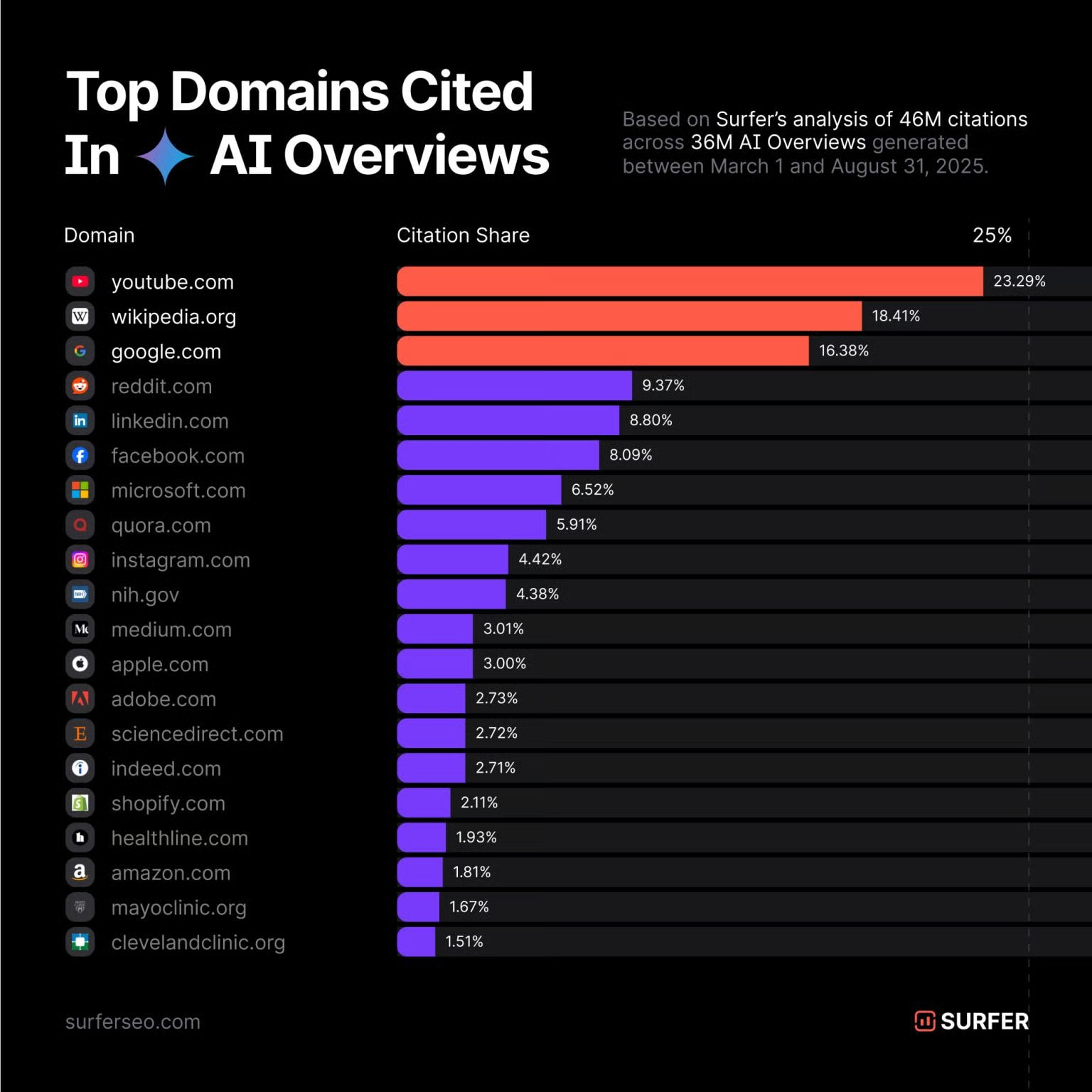

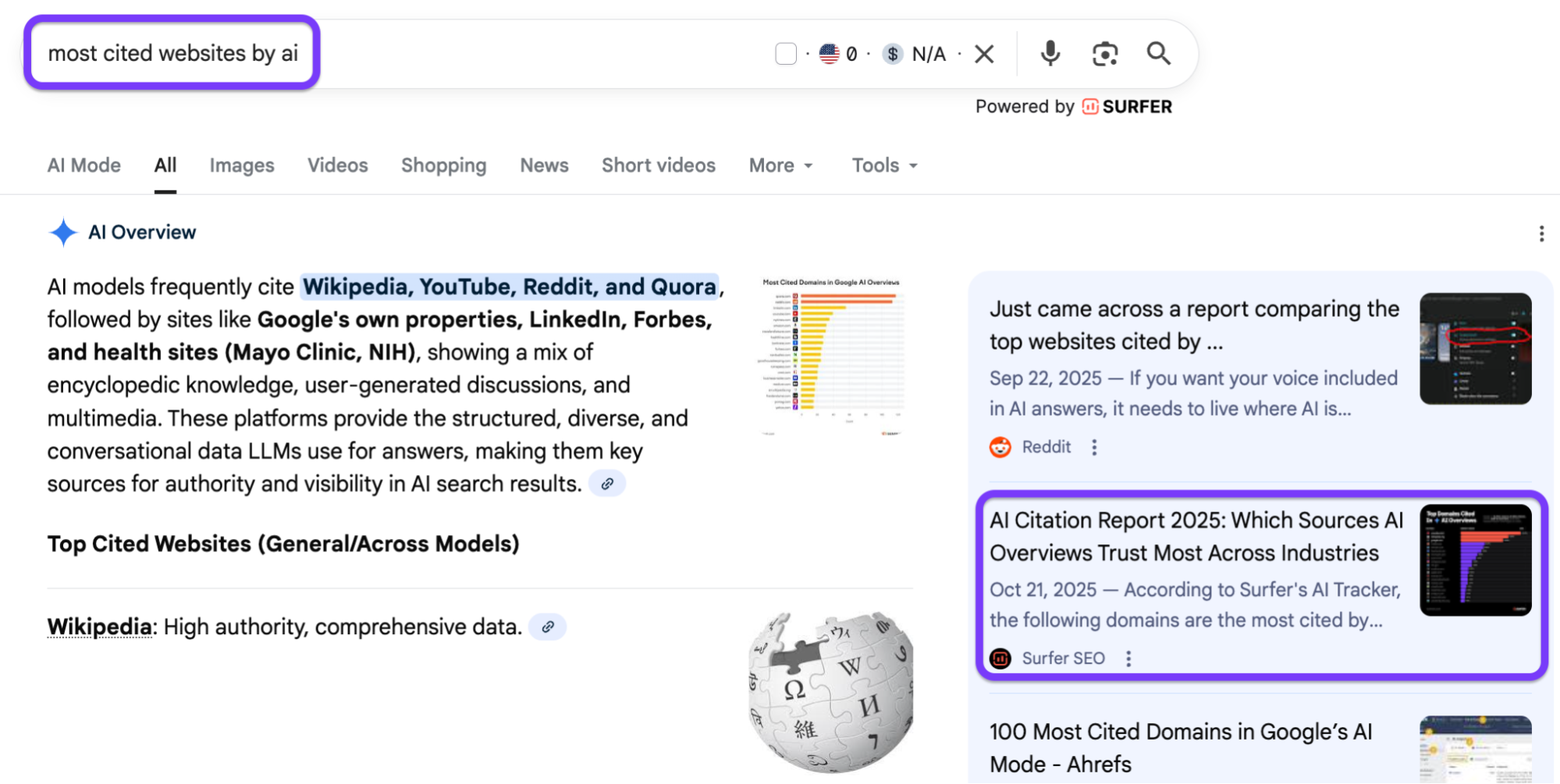

Research from Statista shows that a relatively small group of domains captures a disproportionate share of AI citations.

In many categories, nearly one-third of all citations come from the same limited set of sources—often large, authoritative sites like Wikipedia, Reddit, or major publishers like Healthline.

Competing in this environment means you’re not just trying to be visible, but trying to break into a very tight rotation of trusted sources.

There’s also a disconnect between AI visibility and traditional rankings.

Only 12% of URLs cited by ChatGPT, Perplexity, and Copilot rank in Google's top 10, while 80% of LLM citations don't rank in Google's top 100.

That means a brand can have modest search engine visibility and still perform well in AI-generated answers, or rank well in search while barely being mentioned by AI models.

Because of this, the most useful way to judge whether your visibility score is “good” is to look at trends and comparisons over time:

- Is your share of voice increasing relative to competitors?

- Are you being mentioned more frequently across different AI models?

- Do you see growth in branded search or direct traffic as your AI visibility improves?

Visibility scores are especially valuable as a leading indicator.

They often move before changes show up in traffic, conversions, or revenue. Teams that start tracking early can establish baselines, identify gaps, and adapt faster as AI citation patterns become more entrenched.

In short, a good visibility score isn’t a fixed target. It’s a measure of whether your brand is consistently visible, competitively positioned, and increasingly present in the AI responses your audience is actually consuming.

6 strategies to increase your visibility score across AI search

Increasing your visibility score is all about making your content easier for AI systems to extract, understand, and reuse when generating answers.

Here are the strategies that have helped increase the visibility of my own content, along with patterns I’ve observed across major AI platforms and models.

Each tactic is practical, repeatable, tested on my own content, and directly tied to how brands get cited and mentioned by large language models.

1. Optimize content structure for AI extractability

Large language models scan pages for clear, well-structured answer blocks they can lift and reuse. If your content is difficult to parse or loosely organized, it’s far less likely to be cited, even if the information itself is accurate.

This is largely a foundational SEO best practice, but it has a much bigger impact in AI search because structure directly affects whether your content can appear in AI-generated answers.

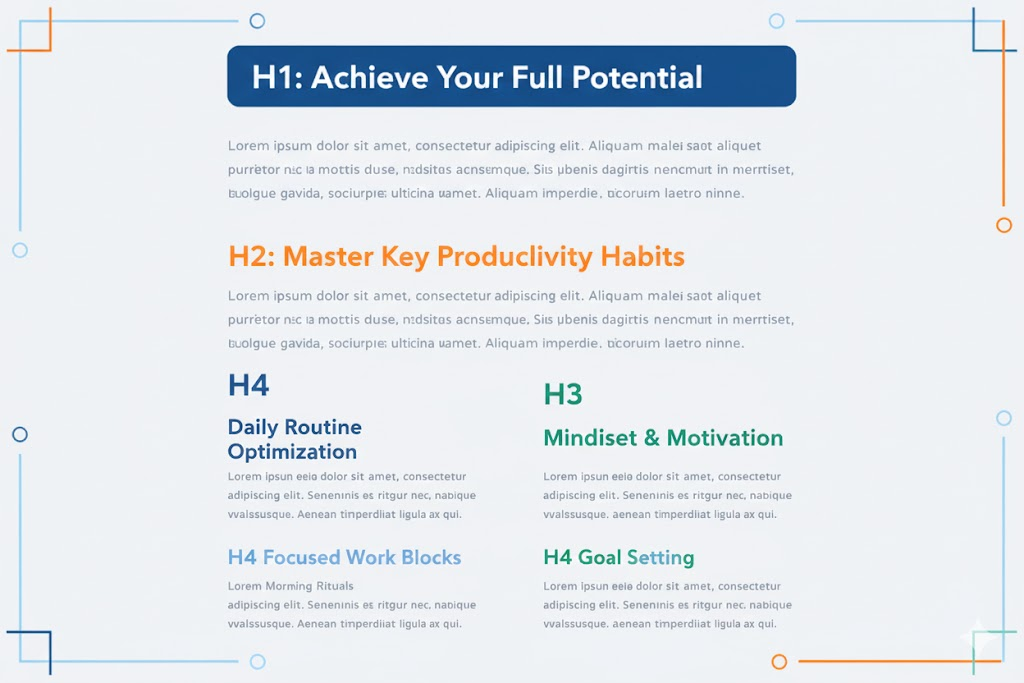

Start with a clear hierarchy:

- One H1 that states the primary promise of the page

- H2s that break the topic into major ideas or questions

- H3s that support each idea with focused explanations, comparisons, or examples
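To check this hierarchy on existing pages, here is a small sketch built on Python's standard `html.parser` module. It assumes you have the page HTML as a string. The two rules it enforces (exactly one H1, no skipped heading levels) are our simplification of the guidance above, not a formal standard.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag names, so "H2" arrives as "h2"
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    """Flag hierarchy problems: an H1 count other than one, or skipped levels (e.g. H2 -> H4)."""
    parser = HeadingCollector()
    parser.feed(html)
    issues = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        issues.append(f"expected exactly one <h1>, found {h1_count}")
    for prev, curr in zip(parser.levels, parser.levels[1:]):
        if curr > prev + 1:
            issues.append(f"heading jumps from h{prev} to h{curr}")
    return issues


page = """
<h1>What is a visibility score?</h1>
<h2>How are visibility scores calculated?</h2>
<h3>Query sets</h3>
<h2>What is a good visibility score?</h2>
<h4>Benchmarks</h4>
"""
print(heading_issues(page))  # ['heading jumps from h2 to h4']
```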


Within that structure, use content chunking deliberately. Break information into self-contained sections where each chunk:

- Answers one specific question

- Can stand on its own without surrounding context

- Is clearly tied to a heading that explains what the chunk covers

AI models prefer extracting complete, coherent chunks rather than stitching together sentences from different parts of a page. According to research from NVIDIA, page-level chunking provides the highest average accuracy for AI retrieval systems.

This means well-chunked content makes it easier for AI systems to reuse your answers accurately and consistently.
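One way to review your own chunking is to split a page into heading-scoped chunks and read each one in isolation. This sketch assumes the page is written in Markdown and splits on H2/H3 headings. Text above the first H2 is ignored. Real AI systems use their own chunking strategies (including the page-level approach mentioned above), so treat this as a self-review aid rather than a model of any specific system.

```python
import re

HEADING = re.compile(r"^(#{2,3})\s+(.*)$")  # H2 and H3 in Markdown syntax


def chunk_by_heading(markdown: str) -> list[dict[str, str]]:
    """Split a Markdown page into heading-scoped chunks that can be read on their own."""
    chunks: list[dict[str, str]] = []
    current = None
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            current = {"heading": match.group(2).strip(), "body": ""}
            chunks.append(current)
        elif current is not None and line.strip():
            # Join non-empty lines under the most recent heading
            current["body"] = (current["body"] + " " + line.strip()).strip()
    return chunks


page = """# AI visibility guide
Intro text that sits above the first section.

## What is a visibility score?
A visibility score shows how often your brand appears in AI answers.

## How is it calculated?
Mentions divided by total answers analyzed.
It is tracked over time.
"""

for chunk in chunk_by_heading(page):
    print(chunk["heading"], "->", chunk["body"])
```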

Each chunk should follow an answer-first approach:

- Lead with a short, direct response (roughly 30–50 words)

- Expand with context, supporting data, or examples

This format works well for both AI extractability and human readability.
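A quick way to audit the answer-first pattern is to count the words in each section's opening paragraph. This sketch assumes paragraphs are separated by blank lines and uses the 30–50 word range above as its default. The helper names and sample text are hypothetical.

```python
def opening_word_count(section_text: str) -> int:
    """Word count of the first paragraph (text before the first blank line)."""
    first_paragraph = section_text.strip().split("\n\n")[0]
    return len(first_paragraph.split())


def is_answer_first(section_text: str, low: int = 30, high: int = 50) -> bool:
    """True if the opening paragraph falls within the target word range."""
    return low <= opening_word_count(section_text) <= high


section = (
    "A visibility score shows how often your brand appears in relevant AI-generated "
    "answers across a defined set of prompts, topics, and AI models. It is a directional "
    "metric, best read as a trend over time rather than a precise ranking.\n\n"
    "Supporting context, data, and examples follow in the rest of the section."
)

print(opening_word_count(section), is_answer_first(section))
```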

Question-based headings also help reinforce chunking. Turning headings into real questions users would ask, rather than generic labels, aligns each content block with how people phrase prompts in AI search tools.

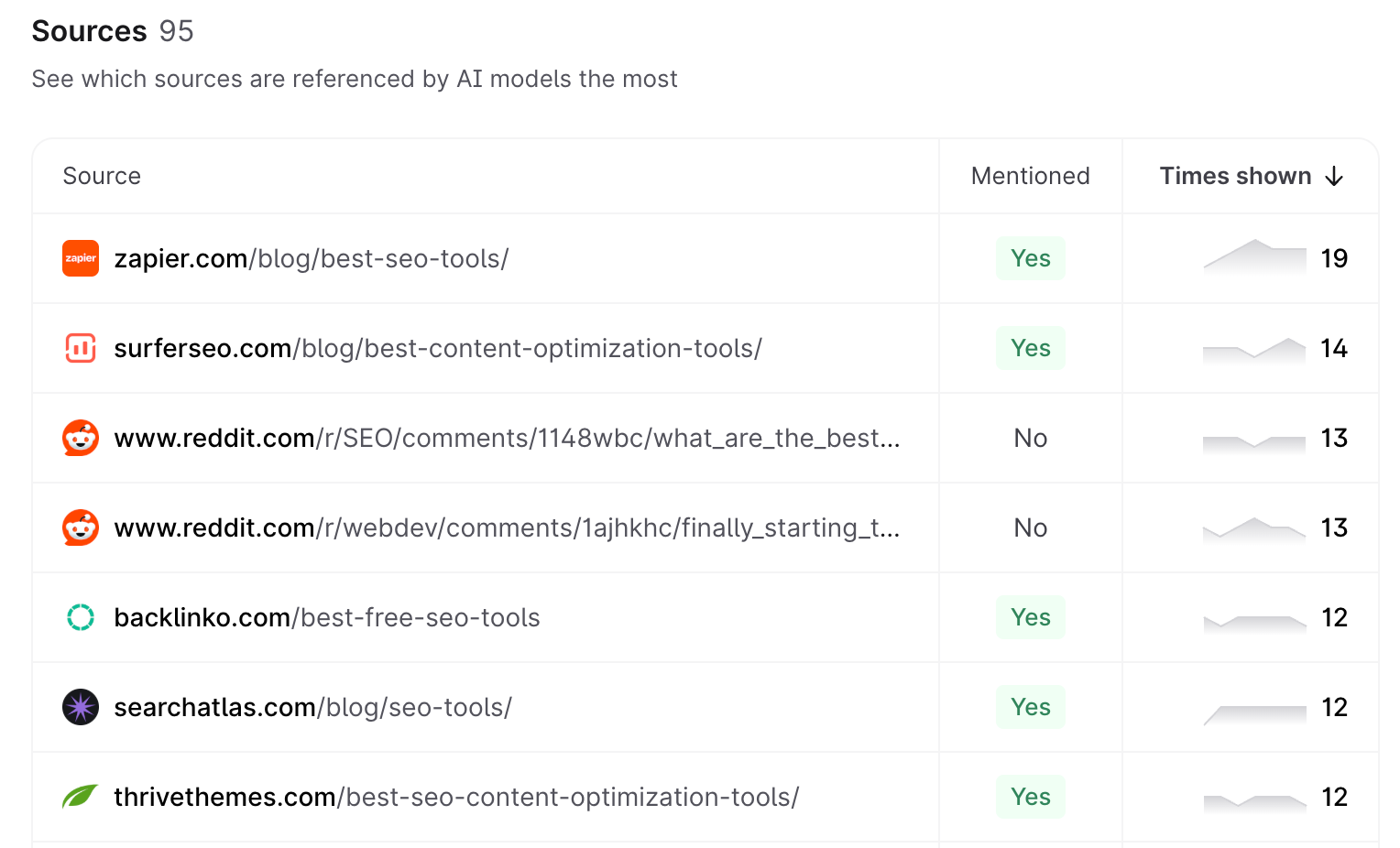

Listicles and comparison-style pages are also being cited frequently right now, especially “best tools” content.

However, they only perform well when they’re balanced and credible. AI platforms tend to favor sources that:

- Acknowledge limitations

- Provide fair comparisons

- Include real pricing and trade-offs

Overly promotional or one-sided listicles are less likely to earn consistent mentions.

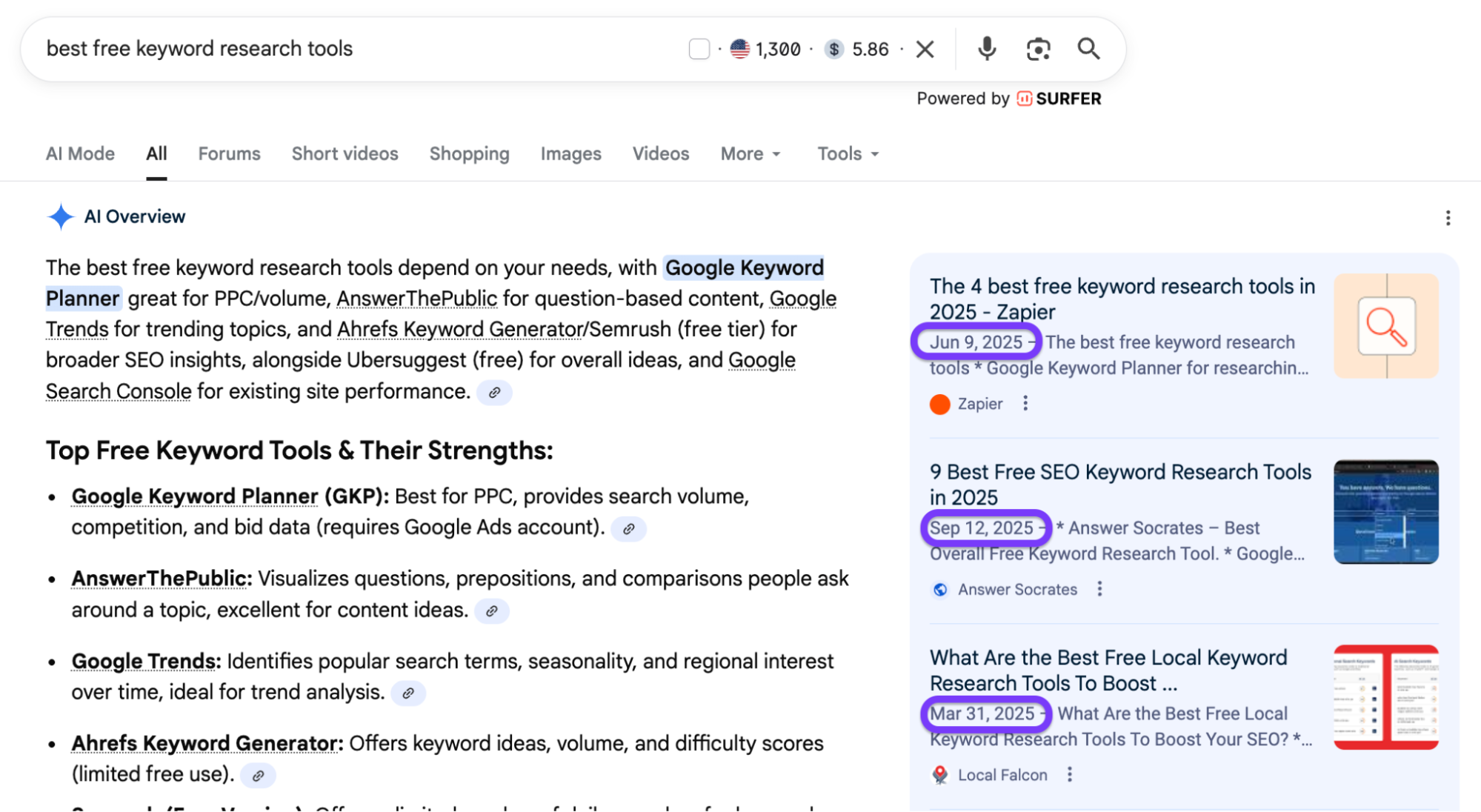

2. Keep content fresh with regular updates

Multiple studies show that recent content dominates AI citations.

Pages updated within the last 2–3 months appear far more often in AI answers, while outdated content is frequently skipped, even when it still ranks in traditional search results. This pattern is strongest in Google AI Overviews and Perplexity, where recency is a clear filtering signal.

ChatGPT behaves a little differently. Data shows that around 29% of ChatGPT’s citations come from content published in 2022 or earlier. That doesn’t necessarily mean ChatGPT prefers outdated information.

Many of those older citations likely come from sources ChatGPT already trusts, such as Wikipedia or long-established publishers. The study doesn’t distinguish clearly between content age and source preference.

For most brand-owned websites, that distinction matters.

Brands don’t benefit from the same built-in trust as Wikipedia or major reference sites. As a result, freshness becomes a much stronger signal for whether content gets reused in AI answers.

The takeaway isn’t to publish constantly. What works better is a minimum viable refresh approach that signals relevance without rewriting everything.

In practice, effective content refreshes usually include:

- Adding 2–3 new statistics or data points

- Including a recent case study or real example

- Updating the Last Modified date clearly on the page

- Adding one new FAQ section based on current questions

- Updating the title to include something like “Updated March 2025”

AI systems don’t need the whole page to change. Updating a few key chunks is often enough to improve how that content gets reused in AI responses.

Refresh timing also matters. A simple rule of thumb works well:

- Review important content quarterly in fast-moving fields like AI, SaaS, or marketing

- Review twice a year for slower-changing industries
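If you manage many pages, the rule of thumb above can be turned into a simple review queue. This sketch approximates "quarterly" as 90 days and "twice a year" as 182 days, which is our assumption. The URLs and dates are hypothetical.

```python
from datetime import date, timedelta

# Review intervals from the rule of thumb above, approximated in days (assumption)
REVIEW_INTERVAL_DAYS = {"fast": 90, "slow": 182}


def next_review(last_updated: date, pace: str) -> date:
    """Date a page is due for review, given when it was last updated and its industry pace."""
    return last_updated + timedelta(days=REVIEW_INTERVAL_DAYS[pace])


def overdue_pages(pages: dict[str, tuple[date, str]], today: date) -> list[str]:
    """URLs whose review date has already passed, oldest due date first."""
    due = [(next_review(updated, pace), url) for url, (updated, pace) in pages.items()]
    return [url for review_date, url in sorted(due) if review_date < today]


pages = {
    "/blog/ai-visibility-score": (date(2025, 1, 10), "fast"),
    "/blog/seo-basics": (date(2024, 11, 1), "slow"),
    "/blog/llm-seeding": (date(2024, 12, 1), "fast"),
}
print(overdue_pages(pages, today=date(2025, 4, 15)))
```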

In AI search, freshness isn’t about novelty. It’s about showing that your content still reflects how things work right now, and that directly affects whether AI systems choose to surface it.

3. Build brand visibility across third-party sources

For AI visibility, what others say about your brand matters more than what you say on your own site.

LLMs don’t rely on a single source when generating answers. They compare information across many domains and give more weight to brands that show up consistently across trusted third-party sites.

Research into LLM tracking shows that models strongly favor multi-source reinforcement over single-source references.

One brand page ranking well is rarely enough. Repeated mentions across different domains carry far more weight.

This is why third-party citations and brand mentions play such a big role in whether your brand appears in AI-generated answers. LLMs treat them as confirmation signals.

The more often your brand is mentioned across credible sources, the more confident AI systems become in surfacing it.

Our AI citation report also found that the types of sources LLMs cite are usually consistent:

- Wikipedia

- Reddit threads with real user discussion

- Review websites

- “Best-of” and comparison guides

- Industry publications and news outlets

If your brand isn’t present in these places, AI has far fewer inputs to work with.

And this is exactly what LLM seeding is about. Instead of trying to influence AI directly, you focus on getting your brand mentioned in articles and pages that AI systems already trust and cite.

Here’s an example:

Search Engine Land, one of the most authoritative publications in SEO, published “8 Must-Have SEO Tools Every Marketer Should Use in 2025.” In this article, Surfer is mentioned alongside other leading SEO tools.

Because Search Engine Land is a source LLMs already rely on, that single mention does more than just reach readers. When users ask AI platforms questions like:

- “Best SEO tools”

- “SEO tools for content optimization”

- “Surfer alternatives”

AI systems often pull from the same trusted comparison articles. One placement creates visibility across dozens of related query variations, without needing to rank for each one individually.

Here’s how you can implement LLM seeding, or third-party brand mentions, for your website:

- Identify pages and domains already being cited by LLMs in your category using tracking tools

- Focus on reviews or guides that actively compare products like yours

- Reach out to authors with real value — original data, expert insights, or concrete use cases

- Make sure mentions clearly reinforce entity association (brand name, category, and use case)

When done well, one strong third-party mention can outperform dozens of self-published blog posts. AI systems learn from patterns. Repeated mentions across trusted sources create those patterns.

If your brand only exists on your own site, AI has very little reason to surface it.

4. Create citation-ready content with data and statistics

Content that includes specific data points shows up far more often in AI-generated answers.

Our own in-house analysis on AI search behavior shows that pages with clear facts, statistics, and measurable data are 30–40% more likely to be referenced in LLM responses compared to content that relies on general explanations alone.

This pattern holds across major AI platforms, regardless of industry.

This is because LLMs work best when they can quote or reference something concrete. It’s easier, and safer, for an AI model to reuse a number, a finding, or a study than to generate an abstract explanation from scratch.

This is why content that feels “boring” to humans often performs better in AI answers.

Citation-ready content has a few clear characteristics:

- Data shows up early: Put facts, statistics, and research near the top of each section, clearly stated and easy to extract. Don’t bury them mid-paragraph.

- Each paragraph stands alone: Write in self-contained blocks (around 60–100 words) that still make sense when read on their own.

- Context-independent language: Avoid references like “as mentioned above” or “later in this section.” AI often pulls content in isolation.

- Trusted sources are clearly referenced: Mention or link to credible sources such as Harvard Business Review, Pew Research, government data, or well-known industry studies.
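The "stands alone" and "context-independent" checks can be partly automated. This sketch flags paragraphs outside the 60–100 word range and a short, illustrative list of phrases that only make sense with surrounding context. The phrase list is our assumption and is not exhaustive.

```python
# Phrases that only make sense with surrounding context (illustrative, not exhaustive)
CONTEXT_PHRASES = [
    "as mentioned above",
    "as mentioned earlier",
    "see below",
    "later in this section",
    "the previous section",
]


def audit_paragraph(paragraph: str, low: int = 60, high: int = 100) -> list[str]:
    """Return warnings for a paragraph meant to be quoted on its own."""
    warnings = []
    words = len(paragraph.split())
    if not low <= words <= high:
        warnings.append(f"{words} words (target {low}-{high})")
    lowered = paragraph.lower()
    for phrase in CONTEXT_PHRASES:
        if phrase in lowered:
            warnings.append(f"context-dependent phrase: '{phrase}'")
    return warnings


print(audit_paragraph("As mentioned above, schema helps search engines classify content."))
```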

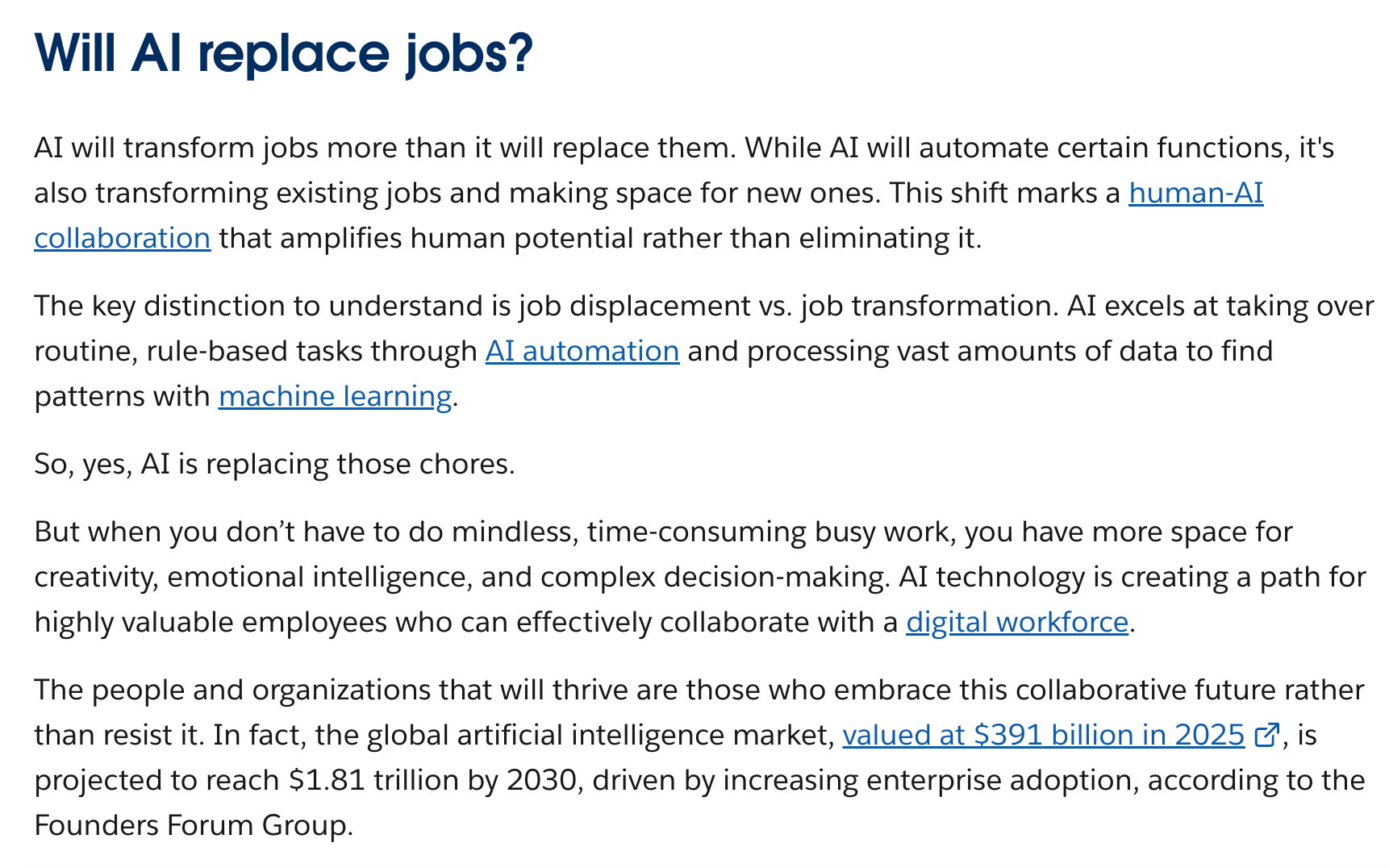

For example, this Salesforce blog post on “Will AI Replace Jobs?” draws on many credible data sources, which helps it appear in Google’s AI Overviews for the same phrase. Each section of the post also delivers value on its own, which keeps the language context-independent.

These references signal credibility and reduce uncertainty for AI models deciding what to surface. And credibility compounds over time.

Original research works even better.

Running surveys, compiling internal data, or creating proprietary frameworks gives AI systems something unique to reference.

When the data doesn’t exist elsewhere, your content becomes the default source.

That’s why original studies, benchmarks, and structured frameworks often show up repeatedly across AI responses, even months after publication—just like this AI citation report we published.

5. Implement structured data and schema markup

Schema is not a direct AI optimization tactic. But it helps your content surface in Google, and many AI platforms still rely on traditional search engines as part of how they retrieve and validate information.

Schema, or structured data, tells search engines what your content means, not just what it says. It removes ambiguity by clearly defining things like questions, answers, articles, products, and organizations.

The most effective approach to implement this is a dual setup:

- Add visible FAQs on the page for users (what AI systems tend to quote).

- Apply FAQ schema markup to those same questions for search engines (makes the same content easier to discover, classify, and reuse).
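For the schema half of this setup, here is a sketch that generates schema.org `FAQPage` JSON-LD from the same question-and-answer pairs shown on the page. The `faq_jsonld` helper and the sample FAQ are hypothetical. The property names (`mainEntity`, `Question`, `acceptedAnswer`, `Answer`) follow the schema.org vocabulary.

```python
import json


def faq_jsonld(faqs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs that are also visible on the page."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(data, indent=2)


faqs = [
    ("What is an AI visibility score?",
     "A measure of how often your brand appears in relevant AI-generated answers."),
]
# Embed the output in the page inside <script type="application/ld+json"> ... </script>
print(faq_jsonld(faqs))
```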

Schema works best when paired with semantic HTML. Using meaningful tags like <header>, <nav>, and <article> helps systems understand content structure. Generic <div> tags don’t carry that meaning. Most CMSs already output semantic tags by default.

LLMs rely on these structural signals to:

- Identify sections and intent

- Extract answers cleanly

- Summarize content accurately

A few schema types consistently matter most for AI visibility:

- FAQ schema for frequently asked questions

- Article schema with proper metadata (author, publish date, last modified)

- Organization schema to reinforce entity associations

- Product schema for e-commerce and SaaS pages
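Article and Organization markup can be combined: an `Article` carries author and date metadata, and its `publisher` can be an `Organization` with `sameAs` links that reinforce entity association. This sketch uses schema.org property names. The author, company, and URLs are placeholders.

```python
import json
from datetime import date


def article_jsonld(headline: str, author: str, published: date, modified: date,
                   org_name: str, org_url: str, same_as: list[str]) -> str:
    """schema.org Article JSON-LD with author, dates, and a publisher Organization."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),  # keep in sync with the visible "last updated" date
        "publisher": {
            "@type": "Organization",
            "name": org_name,
            "url": org_url,
            "sameAs": same_as,  # other profiles that identify the same entity
        },
    }
    return json.dumps(data, indent=2)


print(article_jsonld(
    headline="What is an AI visibility score?",
    author="Jane Doe",
    published=date(2025, 1, 10),
    modified=date(2025, 3, 4),
    org_name="Example Co",
    org_url="https://www.example.com",
    same_as=["https://www.linkedin.com/company/example"],
))
```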

The same signals that make content easier for screen readers to navigate—clear structure, labels, and hierarchy—also help AI systems understand and interpret pages.

Finally, schema only helps if it’s implemented correctly. Always validate markup using Google’s Rich Results Test to confirm it’s readable and error-free.

6. Target pages AI models already trust as citation sources

AI systems reuse sources they already trust. If a page appears often in AI answers, it’s far more likely to appear again. Getting your brand mentioned on those pages gives you access to that existing trust.

This creates a multiplier effect, similar to how Google’s query fan-out works.

When a user asks a single question, LLMs don’t treat it as one isolated prompt. They internally expand it into multiple related queries, variations, follow-ups, and adjacent intents.

For example, a question like “best SEO tools” can fan out into:

- “best SEO tools for content optimization”

- “SEO tools for beginners”

- “alternatives to X”

- “tools similar to X”

- “What should I use instead of Y”

Because many of these variations are answered from the same small set of trusted sources, a single placement on one of those pages can surface your brand across many prompt variations. You don’t need to win every query. You just need to be present in the sources AI already pulls from.
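You can't observe an LLM's internal fan-out directly, but you can approximate it when building a tracking prompt set. This hypothetical sketch expands a seed topic into variations using hand-written templates modeled on the examples above. The templates, use cases, and brand names are assumptions, and the output does not reproduce how any AI platform actually expands queries.

```python
from itertools import product

TEMPLATES = [
    "best {topic}",
    "best {topic} for {use_case}",
    "{topic} for beginners",
    "alternatives to {brand}",
    "tools similar to {brand}",
]


def expand_prompts(topic: str, use_cases: list[str], brands: list[str]) -> list[str]:
    """Expand one seed topic into a de-duplicated list of prompt variations to track."""
    prompts = []
    for template in TEMPLATES:
        # str.format ignores unused keyword arguments, so every template gets all fields
        for use_case, brand in product(use_cases, brands):
            prompt = template.format(topic=topic, use_case=use_case, brand=brand)
            if prompt not in prompts:
                prompts.append(prompt)
    return prompts


for prompt in expand_prompts("SEO tools", ["content optimization", "agencies"], ["Surfer"]):
    print(prompt)
```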

The key is knowing which pages those are. Start by identifying citation patterns in your category:

- Use LLM tracking tools like Surfer to see which pages and domains appear most often in AI answers.

- Compare results across ChatGPT, Gemini, Perplexity, and Google AI Overviews.

- Look for the same articles or sites showing up repeatedly.
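If your tracking tool lets you export the cited URLs for each answer, spotting repeat sources comes down to counting domains. This sketch assumes one list of cited URLs per answer and counts each domain once per answer. The URLs are made up for illustration.

```python
from collections import Counter
from urllib.parse import urlparse


def top_cited_domains(citations_per_answer: list[list[str]], n: int = 3) -> list[tuple[str, int]]:
    """Count how many answers cite each domain (once per answer) and return the top n."""
    counts = Counter()
    for urls in citations_per_answer:
        domains = {urlparse(url).netloc.removeprefix("www.") for url in urls}
        counts.update(domains)
    return counts.most_common(n)


citations = [
    ["https://en.wikipedia.org/wiki/Search_engine_optimization",
     "https://www.reddit.com/r/SEO/comments/abc"],
    ["https://searchengineland.com/seo-tools",
     "https://www.reddit.com/r/SEO/comments/xyz"],
    ["https://www.reddit.com/r/bigseo/comments/123",
     "https://searchengineland.com/seo-tools"],
]
print(top_cited_domains(citations))
```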

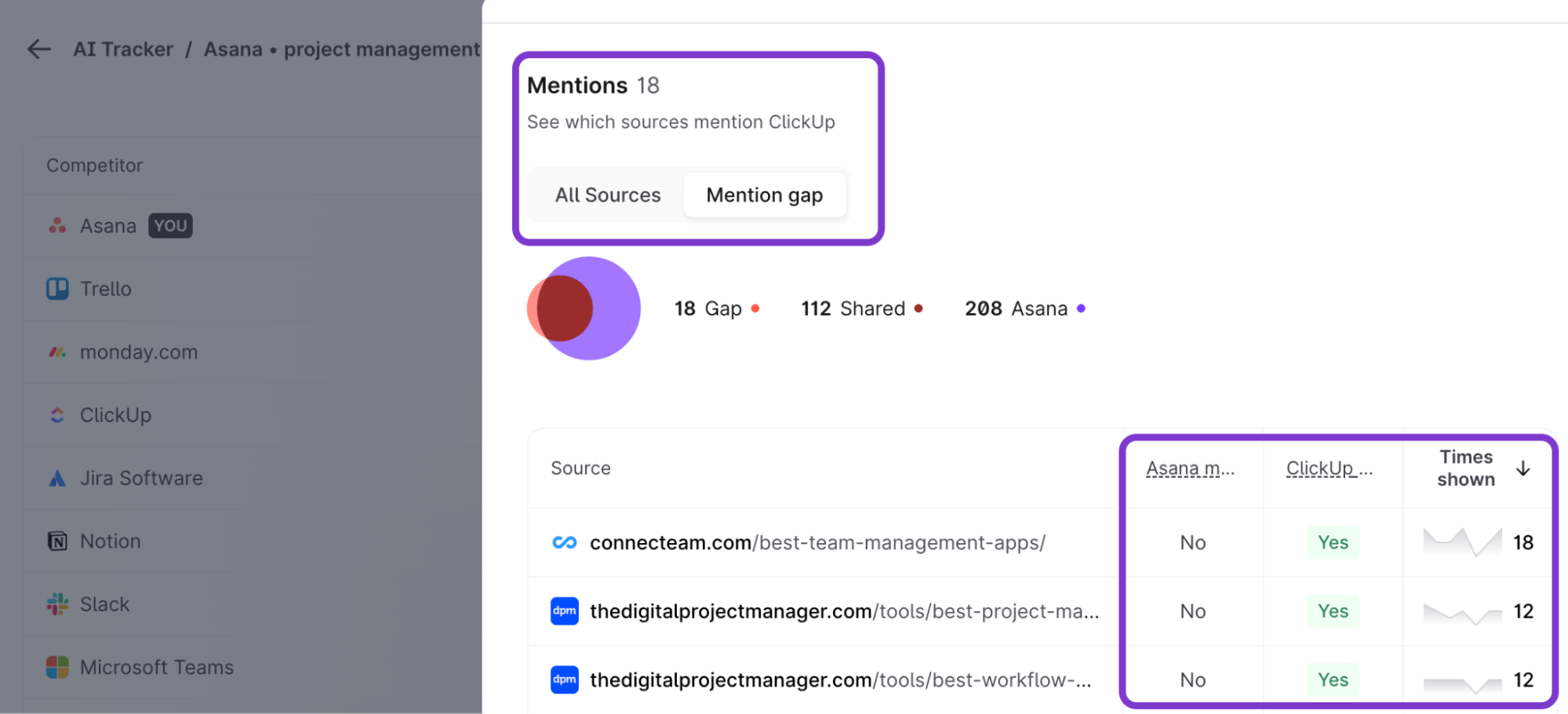

Next, audit competitor visibility.

In many cases, competitors dominate AI answers even when their SEO rankings are weaker. That’s usually a signal they’re being cited on trusted third-party pages.

Surfer’s AI Tracker can help surface these blind spots by showing where competitors appear in AI responses and you don’t.
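At its core, a gap report like this is a set difference: prompts where a competitor is mentioned and your brand isn't. This sketch assumes you already have, for each tracked prompt, the set of brands mentioned in the answer. The prompts and brand sets are invented.

```python
def visibility_gaps(mentions_by_prompt: dict[str, set[str]], brand: str,
                    competitors: list[str]) -> dict[str, list[str]]:
    """Prompts where at least one competitor appears but `brand` does not."""
    gaps = {}
    for prompt, brands in mentions_by_prompt.items():
        if brand in brands:
            continue  # already visible for this prompt
        present = [c for c in competitors if c in brands]
        if present:
            gaps[prompt] = present
    return gaps


mentions_by_prompt = {
    "best SEO tools": {"Surfer", "Semrush", "Ahrefs"},
    "SEO tools for content optimization": {"Clearscope", "MarketMuse"},
    "how to write a content brief": set(),
}
print(visibility_gaps(mentions_by_prompt, "Surfer", ["Clearscope", "MarketMuse", "Semrush"]))
```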

Once you know which pages matter, the strategy is straightforward:

- Prioritize articles, comparisons, and guides AI already cites

- Reach out to those sources directly

- Offer something useful: original data, a clear use case, or expert input

- Make sure the mention clearly reinforces entity association (brand name, category, use case)

Wikipedia is also one of the most frequently referenced sources across LLMs. That means having a verified, accurate presence there can significantly improve how often your brand is surfaced indirectly through AI answers.

If your brand keeps appearing in the same trusted places, AI starts treating it as part of the answer set.

Measure your AI visibility score with Surfer

AI visibility is harder to measure than traditional SEO performance.

There’s no fixed ranking system and no single position to track. The same question can surface different answers depending on phrasing, platform, or timing. That makes classic SEO metrics incomplete when discovery starts happening inside AI answers.

At the same time, AI search hasn’t replaced traditional SEO. It still depends on it.

Platforms like ChatGPT and Perplexity pull from content that already performs well in search. That means traditional SEO influences what AI systems can see, while AI visibility determines what users actually discover.

This is why Surfer brings search engine visibility and AI visibility together, so you can see how one feeds the other instead of tracking them separately across different tools.

Surfer helps you track:

- Visibility Score, showing how often and how prominently your brand appears across ChatGPT, Perplexity, and Google AI Overviews.

- Mention Score, which shows how frequently your pages are referenced in AI answers.

- Prompt-level visibility, so you can monitor the exact questions that surface your brand.

This unified view also helps teams spot impact earlier.

AI-driven discovery often shows up first as branded search, not conversions. Users discover a brand through AI answers, then return later through Google. By the time they convert, the original discovery source is invisible in analytics.

Vercel saw this firsthand.

As their content appeared more often in ChatGPT answers, they tracked spikes in branded search traffic in Google Search Console, confirming that AI mentions were driving discovery before conversions followed.
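Vercel's exact method isn't described here. A simple way to look for similar spikes is to compare each week's branded clicks (for example, exported from Google Search Console) against a trailing average. The four-week window, the 1.5x threshold, and the click counts below are arbitrary assumptions for illustration.

```python
def branded_spikes(weekly_clicks: list[int], window: int = 4, threshold: float = 1.5) -> list[int]:
    """Indexes of weeks whose branded clicks exceed `threshold` x the trailing `window`-week average."""
    spikes = []
    for i in range(window, len(weekly_clicks)):
        baseline = sum(weekly_clicks[i - window:i]) / window
        if baseline > 0 and weekly_clicks[i] > threshold * baseline:
            spikes.append(i)
    return spikes


weekly_clicks = [400, 420, 410, 430, 440, 700, 720, 450]
print(branded_spikes(weekly_clicks))
```

Note that a spike inflates the trailing baseline for the following weeks, which is why the second high week is not flagged here. A longer window or a median baseline would be less sensitive to that.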

That’s exactly why tracking visibility score matters now. If AI answers influence how people decide, measuring visibility is no longer optional.

To wrap up:

- AI answers are changing how people discover brands, often without clicking through to websites.

- Visibility score measures how often your brand appears across AI platforms, not just in search results.

- AI visibility depends on both traditional SEO signals and how AI systems select and reuse sources.

- Brand mentions, fresh content, structured data, and citation-ready pages all influence visibility.

- Tracking AI visibility early helps teams spot demand before it shows up in traffic or conversions.
