While we're still figuring out the rules of AI visibility, one thing is certain: we need to rethink monitoring, shifting from tracking page rankings to tracking whether we're included in AI conversations, be it as a source, a mention, or a recommendation.

In this guide, I'll show you how to see if your brand shows up for valuable and business-relevant prompts. I'll also explain what to do with the data you get and how to use it to improve your content strategy.

What is prompt tracking?

Prompt tracking is the practice of testing a defined set of prompts in AI systems and recording how your brand, product, or topic appears in the responses over time. At a high level, the process is simple:

- Find relevant prompts that you want your business to appear for

- Run them across AI platforms (ChatGPT, Perplexity, Claude, etc.)

- Check whether your brand appears in the response and keep an eye on the key data (competitors, narrative, etc.).

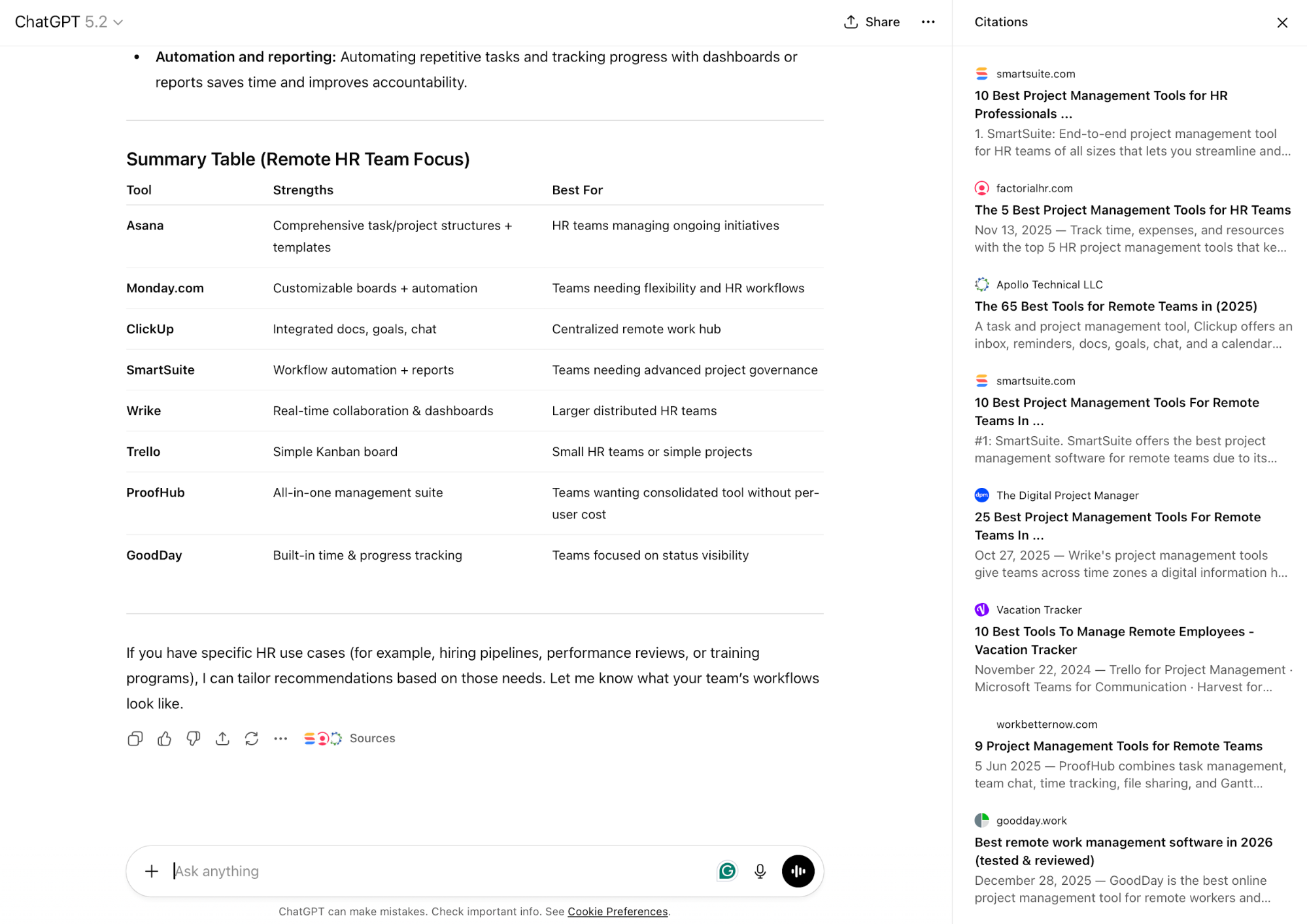

Say you run a project management tool and want to see if AI mentions you when someone searches for one. In this case, the prompt you'd track could look something like this:

"What are the best project management tools for remote HR teams?"

You'd run the prompt in an AI tool like ChatGPT and see what comes up.

As you look at the response, you'd ask yourself questions like:

- Is your brand mentioned?

- If not, who is?

- If yes, is it cited as a source or recommended directly?

- How is your brand or product presented (“best for startups,” “enterprise-grade,” “budget-friendly,” etc.)?
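If you review responses by hand, the first three questions can be turned into a quick check. The sketch below is a minimal illustration that assumes you've already copied the response text and its cited URLs. TaskFlow, PlanHub, BoardCo, and taskflow.example are hypothetical names, and whole-word matching is a simplification that will miss misspellings or paraphrases. The fourth question (framing) is still best judged by reading the answer.

```python
import re
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class ResponseCheck:
    brand_mentioned: bool
    competitors_mentioned: list[str] = field(default_factory=list)
    brand_cited: bool = False


def mentions(text: str, name: str) -> bool:
    """Case-insensitive whole-word match for a brand name."""
    return re.search(rf"\b{re.escape(name)}\b", text, re.IGNORECASE) is not None


def check_response(text, cited_urls, brand, brand_domain, competitors):
    """Answer 'Is my brand mentioned? Who else is? Am I cited?' for one response."""
    # Normalize cited URLs to bare hostnames so www. and paths don't matter.
    hosts = {(urlparse(u).hostname or "").removeprefix("www.") for u in cited_urls}
    return ResponseCheck(
        brand_mentioned=mentions(text, brand),
        competitors_mentioned=[c for c in competitors if mentions(text, c)],
        brand_cited=brand_domain in hosts,
    )


# Hypothetical example: the brand, competitors, and domain are made up.
result = check_response(
    text="For remote HR teams, PlanHub and TaskFlow are strong picks.",
    cited_urls=["https://www.taskflow.example/pricing", "https://reviews.example.org/pm"],
    brand="TaskFlow",
    brand_domain="taskflow.example",
    competitors=["PlanHub", "BoardCo"],
)
print(result)
```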

You'd then gather the responses, repeat the process for all the relevant prompts, and draw conclusions (more on that a bit later).

While tracking prompts is pretty simple, it may give you a headache or two in the beginning because large language models (LLMs) don’t have rankings in the traditional SEO sense. With standard Google search, we're used to results being:

- Ordered

- Stable enough to track daily or weekly

- Tied to a page position (e.g., position #3)

However, LLMs don't work the same way. They generate answers from their training data or from snippets of information extracted from web pages, which makes AI answers highly volatile.

For better or worse, AI answers aren't ranked but generated, which means:

- Your brand might appear in a paragraph, a bullet list, a comparison table, or not at all

- The same prompt can provide different answers each time

- There's no universal “position #1” equivalent across all answers

This calls for a major shift in how we approach performance tracking. But it also gives us entirely different reasons to focus on tracking in the first place.

Why brands need to monitor prompts and AI mentions

Brands need to monitor prompts and AI mentions because users increasingly rely on LLMs for high-intent decisions, so AI answers directly shape demand capture, brand preference, and conversions.

Unlike Google searches, AI queries behave like late-stage search signals, where users are actively considering options or looking to solve a highly specific problem.

Let me go back to the project management tools as an example.

In my prompt, you can see that I'm looking for the best options specifically tailored to HR professionals working remotely. All of these are context cues that LLMs pick up on.

A Google search for a similar keyword will show me a list of pages that discuss project management tools and are well-optimized for that keyword. Meanwhile, an AI tool like ChatGPT will directly recommend options, which makes it more likely that I'll check them out.

Many users think this way, so it doesn't surprise me that AI traffic tends to convert better than organic search traffic.

This major trend in traffic sources and performance gives us many reasons for LLM prompt tracking, most notably:

Capture demand

LLMs are increasingly the point of first contact in buyer journeys, so not showing up for the relevant prompts can make you miss out on a big chunk of leads.

Competitive intelligence

Prompt tracking shows you how you stack up against competitors in AI responses. When an LLM suggests a competitor instead of you for the same prompt, it shows how the model perceives relative authority and relevance. Understanding these patterns helps you prioritize where to improve content, clarify positioning, or strengthen evidence signals.

Monitoring reputation

LLMs generate their responses based on patterns learned from massive amounts of content, including outdated, inaccurate, or even negative pieces. If left unchecked, these inaccuracies can silently shape what users believe about your brand.

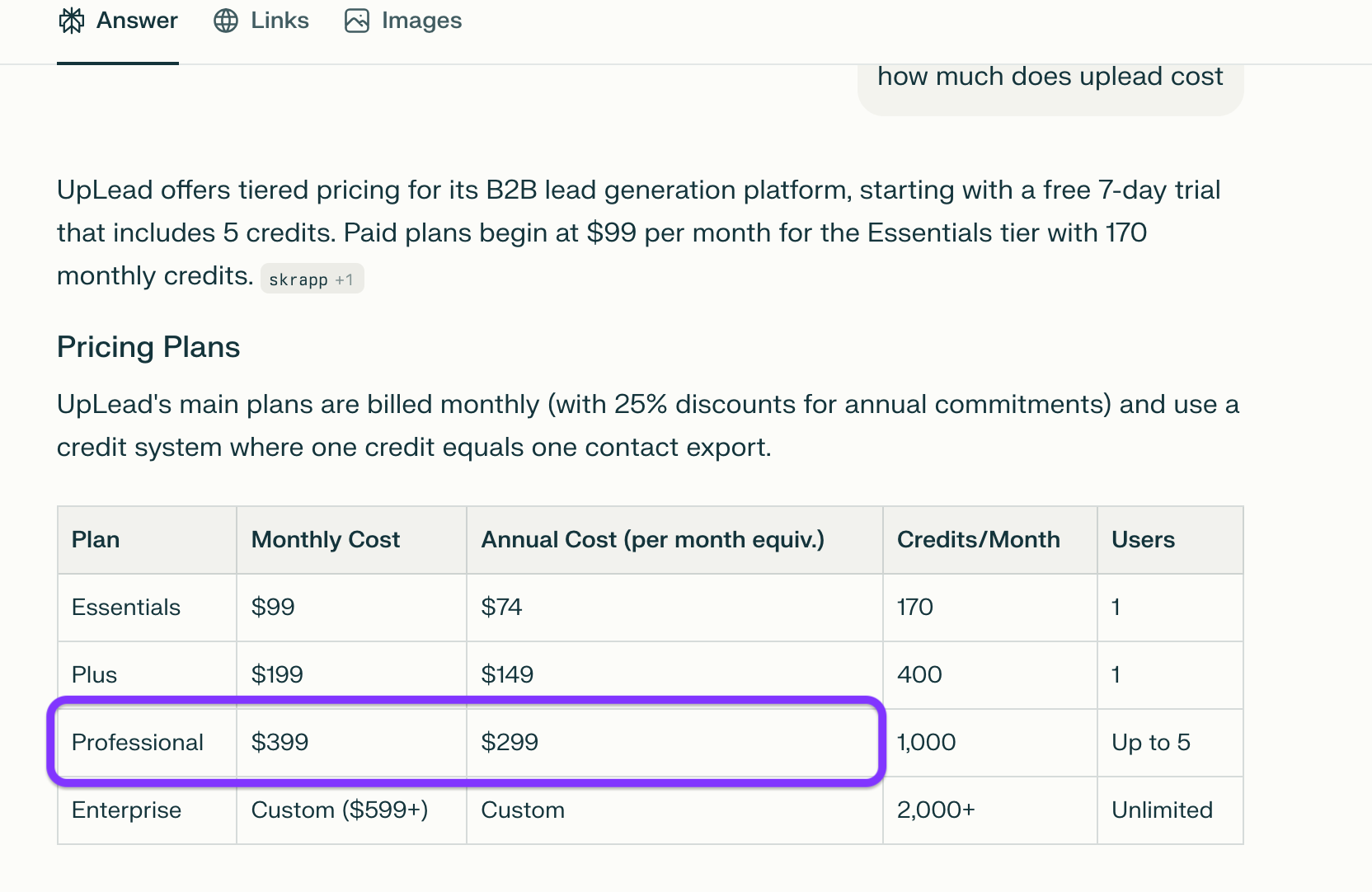

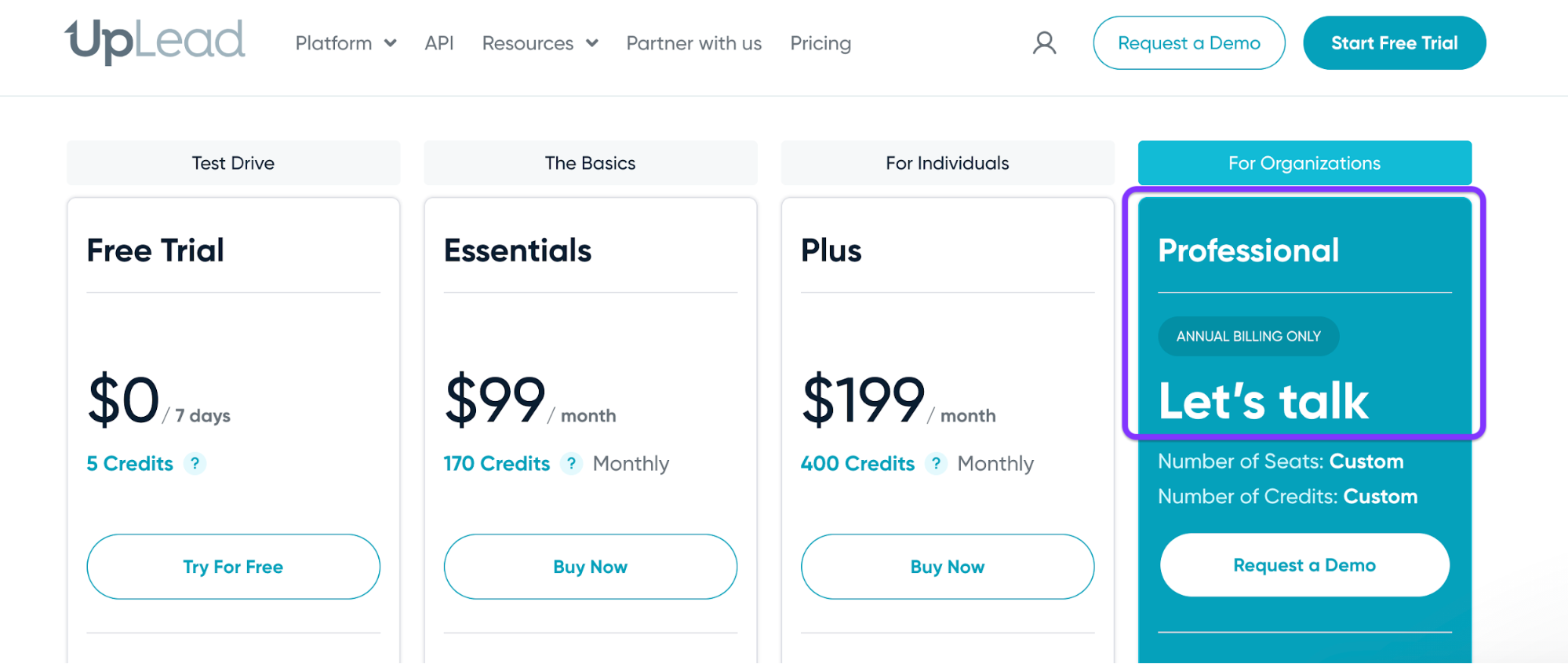

A small but notable example of brand misrepresentation is Perplexity's answer to a question about UpLead's pricing. It told me the Professional plan costs $399 per month, or $299 with annual billing.

Meanwhile, UpLead’s pricing page shows that the Professional plan only offers annual billing and that pricing is available upon request.

This error alone probably won't stop anyone from signing up for UpLead, but it can set distorted expectations and influence the purchase decision.

What should you monitor when tracking prompts?

While monitoring prompts, you should track three types of signals:

Brand mentions

A brand mention happens when an AI tool includes you in its response. Mentions can indicate what AI models know about your brand or product.

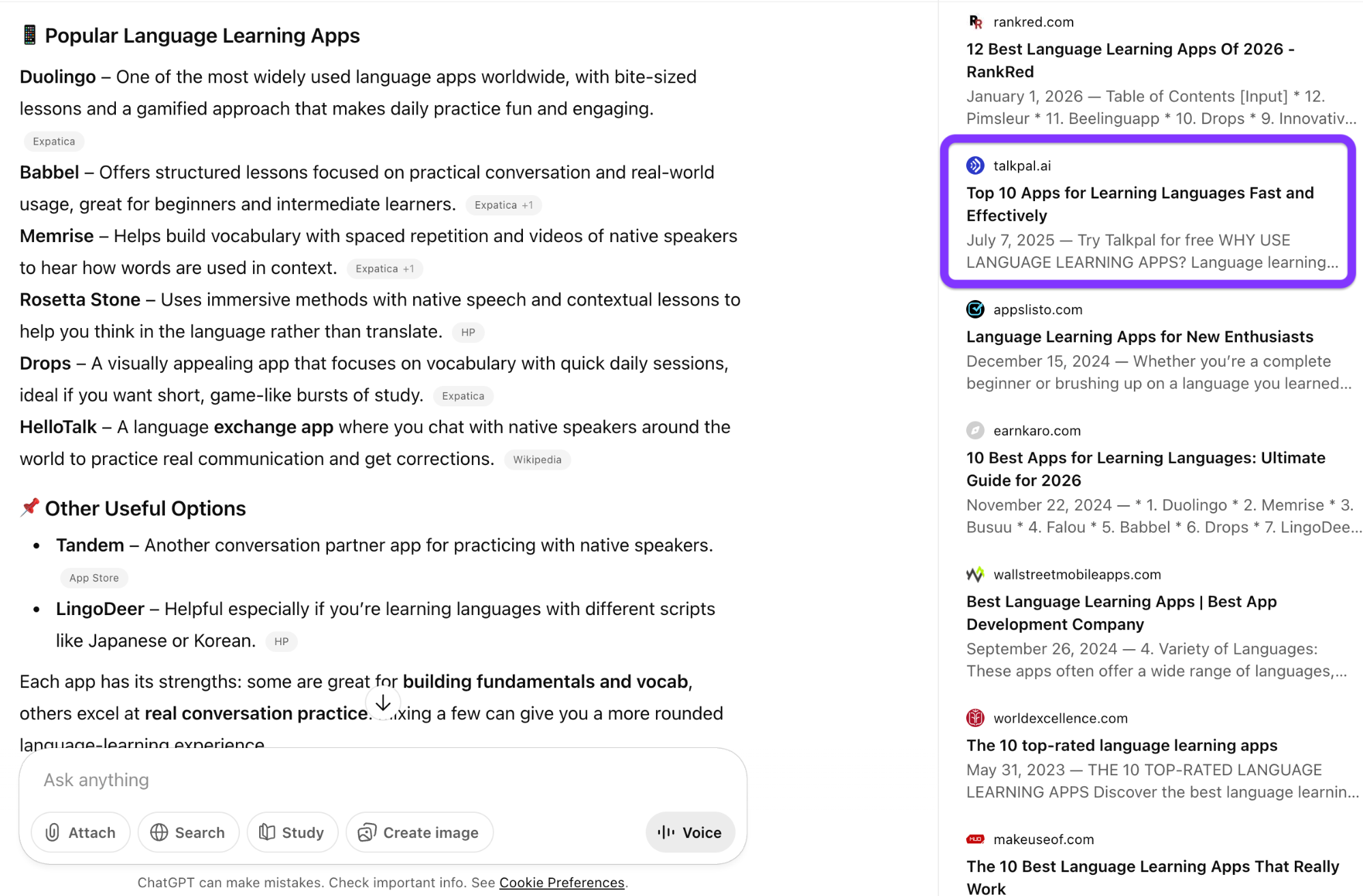

For example, when I talked to ChatGPT about using apps to learn a language, it briefly mentioned Duolingo and Babbel as popular options.

A mention can be:

- Recommendation: "Your product is recommended when someone is seeking advice."

- Incidental: "Other tools include X, Y, Z…" (no details, no rationale)

- Weakly positioned: "Also worth considering…" (soft language)

- Negative: "X is known for being expensive/limited/outdated…" (harmful framing)

- Misclassified: You’re mentioned, but in the wrong category ("CRM" when you’re a support desk)
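A rough first pass at sorting mentions into these buckets can be scripted with cue phrases. This is a hypothetical keyword heuristic, not how any tracking tool classifies mentions; the cue lists are assumptions you'd tune against responses you've labeled by hand. Misclassification (the wrong category) isn't detected here, because that requires knowing your actual category.

```python
import re

# Hypothetical cue phrases; tune them against responses you've labeled by hand.
CUES = {
    "negative": ["expensive", "limited", "outdated", "lacks"],
    "weak": ["also worth considering", "you could also", "alternatively"],
    "recommendation": ["best", "top pick", "go with", "choose", "recommend"],
}


def brand_sentences(text: str, brand: str) -> list[str]:
    """Return the sentences that mention the brand (naive split on . ! ?)."""
    sentences = re.split(r"(?<=[.!?])\s+", text)
    return [s for s in sentences if brand.lower() in s.lower()]


def classify_mention(text: str, brand: str) -> str:
    sentences = brand_sentences(text, brand)
    if not sentences:
        return "not mentioned"
    context = " ".join(sentences).lower()
    # Priority order: harmful framing first, then soft language, then praise.
    # Word boundaries stop "limited" from matching inside "unlimited".
    for label in ("negative", "weak", "recommendation"):
        if any(re.search(rf"\b{re.escape(cue)}\b", context) for cue in CUES[label]):
            return label
    return "incidental"


print(classify_mention("For remote teams, go with TaskFlow.", "TaskFlow"))
```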

Counting brand mentions helps you understand your overall visibility, but for more useful tracking you also need to look at the sentiment and context behind each mention.

At the most basic level, you should track which prompt type produced it (non-branded, branded, comparison) and how your brand was framed.

Citations

When an LLM cites you as a source, it treats your content as reputable and credible. That's why citations are typically more impactful than plain brand mentions: they show you have some influence on the model's response, which is a strong foundation for future optimization.

For example, when I asked ChatGPT to directly recommend language learning apps, it mainly cited independent listicles and sources like the App Store. But among them, it also included Talkpal, an actual app that it considers credible.

Now, not all citations are equally valuable. You should track three different types for effective monitoring:

- Owned citations (your website/docs/blog): Great for accuracy and product truth

- Earned citations (independent reviews, reputable publications, etc.): Often carry higher persuasive weight and signal category leadership

- Community/UGC citations (Reddit, forums, social media): Can be influential but carry a higher risk if negative narratives dominate
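Sorting cited URLs into these three buckets is mostly a matter of matching domains. Here's a minimal sketch, assuming you maintain your own lists of owned and community domains (the ones below are hypothetical or illustrative). Anything else defaults to "earned," so a competitor's own site would land there too and is worth reviewing manually.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical domain lists; replace them with your own.
OWNED = {"taskflow.example"}
COMMUNITY = {"reddit.com", "quora.com", "news.ycombinator.com"}


def _host(url: str) -> str:
    return (urlparse(url).hostname or "").removeprefix("www.")


def _in(host: str, domains: set[str]) -> bool:
    # Match the domain itself or any subdomain (e.g., docs.taskflow.example).
    return any(host == d or host.endswith("." + d) for d in domains)


def classify_citation(url: str) -> str:
    host = _host(url)
    if _in(host, OWNED):
        return "owned"
    if _in(host, COMMUNITY):
        return "community"
    return "earned"  # default bucket: review these by hand


def citation_mix(urls: list[str]) -> Counter:
    """Count how many cited URLs fall into each bucket."""
    return Counter(classify_citation(u) for u in urls)
```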

Besides seeing where you show up as a source, you should know which sources the model cites when it discusses your brand.

For example, when someone asks whether your brand is GDPR-compliant, you'll want to see an official compliance page, security documentation, or a reputable third-party audit mention instead of a random blog post as a source.

Recommendations

Being recommended by an AI platform means you're already doing something right, both in your content strategy and in your overall brand reputation. A direct recommendation is the strongest AEO (answer engine optimization) signal, so it's the outcome you're aiming for.

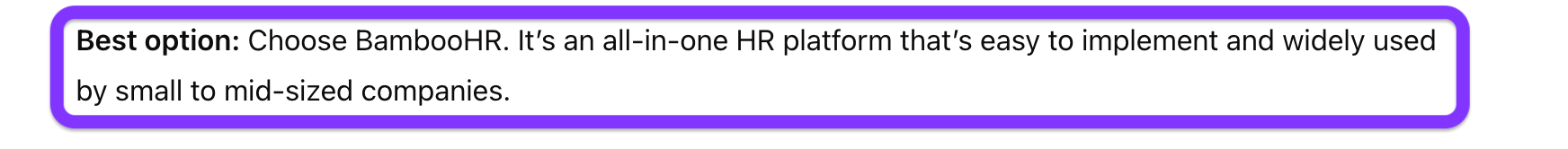

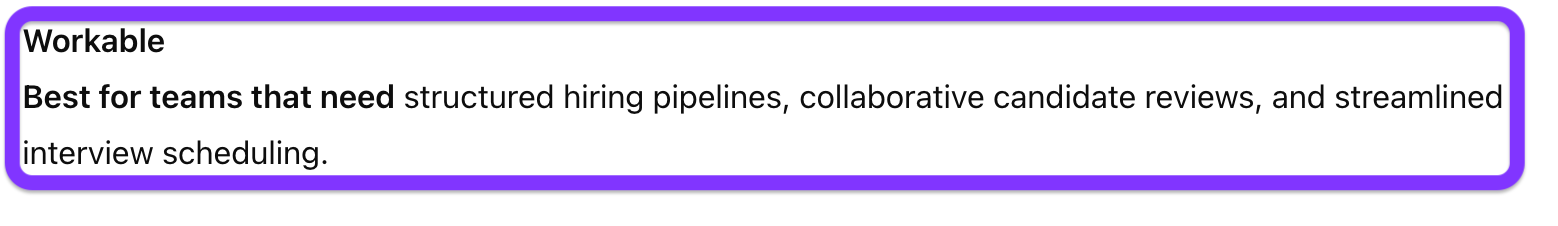

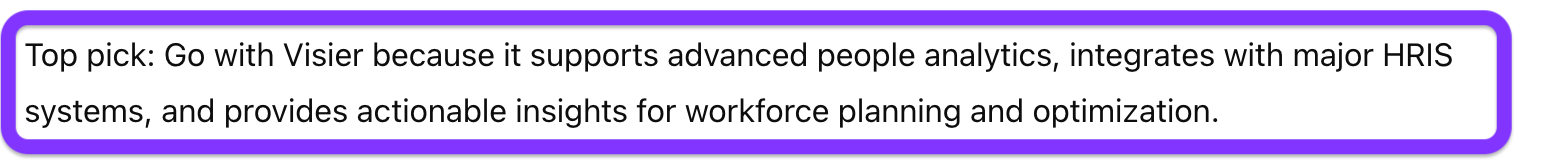

In most cases, a recommendation includes at least one of the following:

- Directive language: "Choose / go with / best option / top pick"

- Fit language: "Best for teams that need X"

- Justification: Reasons tied to user criteria ("because it supports X, integrates with Y, and has Z")
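These three signals can also be counted to give a rough endorsement strength score from 0 to 3. The patterns below are a hypothetical starting point based on the examples above, and the input is assumed to be only the part of the answer that discusses your brand.

```python
import re

# Hypothetical patterns for the three recommendation signals described above.
SIGNALS = {
    "directive": r"\b(choose|go with|best option|top pick)\b",
    "fit": r"\bbest for\b",
    "justification": r"\b(because|supports|integrates with)\b",
}


def endorsement_strength(passage: str) -> tuple[int, set[str]]:
    """Count how many distinct signal types appear (0 = none, 3 = all)."""
    text = passage.lower()
    found = {name for name, pattern in SIGNALS.items() if re.search(pattern, text)}
    return len(found), found


score, found = endorsement_strength(
    "TaskFlow is our top pick: best for distributed HR teams "
    "because it integrates with Slack."
)
print(score, sorted(found))
```

A score of 3 corresponds to the "top pick with reasoning" case; a passing mention scores 0.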

When tracking recommendations, you should focus on how strong the endorsement is. For example, an AI platform positioning you as a top pick while explaining the reasoning behind its decision carries far more weight than being shortlisted or mentioned in passing.

More importantly, you need to know you're being recommended for the right reasons based on accurate data.

For instance, an AI platform might recommend you for a misstated feature or a wrong pricing model, in which case the recommendation does more harm than good. If that happens, you need to center your content strategy around updating and/or correcting any mistakes.

How to track prompts in 3 steps

Prompt tracking doesn't have to involve any elaborate steps or frameworks. You can find all the insights you need in three steps:

1. Build a prompt list

You have limited bandwidth to monitor AI search visibility, so tracking dozens of random prompts will only waste your time.

A much more effective approach is to strategically select prompts that fall into three categories:

Uncovering these prompts requires a blend of common sense and a bit of research.

In my project management tool example, it's reasonable to assume that one of the starting points should be an intent-driven, business-relevant prompt about the best options.

Often, the response itself will give you additional prompts to consider.

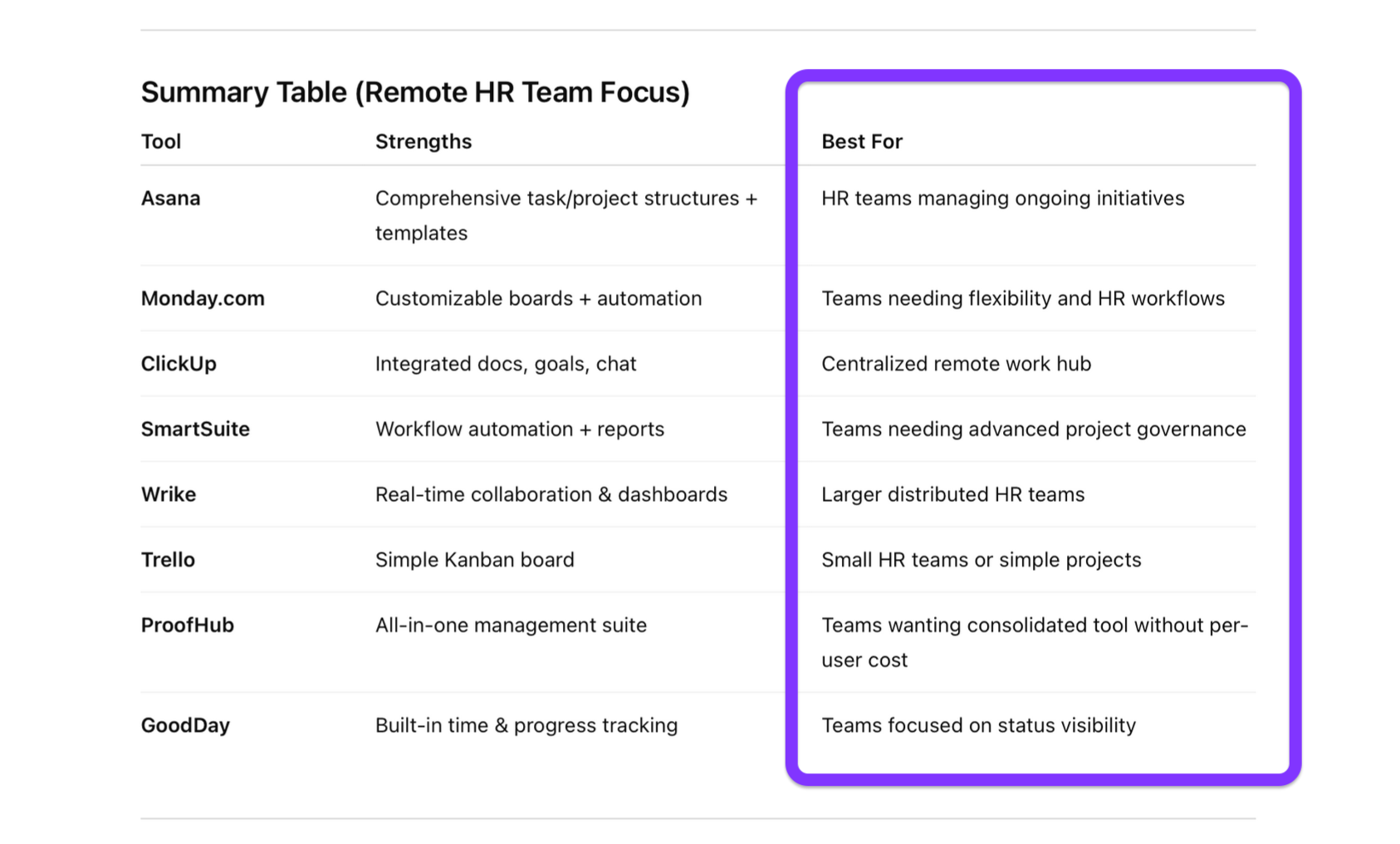

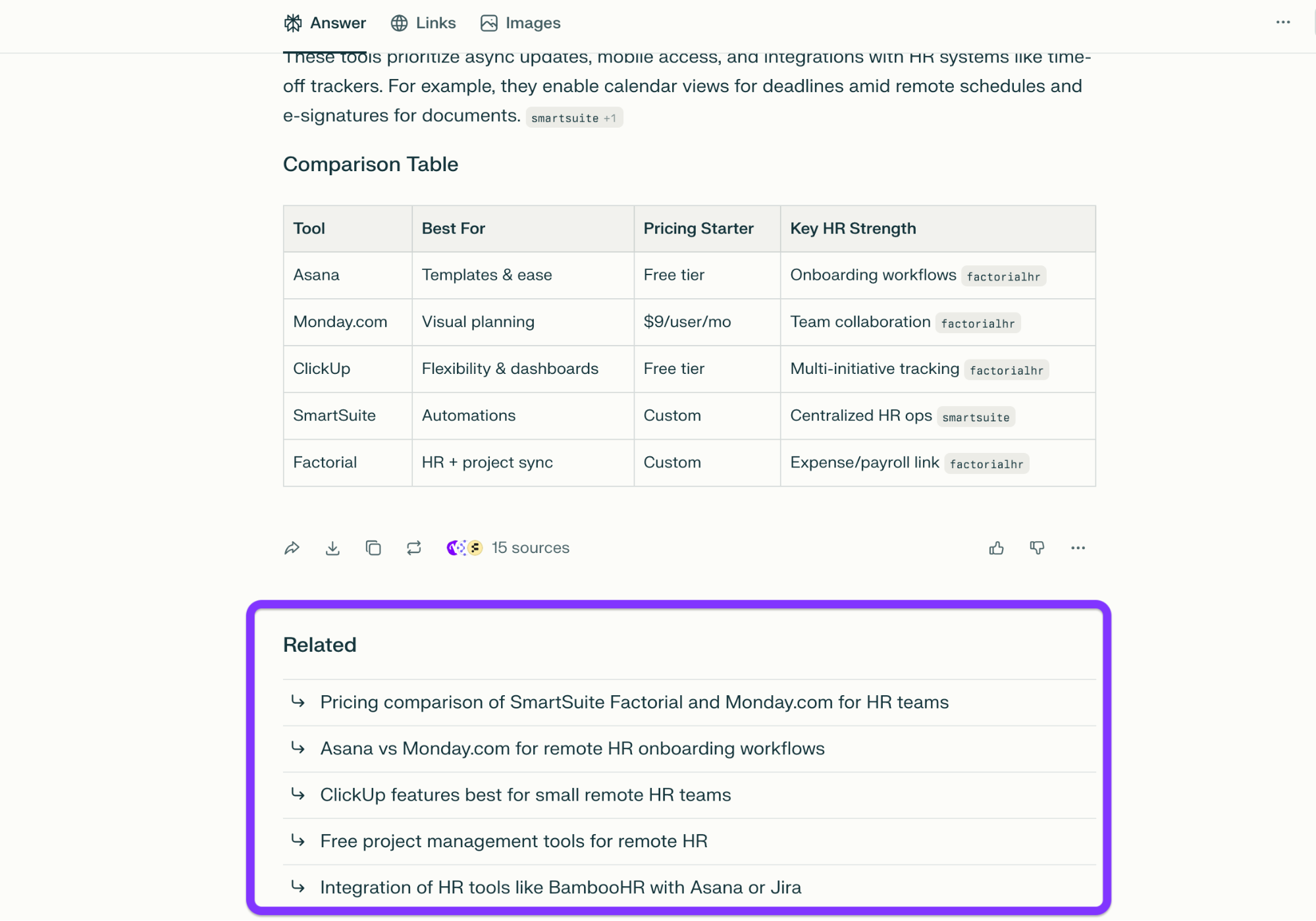

If you look at ChatGPT's response to the prompt I used above, you'll see it indirectly gave me a bunch of ideas for use-case prompts through the "Best for" column:

Some AI platforms might suggest potential prompts even more directly.

For example, Perplexity always includes a set of related prompts similar to Google's People Also Ask section, so it gave me a few prompt ideas when I asked about the best tools.

Since we (still) don't get first-party data from LLMs the way we do for keyword rankings and other search data (e.g., from Google Keyword Planner), choosing a starting point for your prompt list might involve a bit of experimentation.

To avoid too much guesswork, use your business experience to find prompts based on questions like:

- What questions do prospects ask before buying?

- What misunderstandings do we constantly have to correct?

- What comparisons or objections come up in sales calls?

- Which problems occur the most in our customer support tickets?

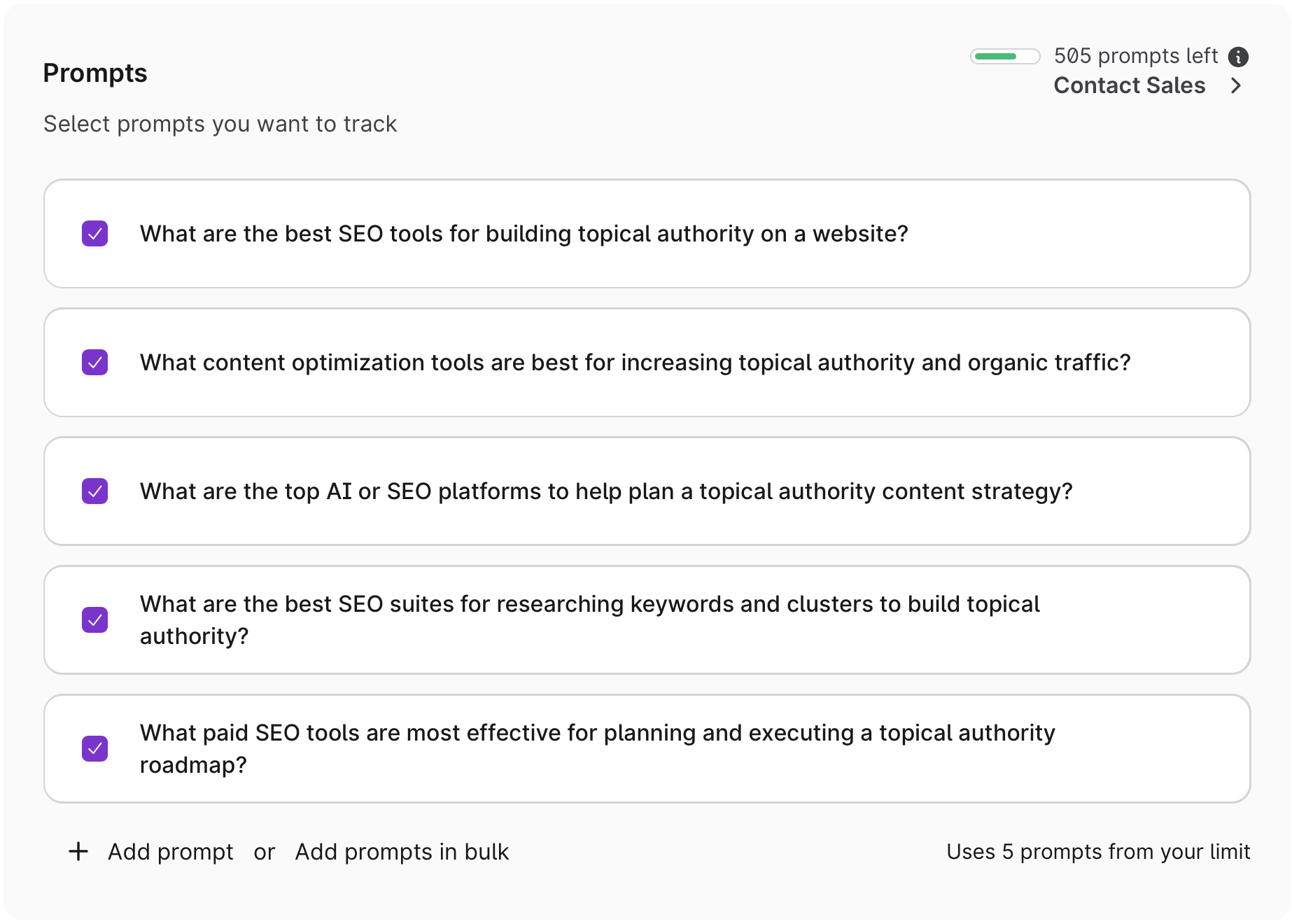

An easier way to do this is to use Surfer's AI Tracker.

- Enter the name of your brand

- Add a topic you'd like to track prompts for

- Select your preferred audience

Surfer will then generate a starter list of prompts that you can edit to your liking.

As for the number of prompts, focus on 20–30 prompts per category or core topic.

The list will evolve as you track their performance and your overall LLM visibility, so update the list as you spot new optimization opportunities.
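If you want a rough starting list before refining it by hand or in a tool, simple templates can expand one topic into many prompt variations. This is an illustrative sketch: the templates, audiences, and use cases are hypothetical placeholders, and the default cap of 30 reflects the 20–30 prompts per topic suggested above.

```python
from itertools import product

# Hypothetical templates; {brand}, {topic}, {audience}, {use_case} are filled in below.
TEMPLATES = [
    "What are the best {topic} for {audience}?",
    "Which {topic} work best for {use_case}?",
    "How does {brand} compare with other {topic} for {audience}?",
]


def build_prompts(brand, topic, audiences, use_cases, limit=30):
    """Expand templates into unique prompts, capped at `limit` per topic."""
    prompts = [
        template.format(brand=brand, topic=topic, audience=audience, use_case=use_case)
        for template, audience, use_case in product(TEMPLATES, audiences, use_cases)
    ]
    # Templates that ignore a placeholder produce duplicates; drop them, keep order.
    return list(dict.fromkeys(prompts))[:limit]


prompts = build_prompts(
    brand="TaskFlow",
    topic="project management tools",
    audiences=["remote HR teams", "startups"],
    use_cases=["hiring pipelines", "employee onboarding"],
)
for p in prompts:
    print(p)
```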

2. Capture AI-generated responses and mentions

Once you've made the prompt list, the rest of the tracking process depends on your tech stack. You can go down the manual route and:

- Run a prompt through different models

- Check the AI-generated responses for key data (mentions, sources, etc.)

- Enter the data into a spreadsheet
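If you do start manually, logging every run in the same format makes later analysis much easier. Here's a minimal sketch using Python's standard csv module; the column names are assumptions, not a required schema.

```python
import csv
import os
from datetime import date

# Assumed columns; adapt them to whatever you want to analyze later.
FIELDS = ["date", "platform", "prompt", "brand_mentioned", "competitors", "sources"]


def log_result(path, platform, prompt, brand_mentioned, competitors, sources, day=None):
    """Append one observation to a CSV file, writing the header if the file is new."""
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "date": (day or date.today()).isoformat(),
            "platform": platform,
            "prompt": prompt,
            "brand_mentioned": brand_mentioned,
            # Multi-value fields are joined so each run stays on one row.
            "competitors": "; ".join(competitors),
            "sources": "; ".join(sources),
        })
```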

While this might work for a while, I don't recommend it because it's not practical. Do you really want to sign up for 5 different tools, then enter prompts every day until you run out of credits?

Even if you do, this isn't scalable. You can do it for a handful of prompts, but you'll end up wasting a ton of time (and money) when you get to 50 or 100.

Besides, we know that LLMs provide different answers for the same prompts, so you’d need to run a prompt dozens or even hundreds of times to spot any patterns.

In one experiment, Rand Fishkin had the same prompts tested almost 3,000 times to measure how consistent the responses were.

This means that to track prompts effectively, you need to run each prompt enough times for the results to converge on a stable pattern, such as the handful of brands that consistently appear.
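To see why repeated runs matter, the sketch below simulates a model whose answers include each brand at random with a fixed probability, then estimates your mention rate with a Wilson score interval. The `fake_answer` function and its probabilities are hypothetical stand-ins for real API calls; the point is that the interval narrows as the number of runs grows.

```python
import math
import random


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion."""
    if trials == 0:
        return (0.0, 1.0)
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    margin = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return (max(0.0, centre - margin), min(1.0, centre + margin))


def fake_answer(rng: random.Random) -> list[str]:
    """Hypothetical stand-in for an LLM call: each brand appears with a fixed probability."""
    odds = {"TaskFlow": 0.4, "PlanHub": 0.7, "BoardCo": 0.2}
    return [brand for brand, p in odds.items() if rng.random() < p]


def mention_rate(brand: str, runs: int, seed: int = 0):
    """Run the (simulated) prompt `runs` times and estimate how often `brand` appears."""
    rng = random.Random(seed)
    hits = sum(brand in fake_answer(rng) for _ in range(runs))
    return hits / runs, wilson_interval(hits, runs)


for runs in (10, 100, 1000):
    rate, (low, high) = mention_rate("TaskFlow", runs)
    print(f"{runs:>5} runs: {rate:.2f} (95% CI {low:.2f}-{high:.2f})")
```

With only 10 runs, the interval is wide enough that a single lucky or unlucky batch can look like a trend; at 1,000 runs it is far tighter.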

Doing this manually isn’t feasible, so I'd suggest using AI tracking tools to eliminate laborious work and focus on decision-making.

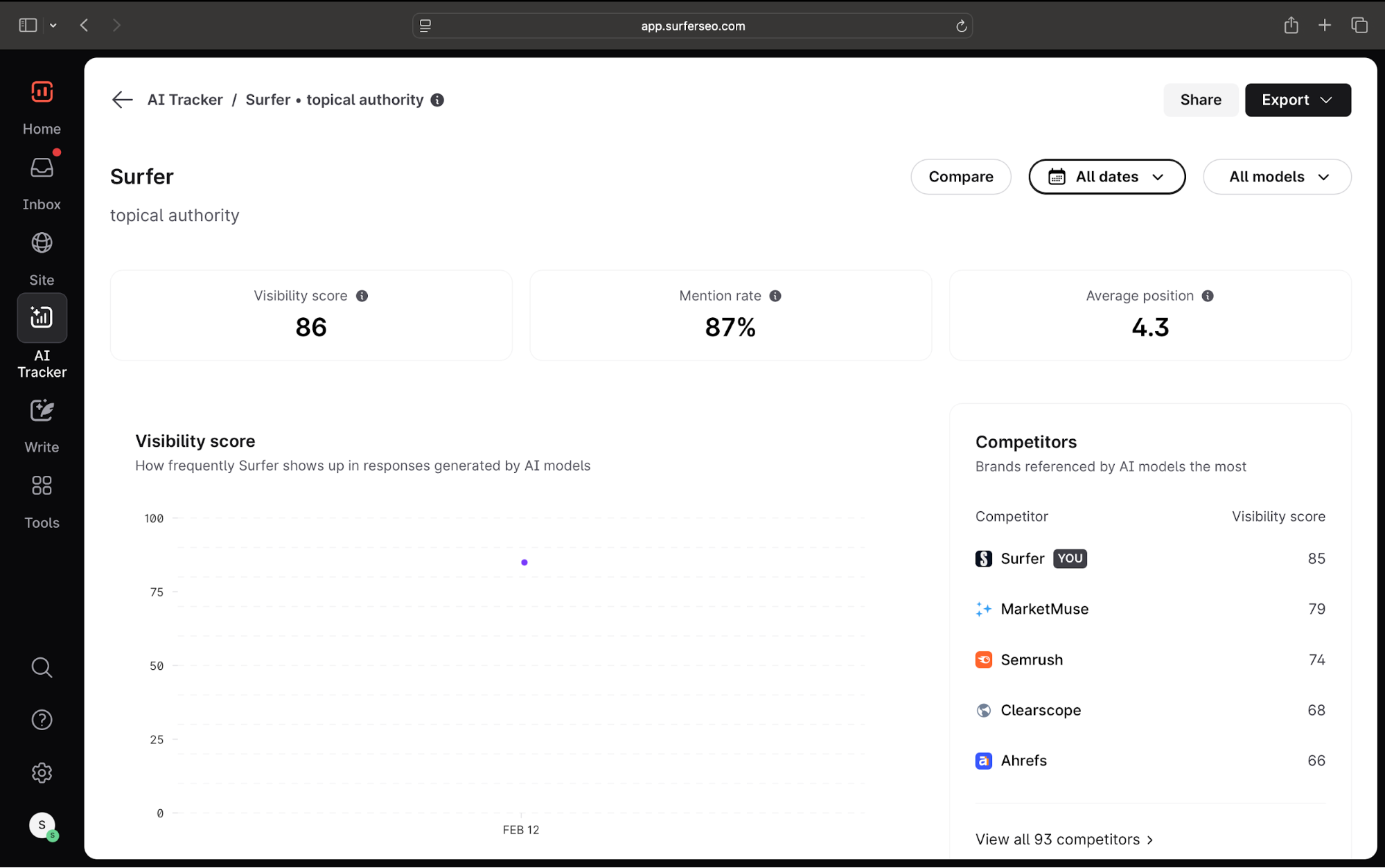

This won't come as a surprise, but Surfer's AI Tracker tells you pretty much everything you need to know about your brand's presence across AI models.

AI Tracker runs your prompts across major AI platforms to give you a bird's-eye overview of your AI visibility.

In the dashboard, you'll see high-level data like your brand's mention rate and average position in AI-generated answers. This data is aggregated into your overall visibility score, which simplifies monitoring across prompts and models.
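This isn't Surfer's formula. It's a simplified illustration of the two underlying ideas: mention rate (the share of answers that include your brand) and average position (where your brand first appears relative to the other tracked brands). The brand names and answers are hypothetical, and plain substring matching is a simplification.

```python
def brand_positions(text: str, brands: list[str]) -> dict[str, int]:
    """Rank tracked brands by where they first appear in an answer (1 = first)."""
    lowered = text.lower()
    found = sorted((lowered.find(b.lower()), b) for b in brands if b.lower() in lowered)
    return {brand: rank for rank, (_, brand) in enumerate(found, start=1)}


def summarize(responses: list[str], brand: str, brands: list[str]) -> dict:
    """Mention rate and average position of `brand` across many answers."""
    ranks = [brand_positions(answer, brands).get(brand) for answer in responses]
    hits = [rank for rank in ranks if rank is not None]
    return {
        "mention_rate": len(hits) / len(responses) if responses else 0.0,
        "avg_position": sum(hits) / len(hits) if hits else None,
    }


# Hypothetical answers to the same prompt collected over several runs.
answers = [
    "PlanHub and TaskFlow lead the pack.",
    "TaskFlow is a solid pick for HR teams.",
    "BoardCo is the cheapest option.",
]
print(summarize(answers, "TaskFlow", ["TaskFlow", "PlanHub", "BoardCo"]))
```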

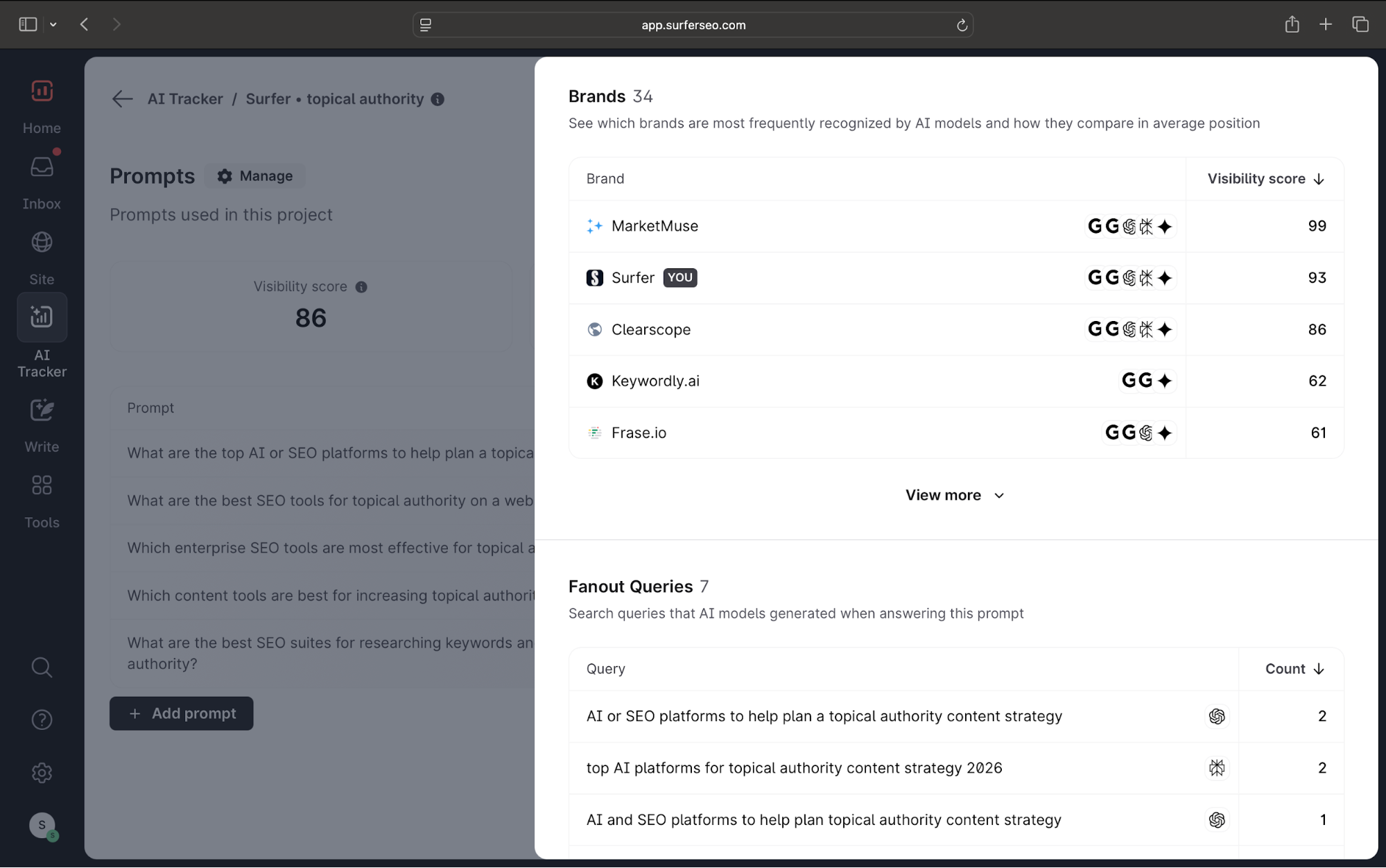

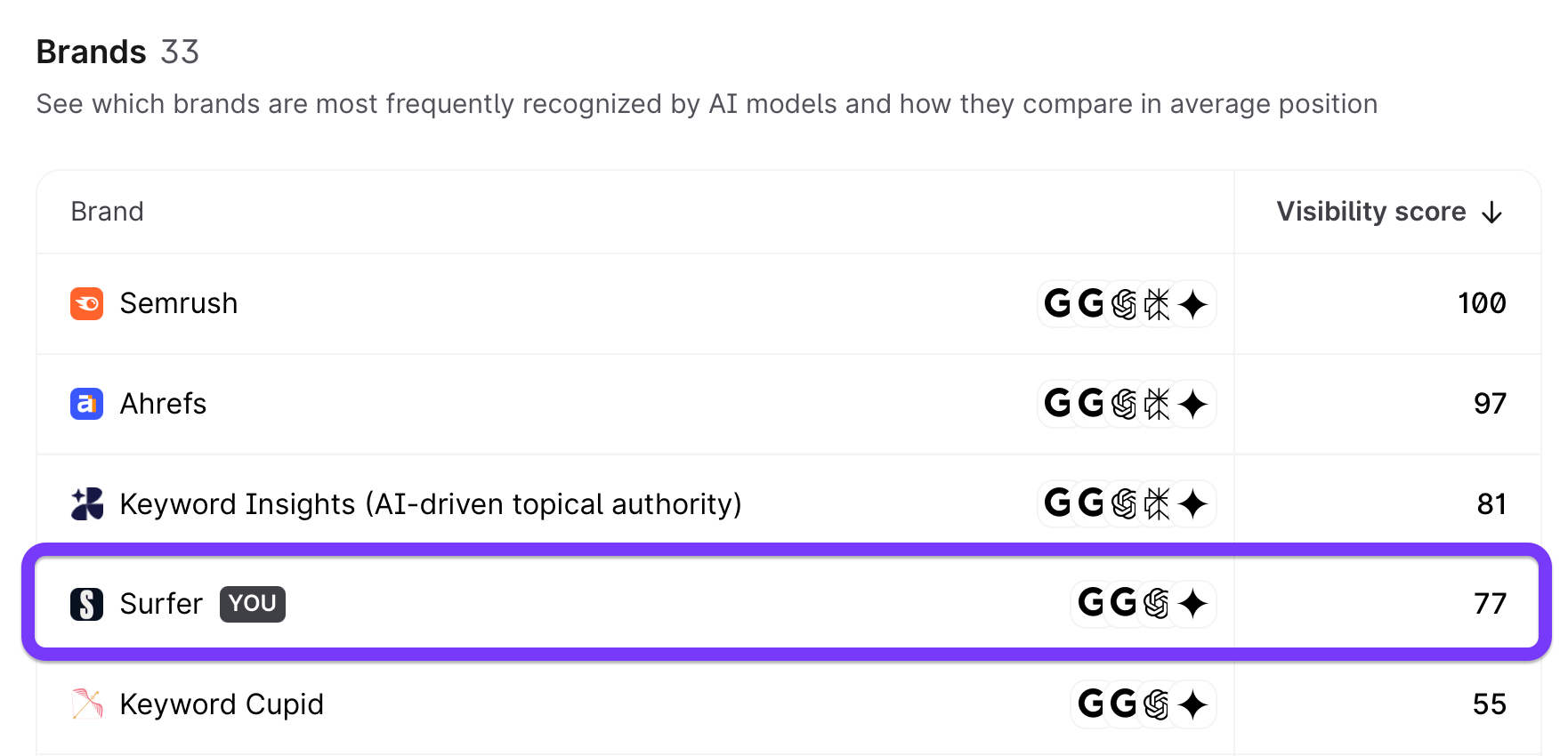

For more details, check out the Prompt report, which tells you which queries you show up for the most (or least). Dig in further to find other brands that AI tools mention so you can check your standing against competitors.

For example, below you can see that Surfer is often accompanied by Clearscope and MarketMuse, two competing tools.
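If you're collecting responses yourself, co-mentions like these can be counted directly. Here's a minimal sketch, assuming you have a list of answer texts and a list of competitor names (both hypothetical below). It counts how often each competitor appears in answers that also mention your brand.

```python
from collections import Counter


def co_mentions(responses: list[str], brand: str, competitors: list[str]) -> Counter:
    """Count competitors that appear in the same answers as `brand`."""
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        if brand.lower() not in lowered:
            continue  # only answers that mention your brand are relevant here
        counts.update(c for c in competitors if c.lower() in lowered)
    return counts


answers = [
    "TaskFlow and PlanHub are both popular.",
    "Consider TaskFlow, PlanHub, or BoardCo.",
    "PlanHub is the usual choice.",
]
print(co_mentions(answers, "TaskFlow", ["PlanHub", "BoardCo"]).most_common())
```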

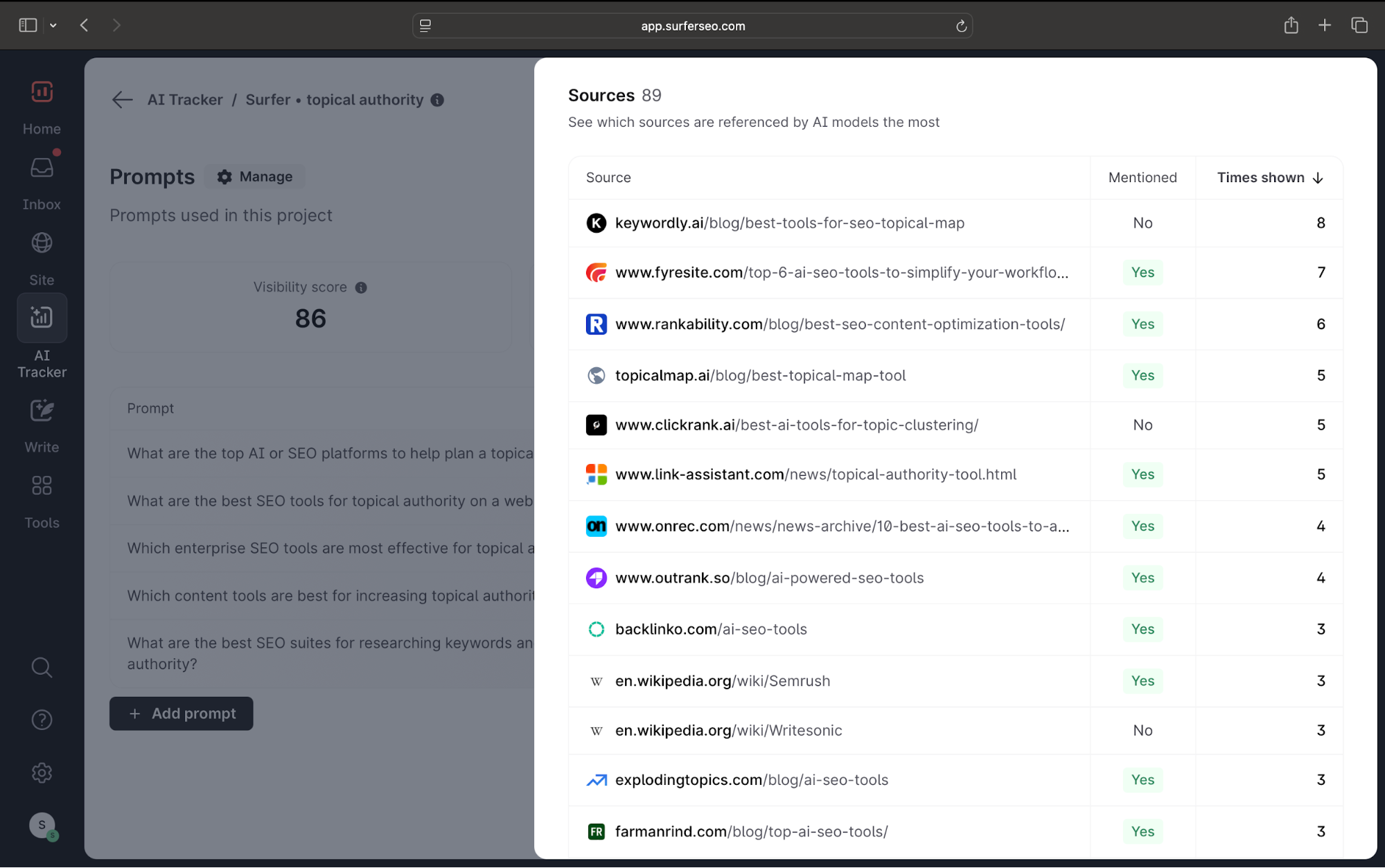

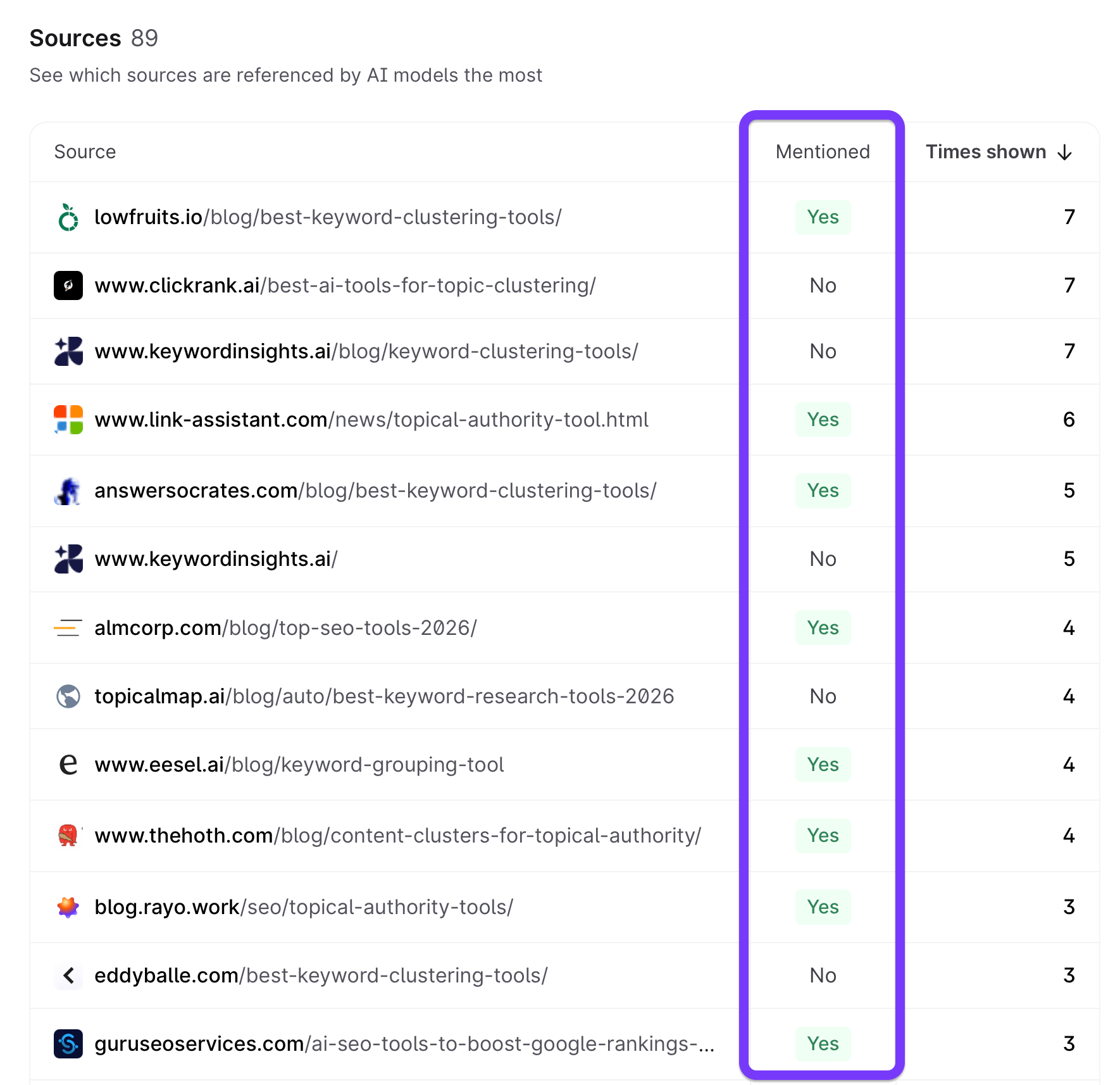

Probably my favorite feature of the report is the Sources section, because it shows which pages AI answers draw information from and whether your target brand is mentioned on them.

The AI Tracker updates data daily, so you can keep a close eye on your visibility.

As I said, we don't have first-party data from LLMs, so you'll probably go through an experimentation phase before you find the right prompts.

That's why I suggest not taking the initial results to heart. Give it some time and test a few variations of similar prompts before relying on the results.

3. Turn findings into content improvements

While it's useful to get a general idea of your brand's performance in AI search, the true value of prompt tracking lies in the specific takeaways you'll pick up and the related content changes. When you look at a tracking report, you should know exactly what your next steps should be.

Let me give you some examples:

The exact action you'll take depends on the issues you encounter. But here's the thing: the tracking results won't depend as much on your process or the tools you use as they will on the prompts you track. If you miss the mark there, you won't get an accurate picture of your brand's current visibility, so all your future efforts might go in the wrong direction.

Here's what this looks like in practice.

Sorting Surfer's Prompt report by lowest visibility, I found a prompt with a visibility score of 77. Not bad, to be fair, but how can we do better, considering that other tools score higher?

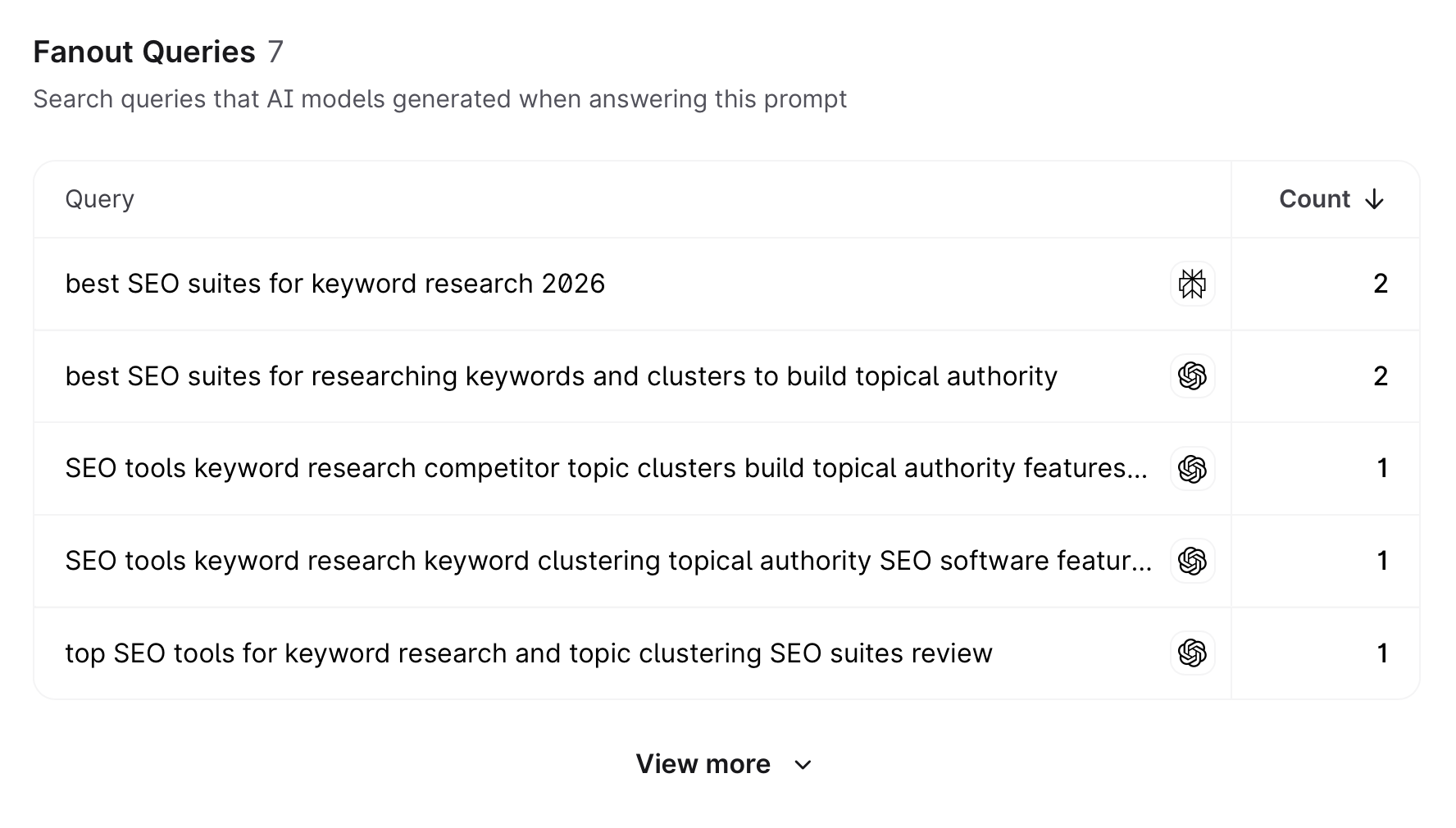

For one, the fan-out query report points me to related searches whose results I can research. Do the resulting web pages mention Surfer?

I can already tell that we're probably not as frequently mentioned as Ahrefs and Semrush because they've been known as keyword clustering tools for longer.

But the Sources report is where the magic happens. You can see that Surfer isn't mentioned in 2 out of the top 3 sources.

I could then reach out to those sites about being included in their articles. Of course, this is a long-term strategy and won't show results overnight.
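The same gap analysis can be expressed in a few lines if you export the cited sources and fetch their page text yourself. This sketch assumes a hypothetical `Source` record holding the URL, how many times AI answers cited it, and the page text; fetching and parsing pages isn't shown. It returns the most-cited sources that don't mention your brand, which are your outreach candidates.

```python
from dataclasses import dataclass


@dataclass
class Source:
    url: str
    times_cited: int  # how often AI answers cited this page in your tracking data
    page_text: str    # page content fetched separately (not shown here)


def outreach_candidates(sources: list[Source], brand: str, top_n: int = 3) -> list[str]:
    """Among the top_n most-cited sources, return those that never mention the brand."""
    top = sorted(sources, key=lambda s: s.times_cited, reverse=True)[:top_n]
    return [s.url for s in top if brand.lower() not in s.page_text.lower()]


# Hypothetical data: URLs, counts, and page text are made up.
sources = [
    Source("https://a.example/clustering-tools", 12, "We tested TaskFlow, PlanHub..."),
    Source("https://b.example/best-tools", 9, "PlanHub and BoardCo lead the list."),
    Source("https://c.example/roundup", 7, "Our BoardCo review."),
    Source("https://d.example/old-post", 2, "Nothing relevant."),
]
print(outreach_candidates(sources, "TaskFlow"))
```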

This brings me to one aspect of tracking that might cause some confusion.

Why do answers vary between LLMs when the prompt is identical?

Answers vary between LLMs because they're probabilistic, generative engines instead of databases that pull fixed information from a defined set of sources.

As you track prompts, you'll inevitably run into differences in:

- Response structure and content (including brand mentions)

- Cited sources

- Recommendations and overall sentiment toward different brands and products

Differences in AI model design play a major role here. One model may be more influenced by technical documentation and structured sources, while another may lean more on general web content, reviews, or conversational patterns. Some may rely heavily on knowledge learned during pre-training, while others pull in live web results when answering.

There's not much you can do about this, so think of it as a natural part of AI search behavior. But there's another factor you can watch out for: personalization.

AI systems can use:

- Previous messages in the same conversation

- Stored user preferences

- Memory features that retain context across chats

If a model "knows" you’re in a certain industry, region, or role based on your interactions, it may tailor responses accordingly. For example, if you keep talking to ChatGPT about your brand, it might mention it more frequently in the responses and give you a distorted idea of your objective AI presence.

That's why, for manual prompt testing, I would avoid running prompts through AI tools you're signed into or use frequently. Instead, either use them while logged out or use a third-party tracker for more objective results.

Implement prompt tracking to optimize for AI search results

Prompt tracking is relatively new to all of us compared to traditional search monitoring, but we're starting to find some patterns that help us optimize content for AI search. I'll keep you posted as we learn more about how different models think, but until then, the steps you saw here should be enough to give you a solid idea of where you stand and what to improve.

Remember that it all comes down to careful prompt selection, so give it time and experiment. Pair the right prompts with the right tracking processes and tools, and you can develop an informed AIO/GEO strategy without wasteful shots in the dark.