As AI-generated answers take up more space in search, one question matters for SEOs and content teams alike: What separates the pages AI chooses to cite from the ones it ignores?

To explore this, we analyzed 57,000+ URLs across 1,591 keywords, comparing the information inside those pages with the sources referenced in Google’s AI Overviews.

The goal was to look for consistent patterns in the content that AI Overviews cite most often.

One idea we wanted to test was simple: Do the pages cited in AI Overviews tend to include more of the key facts needed to answer the topic?

This study breaks down how we approached that question and what we found along the way.

Methodology

To uncover what makes some pages more likely to be cited by AI, our Data Science team analyzed 1,591 keywords and 57,253 URLs, comparing what AI-generated summaries include with what human-written pages publish.

Here’s exactly how those numbers were created:

- For every keyword, we pulled the top 30 organic Google results, which produced around 45,000 URLs across all queries.

- Then we collected every URL that appeared inside that keyword’s AI Overview, which added about 12,000 additional URLs.

- After removing duplicates, the final dataset contained 57,253 unique pages.

This is the full set of URLs we used for the analysis: not just top-ranking results, but every page AI Overviews relied on.
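Here is a minimal Python sketch of how a dataset like this could be assembled. It assumes each keyword's organic results and AI Overview citations are already available as lists of URL strings. The function name `build_url_set` and the input format are hypothetical, and deduplication here is exact string matching only; a real pipeline would likely normalize URLs (trailing slashes, tracking parameters) first.

```python
def build_url_set(
    organic: dict[str, list[str]],
    aio_cited: dict[str, list[str]],
    top_n: int = 30,
) -> set[str]:
    """Union of each keyword's top-N organic URLs and its AI Overview citations.

    Hypothetical helper: URLs are deduplicated by exact string match only.
    """
    urls: set[str] = set()
    for results in organic.values():
        urls.update(results[:top_n])  # keep only the top N organic results
    for cited in aio_cited.values():
        urls.update(cited)  # cited pages are kept even if they don't rank in the top N
    return urls


# Illustrative inputs, not data from the study
organic = {"vitamin d benefits": ["example.com/a", "example.org/b", "example.net/c"]}
aio_cited = {"vitamin d benefits": ["example.org/b", "example.com/d"]}
print(len(build_url_set(organic, aio_cited)))  # 4 unique pages
```

Because a set keeps only one copy of each URL, a page that both ranks and is cited (like `example.org/b` above) is counted once.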

In our comparison of cited vs. non-cited pages, we focused on facts: the concrete, verifiable statements that make up a page’s actual substance.

For example, in an article about vitamin D benefits, one fact might be “Vitamin D supports immune system function,” while another might be “Vitamin D is produced naturally when skin is exposed to sunlight.”

“We focused on facts because AI Overviews don’t copy sentences. They reconstruct answers by pulling factual statements from multiple sources.”

- Wojciech Korczynski, Data Scientist

To compare pages consistently, we calculated Fact Coverage—the percentage of a topic’s key facts that a page includes. If a topic contains 50 key facts and a page includes 15 of them, its Fact Coverage is 0.30 (30%).
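Fact Coverage is a simple ratio. Here is a minimal Python sketch, assuming facts have already been extracted and normalized so that the same fact on two pages compares equal as a string. The study doesn't describe its extraction or matching step, so `fact_coverage` and its inputs are illustrative.

```python
def fact_coverage(page_facts: set[str], topic_key_facts: set[str]) -> float:
    """Share of a topic's key facts that a page includes (0.0 to 1.0)."""
    if not topic_key_facts:
        raise ValueError("the topic has no key facts")
    # Statements on the page that aren't key facts for the topic don't count
    covered = page_facts & topic_key_facts
    return len(covered) / len(topic_key_facts)


# Worked example from above: 15 of 50 key facts
key_facts = {f"fact_{i}" for i in range(50)}
page = {f"fact_{i}" for i in range(15)} | {"an off-topic statement"}
print(fact_coverage(page, key_facts))  # 0.3
```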

Across all pages, we analyzed 1.85 million fact appearances, giving us enough volume and consistency to identify clear, repeatable patterns in how AI chooses which pages to cite.

AI Overviews consistently cite content that covers more facts

Pages cited in AI Overviews consistently cover more facts than those that aren’t.

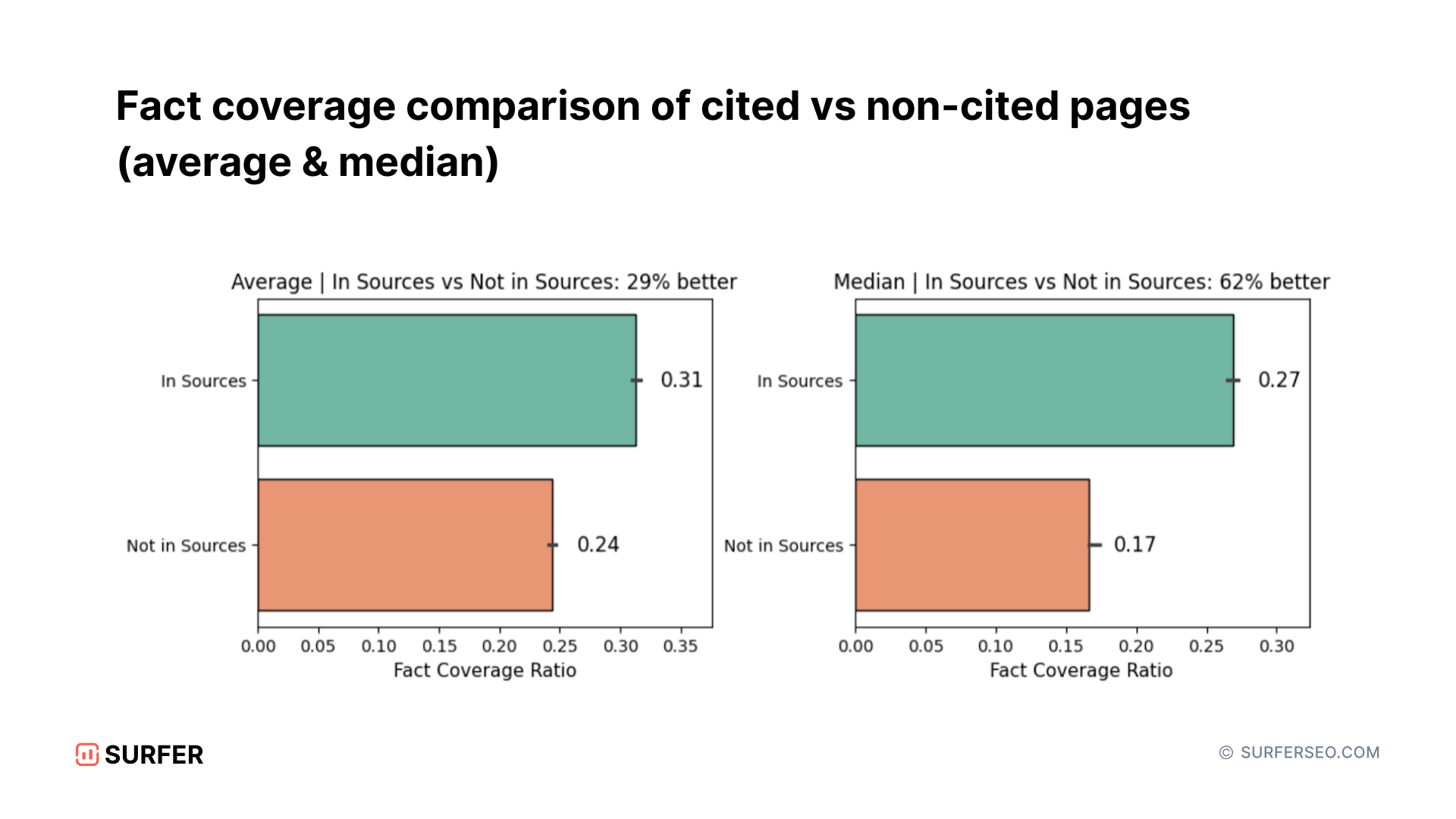

On average:

- Cited pages: 31% Fact Coverage

- Not cited: 24% Fact Coverage

That’s a 29% relative increase in topical completeness (7 percentage points) for the pages AI chose to reference.
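The 29% figure is a relative difference, not a gap in percentage points. A quick Python check using the two averages above:

```python
def relative_increase(value: float, baseline: float) -> float:
    """Relative change of value over baseline, as a fraction of the baseline."""
    return (value - baseline) / baseline


cited, not_cited = 0.31, 0.24  # average Fact Coverage from the study

print(f"{(cited - not_cited) * 100:.0f} percentage points")            # 7 percentage points
print(f"{relative_increase(cited, not_cited):.0%} relative increase")  # 29% relative increase
```

Cited pages cover 7 percentage points more of a topic's key facts, and 7 ÷ 24 ≈ 0.29 is where the 29% comes from.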

Looking at the median makes the contrast even sharper: The typical article cited in an AI Overview covers 62% more facts than the typical non-cited one.

So it’s not just a few exceptional sites skewing the average. Because the median isn’t pulled up by outliers, the gap shows that the typical cited page is genuinely more complete.
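Why the median matters: a handful of very complete pages can pull an average up, while the median only reflects the page in the middle. A toy Python illustration with made-up scores (not data from the study):

```python
from statistics import mean, median

# Made-up Fact Coverage scores for five pages; one outlier covers 90% of key facts
scores = [0.10, 0.12, 0.15, 0.18, 0.90]

print(round(mean(scores), 2))  # 0.29, pulled up by the single outlier
print(median(scores))          # 0.15, the middle page is unaffected
```

Removing the outlier would drop the mean sharply but barely move the median, so a gap that holds at the median describes typical pages rather than a few standouts.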

The most-cited pages cover nearly 2× as many facts

Some pages don’t just get cited once; they’re cited every time an AI Overview is generated for a keyword. We call these core sources.

In this part of the analysis, based on a smaller sample of 110 keywords, we compared three types of pages:

- Pages cited in every AI Overview for a keyword (core sources)

- Pages cited occasionally (non-core sources)

- Pages never cited (non-sources)

The difference was striking.

- Core sources covered 42% of the key facts for their topic

- Non-core sources covered 34%

- Non-sources covered 23%

The most frequently reused sources showed nearly twice the Fact Coverage of the pages that were never cited.

These comprehensive pages tend to go beyond surface-level points, connect related ideas, and give readers the full context.

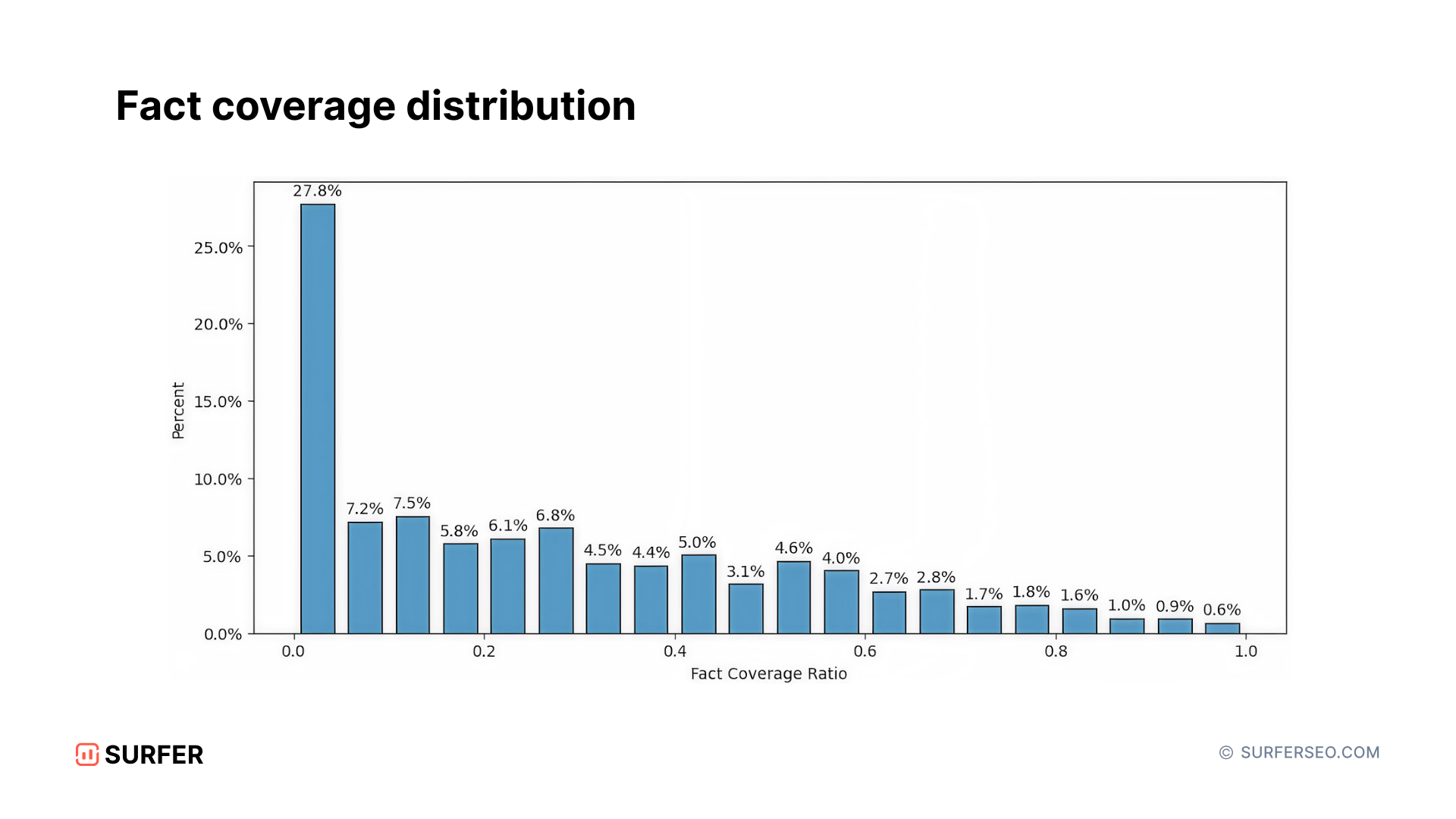
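The three groups above can be expressed as a simple labeling rule. This Python sketch assumes each keyword's AI Overview was observed several times and that we know how many of those observations cited a given page. The function name and the `aio_runs` parameter are hypothetical, since the study doesn't say how many AI Overviews were collected per keyword.

```python
def classify_source(times_cited: int, aio_runs: int) -> str:
    """Label a page by how often repeated AI Overviews for one keyword cited it.

    Hypothetical helper: aio_runs is the number of AI Overviews observed for the keyword.
    """
    if aio_runs < 1:
        raise ValueError("need at least one AI Overview")
    if not 0 <= times_cited <= aio_runs:
        raise ValueError("times_cited must be between 0 and aio_runs")
    if times_cited == aio_runs:
        return "core"        # cited in every AI Overview for the keyword
    if times_cited > 0:
        return "non-core"    # cited occasionally
    return "non-source"      # never cited


print(classify_source(5, 5), classify_source(2, 5), classify_source(0, 5))
# core non-core non-source

# "Nearly twice": core sources vs. never-cited pages
print(round(0.42 / 0.23, 2))  # 1.83
```

At 42% versus 23%, core sources cover roughly 1.8× as many key facts as pages that were never cited.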

Most content still misses the majority of key facts

Despite these patterns, high completeness is still rare.

When we looked at all 57,000+ URLs, 28% of pages covered almost none of the key facts identified in their topic’s AI Overview.

Only a small fraction reached high coverage levels, and those were the same pages most likely to be cited.

The distribution tells a simple story:

Most content scratches the surface. Only a few pages explain topics thoroughly enough to earn trust from AI systems.

Even among top-ranking results, most articles leave significant factual gaps: missing context, entities, or subtopics that AI Overviews treat as essential for a complete answer.

That gap is what creates opportunity.
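To produce a distribution summary like this for your own set of pages, you can bucket Fact Coverage scores into bands. The cut-offs in this Python sketch (5% for "almost none", 50% for "high") are assumptions for illustration; the study doesn't state the thresholds it used.

```python
from collections import Counter

# Hypothetical cut-offs; the study does not publish its thresholds
NEAR_ZERO = 0.05
HIGH = 0.50


def coverage_band(score: float) -> str:
    """Assign a Fact Coverage score (0.0 to 1.0) to a coarse band."""
    if score < NEAR_ZERO:
        return "almost none"
    if score >= HIGH:
        return "high"
    return "partial"


def band_shares(scores: list[float]) -> dict[str, float]:
    """Fraction of pages falling into each band."""
    if not scores:
        return {}
    counts = Counter(coverage_band(s) for s in scores)
    return {band: n / len(scores) for band, n in counts.items()}


# Made-up scores for six pages
print(band_shares([0.0, 0.02, 0.20, 0.30, 0.35, 0.60]))
```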

How to increase your chances of being cited in AI Overviews

Most pages still leave major informational gaps, and closing them is your opportunity to stand out.

Here’s how to act on what the data shows:

1. Include the essential facts your page is missing

AI Overviews consistently cite the pages that cover more of a topic’s key facts.

Not longer content. Not fluff. Not surface-level explanations. Facts.

To increase your chances of being cited:

👉 Add the key facts your competitors miss

👉 Support claims with specific, verifiable information

👉 Cover the essential points needed to fully answer the topic
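One way to find the facts competitors cover and you don't is a set difference, ranked by how many competing pages include each fact. This Python sketch is a hypothetical illustration, not how Surfer's Facts feature works internally. It assumes facts are already extracted as normalized strings, and `missing_facts` and `min_share` are names introduced here.

```python
from collections import Counter


def missing_facts(
    my_facts: set[str],
    competitor_facts: list[set[str]],
    min_share: float = 0.5,
) -> list[tuple[str, int]]:
    """Facts that at least min_share of competing pages include but my page lacks.

    Returns (fact, number of competitor pages) pairs, most common first.
    """
    if not competitor_facts:
        return []
    # Each page's facts are a set, so a fact counts at most once per page
    counts = Counter(fact for facts in competitor_facts for fact in facts)
    threshold = min_share * len(competitor_facts)
    gaps = [(fact, n) for fact, n in counts.items()
            if n >= threshold and fact not in my_facts]
    return sorted(gaps, key=lambda item: (-item[1], item[0]))


mine = {"vitamin d supports immune system function"}
competitors = [
    {"vitamin d supports immune system function", "skin produces vitamin d in sunlight"},
    {"skin produces vitamin d in sunlight", "vitamin d helps the body absorb calcium"},
    {"skin produces vitamin d in sunlight"},
]
print(missing_facts(mine, competitors))
# [('skin produces vitamin d in sunlight', 3)]
```

Raising `min_share` keeps only the facts most competitors agree on; lowering it also surfaces rarer details that could set your page apart.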

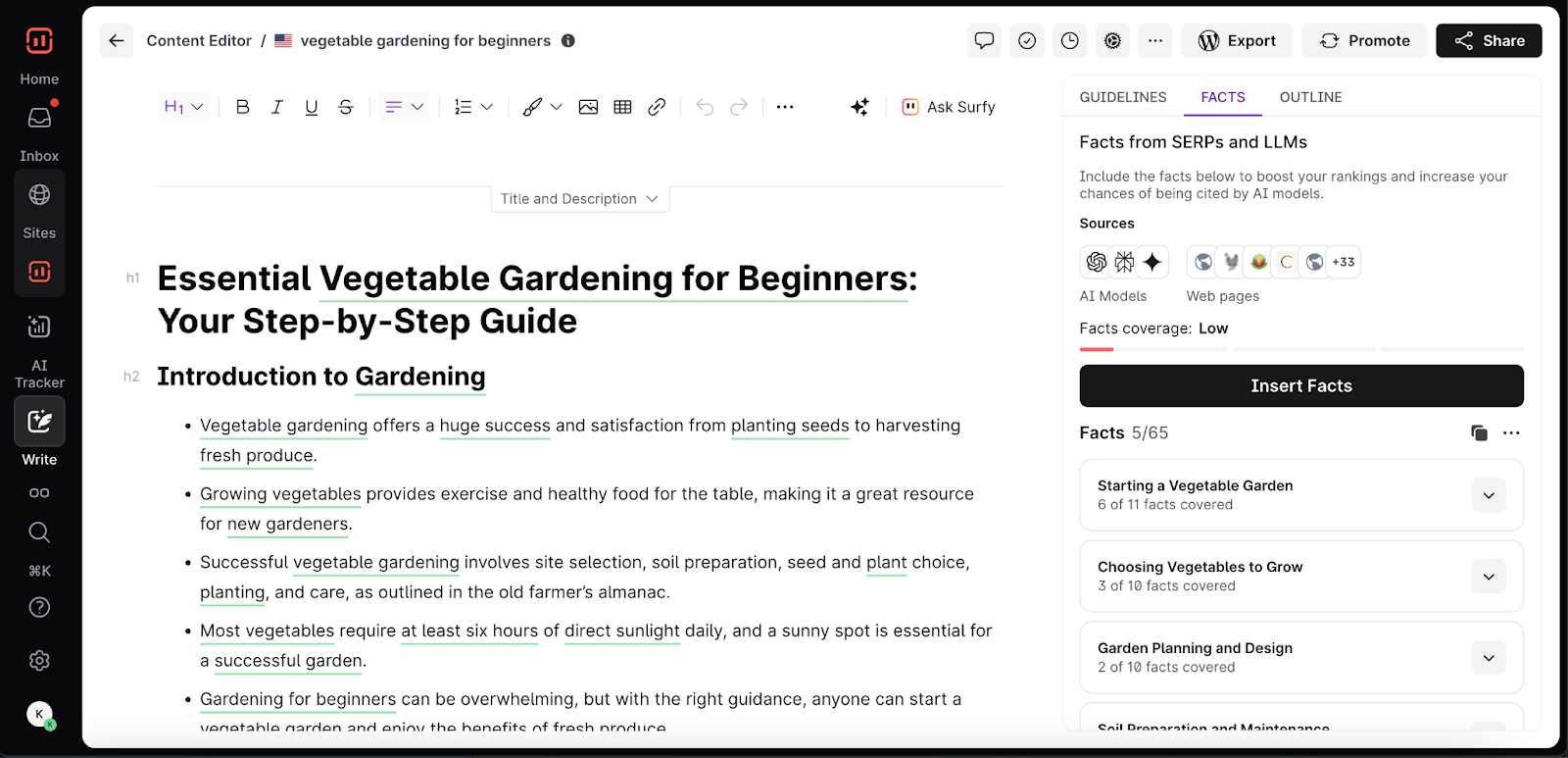

With Surfer’s Facts feature (inside Content Editor), you can make this process data-backed.

It highlights which facts, key points, and entities top-ranking and cited pages include that your content doesn’t, helping you update existing pages or create new ones with full topical coverage.

So instead of “writing more,” you write what matters: the factual building blocks that make your page complete in the eyes of both readers and AI systems.

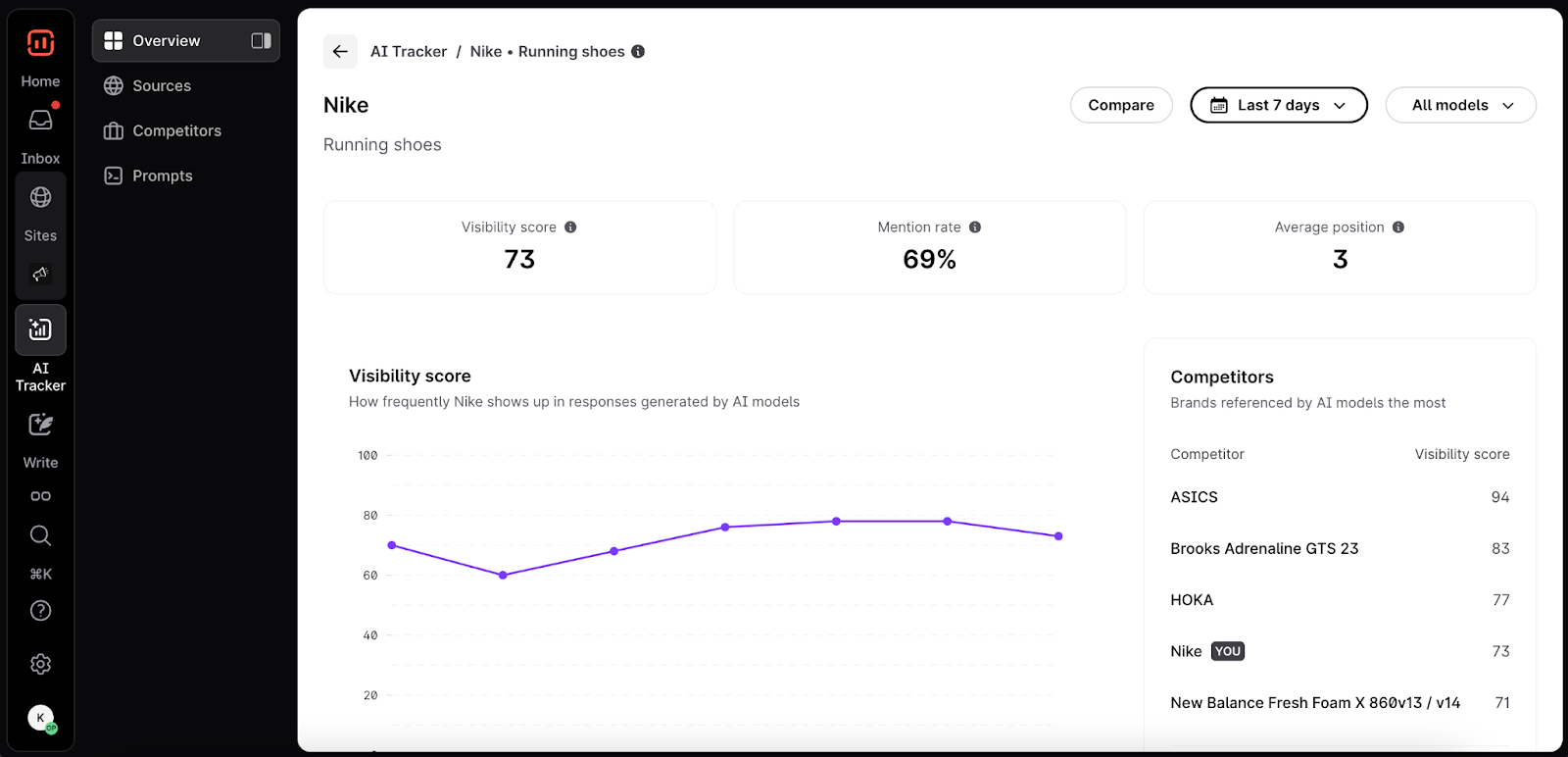

2. Track and maintain your AI visibility

Completeness isn’t static.

New facts emerge, competitors update their content, and AI models adjust which sources they reuse.

To stay visible, you need to know:

- where your pages appear in AI Overviews

- which of your competitors are being cited more

- which URLs AI is pulling information from

- where your content stops being referenced

Surfer’s AI Tracker shows how often your pages or brand appear in Google AI Overviews, ChatGPT, and Perplexity, and which sources each system relies on.

If your content isn’t showing up, that’s a clear signal to update, expand, or strengthen your coverage of the topic.
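If you also keep your own record of AI citations, a few lines of code can flag which other URLs get cited most and where your pages stop being referenced. This Python sketch is hypothetical: it assumes you store, for one keyword, chronological snapshots of which URLs an AI answer cited on each check. `Snapshot`, `dropped_citations`, and `citation_counts` are illustrative names, not part of any Surfer API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Snapshot:
    date: str             # when the AI answer was checked, e.g. "2025-01-01"
    cited_urls: set[str]  # URLs the AI answer cited for one keyword on that date


def dropped_citations(history: list[Snapshot], my_urls: set[str]) -> set[str]:
    """My URLs cited in the previous snapshot but missing from the latest one."""
    if len(history) < 2:
        return set()
    previous, latest = history[-2], history[-1]
    return (previous.cited_urls & my_urls) - latest.cited_urls


def citation_counts(history: list[Snapshot], exclude: set[str]) -> Counter:
    """How many snapshots cited each URL, ignoring the URLs in exclude."""
    return Counter(url for snap in history for url in snap.cited_urls if url not in exclude)


mine = {"example.com/vitamin-d"}
history = [
    Snapshot("2025-01-01", {"example.com/vitamin-d", "example.org/guide"}),
    Snapshot("2025-02-01", {"example.org/guide", "example.net/facts"}),
]
print(dropped_citations(history, mine))  # {'example.com/vitamin-d'}
print(citation_counts(history, exclude=mine).most_common())
# [('example.org/guide', 2), ('example.net/facts', 1)]
```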

Final thoughts

Across tens of thousands of pages, one pattern showed up again and again: the pages cited in AI Overviews tend to cover a larger share of a topic’s key facts.

Whether this happens because they are more complete, because they already rank higher, or because both signals overlap, the outcome is the same: the most fact-rich pages are the ones most likely to appear in AI-generated answers.

This is good news for content teams.

You cannot directly control where your page ranks or how AI models weigh different signals. You can, however, control how thoroughly your content covers the essential facts of a topic.

And with Surfer, completeness is no longer guesswork.

You can see which facts top-ranking and AI-cited pages include, identify the gaps on your own pages, and strengthen them with the context and details AI tends to reuse.

If you want to increase your chances of being cited, whether by humans, search engines, or AI systems, the path is clear: build pages that leave fewer factual gaps.
