Let’s get straight to the point. Here’s a brief summary of the whole situation:

- Hubspot’s blog (located on a subdomain: blog.hubspot.com) lost 81% of its organic traffic from Google y/y.

- They have been losing rankings in Google since the March 2024 Core Update and Spam Update, which were released at the same time.

- The drop sparked discussions in the community and marketers started to complain about Google. Some even announced the end of SEO.

- In my opinion, most skipped data analysis and jumped straight into unfair, logically unjustified conclusions that simply match their worldview and/or agenda.

- There are actually many issues with blog.hubspot.com that could have led to this gradual demotion. They relate to both on-site and off-site factors. I analyzed them below.

This is not an uncommon situation. If it wasn’t Hubspot, but a “no name” website, no one would be surprised.

In this article, you will also find other examples of December Spam/Core Update losers and winners.

The Hubspot traffic drop timeline

It didn’t happen overnight.

Hubspot's estimated organic traffic at the beginning of 2024 was around 10M visits per month. By September 2024 it had already decreased to circa 7.5M. Since the end of September, the numbers have been falling almost constantly.

But the biggest drop was yet to come during the December Core and Spam updates.

As a result, Hubspot's estimated traffic at the end of January 2025 is below 1.9M. This is according to Ahrefs data.

I also double-checked this data using another tool. According to Semrush, the traffic dropped from 14.8M in January 2024 down to approximately 2.8M in January 2025.

Regardless of the nominal values, the scale of the lost traffic is clearly visible in both tools and, surprisingly, comes out at the same number: 81% y/y!
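The arithmetic behind that 81% is easy to check. Here is a minimal sketch in Python that uses only the rounded estimates quoted above ("around 10M" and "below 1.9M" from Ahrefs, 14.8M and 2.8M from Semrush); the function name is just an illustrative helper.

```python
def yoy_drop(before: float, after: float) -> float:
    """Return the relative drop from `before` to `after` as a percentage."""
    return (before - after) / before * 100


# Estimated monthly organic visits in millions, as quoted in the text.
ahrefs = yoy_drop(10.0, 1.9)    # Ahrefs: early 2024 vs. end of January 2025
semrush = yoy_drop(14.8, 2.8)   # Semrush: January 2024 vs. January 2025

print(f"Ahrefs:  {ahrefs:.0f}%")
print(f"Semrush: {semrush:.0f}%")
```

Both lines round to 81%, even though the absolute numbers reported by the two tools differ by millions of visits.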

Sistrix’s Visibility index shows a similar story: 76% y/y drop.

The data comes from global stats or from the US market, which is Hubspot’s biggest market in terms of organic traffic acquisition.

Why am I bringing this up?

In this article I explain what actually happened with the sensational (for some) and widely discussed drop in the Hubspot blog’s organic traffic.

Why do I do this?

I’m surprised by the scale of misunderstandings, wrong conclusions and shallow opinions without any data to support them on LinkedIn and within the marketing community.

It's not just about proving who's right in order to feel better. It’s about defending a certain stance: the data-driven approach to interpreting events in marketing.

The discussion is leading some people to conclude that organic search traffic as a channel is dead or decaying, and that is simply harmful for the whole industry.

Disclaimer: It is not my intention to offend anyone. I am simply appealing to reason and providing answers backed by data.

Also, I am the last one to judge anyone within Hubspot’s team and to blame anyone for the drop. Who knows, maybe I’d do the same.

SEO is about growth hacking and driving business results by building organic exposure.

If you don’t try hard enough, you will not win. If you try really hard - you risk crossing the line.

This is the SEO game. So let’s see what could have happened, based on an analysis of the most important SEO factors.

By the way - thanks to the Surfer team, especially Michał, Tomasz and Satya, for giving me this space to say things loudly :)

Carl Hendy made a valid point here:

But Ivan Palii published an even better (in my opinion) response:

So… let’s learn something.

SEO perspective on Hubspot’s traffic loss

As I mentioned earlier, the estimated traffic loss of blog.hubspot.com in Google amounts to circa 81%.

That's a lot, isn’t it? Actually, it’s not that straightforward.

In fact, we don’t know how much traffic Hubspot has really lost. I’m sure that they’ve lost some serious exposure, but it’s definitely not because users moved to other channels as some indicate.

It’s because of the drop in rankings, especially during the March, November and December Spam and Core Updates.

Why did Hubspot lose the traffic?

Let’s take a look at these two charts combined.

One presents organic traffic from Google and the other one organic keywords in positions 1-3 and 4-10.

The simple and most obvious answer to the question of why they lost traffic is: because their rankings dropped.

This traffic hasn't evaporated. It just moved to other websites that filled the gaps.

As Tim Soulo suggested, you can easily verify who is actually ranking for those keywords now:

How big was the actual drop?

Over the last year they have dropped from 138K to 30K keywords in the top 3 results! That’s 78%!

Of course, their search volumes vary from 10 to tens of thousands, but still (quite surprisingly) we arrive at a similar scale again.

But, volume aside, does each lost keyword hurt the same? Of course not.

Like in this situation, where they totally lost their top1 position:

If we check the SERPs, it turns out that they still rank high with another subdomain, offers.hubspot.com.

Ok, what about other popular queries they’ve lost…? Like “quotes” with 223K search volume?

Here’s the bigger picture on the top words they totally lost:

I’m really not surprised that Canva benefited in this situation as many of these keywords are image or graphic related.

Did it really hurt Hubspot’s business?

I seriously doubt it. But it heavily impacted the chart that electrified the marketing and SaaS community.

Years ago, the blog subdomain was responsible for 75% of their traffic. Their total traffic was around 13M (or 18M if you prefer Semrush as an estimated data source).

Now it’s 5.3M, so Hubspot (considering all subdomains) lost not over 80% but “only” about 60% of its traffic. And it’s mostly traffic with informational intent. Obviously, it’s a blog.

Out of 1,987,920 keywords that their blog ranked for a year ago, 479,021 were classified as transactional or commercial.

Now there are 305,145 commercial or transactional keywords that they rank for. And still, many of those didn’t promise any conversions for Hubspot.
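To put these figures side by side, here is a short sketch of the arithmetic. It only reuses the estimates quoted above (13M and 5.3M total visits, and the three keyword counts); the helper name `pct` is illustrative.

```python
def pct(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`."""
    return part / whole * 100


# Estimated monthly visits across all subdomains, as quoted in the text.
total_before, total_after = 13_000_000, 5_300_000
total_drop = pct(total_before - total_after, total_before)

# Blog keywords classified as transactional or commercial.
all_keywords_before = 1_987_920
commercial_before, commercial_after = 479_021, 305_145
commercial_share_before = pct(commercial_before, all_keywords_before)
commercial_drop = pct(commercial_before - commercial_after, commercial_before)

print(f"Total traffic drop:          {total_drop:.1f}%")
print(f"Commercial share a year ago: {commercial_share_before:.1f}%")
print(f"Commercial keywords lost:    {commercial_drop:.1f}%")
```

With these inputs the total drop is roughly 59%, about 24% of the blog's keywords a year ago were commercial or transactional, and roughly 36% of those have been lost, which is far less than the headline 81%.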

Let’s also remember that many of those keywords may bounce back and Hubspot’s blog may recover.

I also think that the time perspective on the chart caused a big thrill:

Look at the same data, but from the perspective of just the last 6 months:

Another important lesson: charts help us understand what’s going on, but sometimes they can be misleading. The first chart looks far more dramatic, doesn't it?

Why did Google penalize Hubspot’s blog?

There are several possible reasons I want to suggest. Despite tons of well crafted content, link authority and topical authority in certain topics, Hubspot’s blog isn’t above the law.

In my opinion, they simply crossed the line in a few areas and triggered systems built into Google’s core algorithm and anti-spam systems.

Google’s definition of spam is quite broad and a big part of the system relies on automated triggers.

I’ll show you three main issues I detected on Hubspot’s blog and related systems that may have been triggered.

Theory no. 1: Outgoing links

This was my first thought, and I have discussed this issue many times recently. It was the center of my talk in Brighton on data-driven link building, and I presented it again to SEO folks in Chiang Mai during Linkhouse’s meetup.

Let’s just look at pure numbers (based on Ahrefs):

- 28,446 domains linked from hubspot.com.

- 28,306 domains linked with dofollow links.

- 125,034 groups of similar outgoing links.

- 124,999 groups of similar dofollow outgoing links (other *.hubspot.com subdomains excluded).

There’s no clear limit to how many outgoing links you can have.

But the more links you have, the bigger the risk that some anti-spam systems will be triggered.

Take a look at how this snowball grew:

And here’s the accumulated view that led to over 28K linked domains:

What makes an outgoing link profile look spammy? I’d say it depends on how it relates to the website’s authority and size.

In this case, authority is not a problem, because the blog has approximately 147K referring domains. But size?

Let's compare this with the number of subpages.

- 19,200 pages (based on site:blog.hubspot.com search operator in Google).

- 12,975 pages (based on the Ahrefs “top pages” report).

A ratio of 19,200 pages to 124,999 groups of dofollow outgoing links is quite alarming.

But still, it doesn’t have to imply that it’s against Google’s guidelines, right?
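As a rough illustration, the ratio can be computed against both page estimates. This is only a sketch based on the counts quoted above; the dictionary labels are my own naming.

```python
# Groups of similar dofollow outgoing links (other *.hubspot.com subdomains excluded).
outgoing_link_groups = 124_999

# Two different estimates of the number of blog pages, as quoted in the text.
page_estimates = {
    "Google site: operator": 19_200,
    "Ahrefs top pages": 12_975,
}

ratios = {
    source: outgoing_link_groups / pages
    for source, pages in page_estimates.items()
}

for source, ratio in ratios.items():
    print(f"{source}: {ratio:.1f} outgoing link groups per page")
```

Depending on which page count you trust, that is somewhere between roughly 6.5 and 9.6 groups of dofollow outgoing links per page.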

The problem lies in the nature of many of those links. I have been offered links on Hubspot’s blog for purchase a few times. To be clear, those offers did not come from anyone at Hubspot.

They came from middlemen, link builders, outreachers etc.

You can still find offers like that on platforms like Upwork.

Or on Legiit:

So, as you can see, this is not a made-up accusation.

I made a comment on that topic on LinkedIn that resulted in a nice exchange with Adrian Dąbrowiecki:

In short, he made a valid point that Hubspot didn’t sell links directly. It was probably just part of the popular practice of link exchanges.

My reply was simple: a paid link is a paid link. It doesn't matter how you pay for it: with money, a service or another link.

And it’s not about my opinion or moral stance. It’s stated very clearly in Google’s guidelines:

It’s time to wake up guys! Link exchanges aren’t white hat either.

Don’t get me wrong. I don’t want to condemn the “link buying/selling” thing. I’m opting for a pragmatic approach and believe that almost any link is paid for in one way or another.

Just remember, if you’re violating Google guidelines, just do that in a less schematic, obvious way.

This is one of the slides from my talk in Brighton, which indicates that the number of outgoing links on certain blog posts grew over time:

Here’s the whole topic explained.

It must have grown if, in the last two years, the number of linked domains grew by 10K and that growth isn’t correlated in any way with an increasing number of pages:

What to do to avoid this kind of penalty?

- Remove as many unnecessary links as possible.

- Verify relevance.

- Use different anchor texts (numbers for reference, here, source etc.) so it doesn’t look suspicious.

- Use nofollow or other ways of PR sculpting to avoid the accusation that you placed those links to manipulate rankings.

- Don’t put too many outgoing links per article. Watch link ratio and density.
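The checklist above can be partly automated. Below is a minimal sketch using only Python's standard library: it counts external links in a page's HTML, separates the ones qualified with `rel="nofollow"`, `"sponsored"` or `"ugc"`, and reports followed links per 100 words. The class name, the `audit` function, the sample HTML and the density metric are all illustrative assumptions, not a known threshold or an official Google measure.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# rel values that qualify a link so it is not treated as an editorial vote.
QUALIFIERS = {"nofollow", "sponsored", "ugc"}


class OutgoingLinkAudit(HTMLParser):
    """Collect external links and a rough word count from one HTML page."""

    def __init__(self, own_domain: str):
        super().__init__()
        self.own_domain = own_domain
        self.external = []  # (href, is_qualified) for every external link
        self.words = 0

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href") or ""
        host = urlparse(href).netloc.lower()
        # Skip relative links and links to the site's own (sub)domains.
        if not host or host == self.own_domain or host.endswith("." + self.own_domain):
            return
        rel = set((attributes.get("rel") or "").lower().split())
        self.external.append((href, bool(rel & QUALIFIERS)))

    def handle_data(self, data):
        # Rough count: every whitespace-separated token in text nodes.
        self.words += len(data.split())


def audit(html: str, own_domain: str) -> dict:
    parser = OutgoingLinkAudit(own_domain)
    parser.feed(html)
    followed = [href for href, qualified in parser.external if not qualified]
    return {
        "external_links": len(parser.external),
        "followed_links": len(followed),
        "words": parser.words,
        "followed_per_100_words": round(100 * len(followed) / max(parser.words, 1), 1),
    }


# Hypothetical snippet of an article body; example.com / example.org are placeholders.
sample = """
<p>Read our <a href="https://blog.hubspot.com/marketing/content-marketing">guide</a>,
this <a href="https://example.com/study">study</a> or that
<a href="https://example.org/tool" rel="nofollow sponsored">tool</a>.</p>
"""
report = audit(sample, "hubspot.com")
print(report)
```

Running something like this over every article and sorting by followed links would show which posts deserve a manual review first.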

I don’t know if Hubspot received any message from Google Search Console about violating spam policies in this field.

Even if they haven’t, if I were responsible for organic traffic at Hubspot, I’d investigate that issue more deeply and focus on cleaning it up.

Theory no. 2: Loss of focus and diluted topical authority

I really think they went too broad with the topics on their blog, which eventually led to a loss of topical authority.

It’s also not a thing that happened overnight. Read a comment by Bianca Anderson (“former HubSpot SEO who worked extensively on the blog” as she introduced herself on LinkedIn):

As Bianca mentions, ranking systems evolve. The Helpful Content System, for instance, is a site-wide system that is already built into the core algorithm.

The recent leak also confirmed that Google developed other site-wide metrics and indicators like siteAuthority and ChardScores.

We can’t be sure how they are exactly calculated, but it’s reasonable to assume that the more unrelated content you have, the bigger the risk it’s gonna affect your rankings negatively.

Maybe they just crossed the line with HCS. Maybe it’s because they didn’t update their content (freshness is more important than ever).

One thing is certain: articles on quotes, small business ideas, emojis, free images weren’t that much related to their core product.

Publishing content that is only loosely relevant to the business is not a spam tactic in itself. It just leads to a dilution of topical authority.

That’s why their rankings on many relevant keywords decreased and they’ve lost rankings for unrelated queries that didn’t match core intent, weren’t relevant for core target audience, and didn’t have direct connection with Hubspot’s field of expertise and offer.

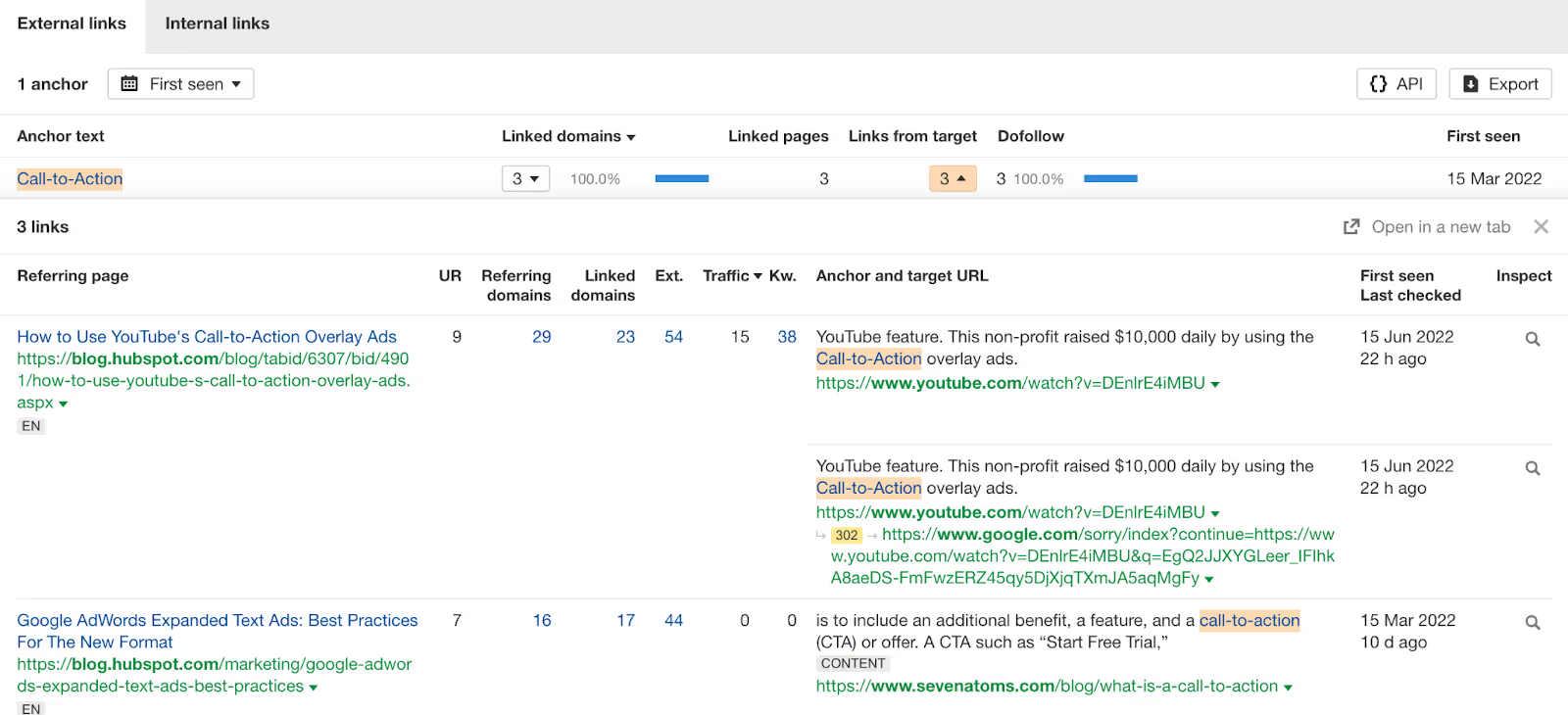

There are also other problems within the website's information architecture like cannibalization and lots of old posts that should be merged, refreshed or removed. It seems like Hubspot’s blog has 43 articles on “call-to-action”.

Many of them target the same intent:

It’s also worth taking a look at the pages with zero or close to zero traffic. There are quite a lot of them.

Topical authority is inevitably connected to a logical and clear website structure. That leads us to internal linking and anchor texts used in the process. We already discussed the problem with outgoing links, but the internal ones also need attention.

Cannibalization is inevitable within such a big structure, but still, it’s important to monitor rankings in terms of internal anchor texts. There’s a healthy rule for keyword targeting: one anchor text should point to just one URL.

There are 51 links within blog.hubspot.com with the anchor text “call-to-action”.

The problem is, they point to different target URLs like:

- https://blog.hubspot.com/marketing/what-is-call-to-action-faqs-ht

- https://blog.hubspot.com/marketing/call-to-action-optimization-ht

- https://blog.hubspot.com/marketing/call-to-action-examples

Simultaneously, Hubspot authors also link to external resources using this anchor text:

Algorithms may get confused by these contradictory directions.

The same situation applies to the “content marketing” anchor:

- https://blog.hubspot.com/marketing/content-marketing

- https://blog.hubspot.com/marketing/measure-content-marketing-roi

- https://blog.hubspot.com/marketing/marketing-examples-online-resources

Or “market research”:

- https://blog.hubspot.com/marketing/market-research-buyers-journey-guide

- https://blog.hubspot.com/marketing/market-research-definition

- http://blog.hubspot.com/agency/market-research-tools-resources

Or important keywords like “lead generation” which is like… in Hubspot’s DNA?!

- https://blog.hubspot.com/marketing/beginner-inbound-lead-generation-guide-ht

- https://blog.hubspot.com/marketing/lead-generation-ideas

- https://blog.hubspot.com/marketing/b2b-lead-generation

I kid you not:

Content pruning is badly needed here on several levels!
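Conflicts like these are easy to detect automatically once you have a list of internal links. Here is a minimal sketch: it assumes you already exported (anchor text, target URL) pairs from a crawler of your choice, and the function name is hypothetical. The sample rows reuse the URLs listed above.

```python
from collections import defaultdict


def conflicting_anchors(links):
    """Return anchors that point to more than one distinct target URL."""
    targets = defaultdict(set)
    for anchor, url in links:
        # Normalize case and whitespace so "Call-to-Action" == "call-to-action".
        targets[anchor.strip().lower()].add(url)
    return {anchor: sorted(urls) for anchor, urls in targets.items() if len(urls) > 1}


# Illustrative sample of (anchor text, target URL) pairs.
links = [
    ("call-to-action", "https://blog.hubspot.com/marketing/what-is-call-to-action-faqs-ht"),
    ("Call-to-Action", "https://blog.hubspot.com/marketing/call-to-action-optimization-ht"),
    ("call-to-action", "https://blog.hubspot.com/marketing/call-to-action-examples"),
    ("content marketing", "https://blog.hubspot.com/marketing/content-marketing"),
]

conflicts = conflicting_anchors(links)
for anchor, urls in conflicts.items():
    print(f"'{anchor}' points to {len(urls)} different URLs:")
    for url in urls:
        print(f"  {url}")
```

In this sample only “call-to-action” is flagged, because “content marketing” has a single target. Every flagged anchor is a candidate for picking one canonical target page.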

What solutions could help cure the situation?

- Calculate site embeddings.

- Cluster them by cosine similarity.

- Decide (based on traffic, impressions, target keywords) which should be merged, deleted, refreshed.

- Delete irrelevant content that doesn’t engage users and doesn’t support business goals.

- Create groups to collect similar pages into hubs (pillar pages + satellites) if possible.

- Establish a logical internal linking structure with strict anchor text selection (without confusing the algorithm by using the same anchor for different target URLs, whether within the domain or outside of it).
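The first two steps of that list can be sketched in a few lines. To keep the example self-contained, it uses plain word counts as a stand-in for real embeddings (in practice you would get vectors from a language model) and a simple greedy grouping with an arbitrary 0.5 threshold. The page titles are hypothetical; only the URL paths come from the examples in this article.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: word counts. A real setup would use a language model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def cluster(pages: dict, threshold: float = 0.5) -> list:
    """Greedy grouping: a page joins the first cluster whose seed page is similar enough."""
    clusters = []  # each item: (seed_vector, [urls])
    for url, text in pages.items():
        vec = embed(text)
        for seed, urls in clusters:
            if cosine(seed, vec) >= threshold:
                urls.append(url)
                break
        else:
            clusters.append((vec, [url]))
    return [urls for _, urls in clusters]


# Hypothetical titles for illustration.
pages = {
    "/marketing/call-to-action-examples": "call to action examples",
    "/marketing/what-is-call-to-action-faqs-ht": "what is a call to action",
    "/marketing/shrug-emoji": "shrug emoji copy and paste",
    "/marketing/call-to-action-optimization-ht": "call to action optimization tips",
}

groups = cluster(pages)
for group in groups:
    print(group)
```

Here the three call-to-action pages end up in one group (merge or refresh candidates), while the emoji page sits alone, far from the rest. The merge/delete decision itself still needs traffic and impression data.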

It’s all about semantics and calculating similarity between subpages.

You can easily do this using Surfer's Topical Map. After connecting your domain, you can see which topics are relevant to your niche and how to group them.

The closer a topic is to the center of your map, the more relevant it is.

By focusing on clusters that are relevant to you, you can build sustainable topical authority.

Theory no. 3: Content quality (sic!)

I believe that the 1st and 2nd theories are the most plausible explanations and, following Occam’s razor, we could end it here.

However, when you need to rearrange the structure of your website and possibly cut off some articles, paragraphs, and outgoing links, I think it’s worth doing something more.

Content optimization is a must here. Hubspot’s blog is a true behemoth and needs some taming.

I wonder if the irrelevant content will be just removed or rewritten. Maybe it will be clustered and moved to a separate section or even subdomain?

I just wonder if any of Hubspot’s content was produced with AI. Again - don’t get me wrong - I’m not a purist and I really enjoy using technology and AI tools in SEO. But let’s be honest, it’s easy to cross a line.

There’s a debate about whether Google detects AI or not, but according to the leaked documentation, Google calculates the “effort” used to create an article. Even if an article is potentially helpful, when it’s just a synthesis of generally available information, the effort score can be low.

And this might be the case with articles (especially listicles) on Hubspot’s blog. In most cases, content editors aren’t B2B sales advisors or marketing practitioners. They collect information, create a list and that’s it.

Also, their listicles with a large number of outgoing links could trigger a review system, even if those articles weren’t created directly for affiliate reasons.

This recalls the site reputation abuse policy update in 2024.

This penalty can be related to outgoing links (not properly marked) and to writing on irrelevant topics, and it can be triggered by the calculation of siteEmbeddings and SiteFocusScore. Sounds familiar?

There’s also a thing called “Scaled content abuse” and it’s related to scaling content with AI just to target popular keywords and drive traffic.

I can’t say for sure if the content was created by AI. If so, it may not have been properly humanized.

Detection tools are never 100% conclusive. I’ve encountered some false positives in the past, but I think that it could also be the case here... at least to some extent.

Look at Surfer’s AI detection:

Other tools, like Originality, show the same:

What’s the scale of automated content creation? Hard to say at the moment, but it’s worth further investigation.

To everyone saying they were so surprised by this situation: Hubspot’s strategy could trigger different systems like site reputation abuse and scaled content abuse, and it clearly violated link spam policy on a large scale.

Theory no. 4: A kind of a conspiracy theory

It was brought up in several discussions so I decided to add this here as well.

Alphabet Inc. (the company that actually owns Google) reportedly tried to acquire Hubspot in the 1st half of 2024.

They failed. After that Hubspot started losing traffic.

Don’t be surprised or suspicious

Some specialists, entrepreneurs and marketing gurus blamed Google for scaring off their users. Others were simply surprised and called it the end of a certain era in SEO.

While I believe anyone can be surprised by anything, I’m kind of triggered when people explain the situation by saying it’s due to Google’s alleged unpredictability, ads, and shallow answers that can’t stand AI’s competition.

Sure, Google is far from ideal, but that's not the case here.

Also, there are voices like Brendan Hufford’s, who pointed out that interest in those keywords may simply have declined.

“Those graphs don't tell us that google decreased their rankings and that is what led to the traffic drop. We see it in correlation, but they might've lost a ton of low volume KWs and the real issue is that search volume dropped 80% for most high traffic terms. Lots of variables in there outside of rankings.”

We can’t cherry-pick arguments. If someone assumes that the traffic loss may be due to people no longer searching on Google, then they should also assume, with the same seriousness, that organic traffic and ranking improvements could also be just an unrelated correlation and that MAYBE 10x more people came to Google.

We can actually check that.

I examined the top 1000 pages within Hubspot's blog that lost traffic in the US in the last 6 months, exported their main phrases and checked the trend. Those that had a negative growth rate (actually lost search volume) were responsible for a total of 1.7M searches and there were 301 of them.

Those with a positive growth rate were responsible for 2.4M and there were 571 of them.
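If you want to repeat this check on your own export, the tally itself is simple. The sketch below assumes each row holds a keyword, its monthly searches and a growth rate taken from your SEO tool; the sample rows are made up for illustration and are not Hubspot data.

```python
def split_by_trend(rows):
    """Count keywords and sum searches for growing, declining and flat trends.

    rows: iterable of (keyword, monthly_searches, growth_rate) tuples.
    Returns {trend: (keyword_count, total_searches)}.
    """
    summary = {"growing": [0, 0], "declining": [0, 0], "flat": [0, 0]}
    for _keyword, searches, growth in rows:
        if growth > 0:
            trend = "growing"
        elif growth < 0:
            trend = "declining"
        else:
            trend = "flat"
        summary[trend][0] += 1
        summary[trend][1] += searches
    return {trend: tuple(values) for trend, values in summary.items()}


# Hypothetical rows, only to show the expected input shape.
rows = [
    ("keyword a", 1000, 0.12),
    ("keyword b", 500, -0.30),
    ("keyword c", 200, 0.0),
    ("keyword d", 300, 0.05),
]

result = split_by_trend(rows)
for trend, (count, searches) in result.items():
    print(f"{trend}: {count} keywords, {searches} searches")
```

Keeping a separate "flat" bucket matters: keywords with no trend data or no change shouldn't be silently counted on either side of the argument.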

Of course, this topic is much broader and needs deeper analysis, but the first observations contradict the idea that users are moving away from Google.

It’s not the end of the era. It’s not the end of SEO. It’s not a maturation of the channel that drives users away from Google organic results.

If someone misinterpreted recent rumours about Google going below 90% of the global market share in search and thinks that Google is losing users and traffic… here’s the explanation why this logic is wrong.

Actually there are even examples that show ranking drops (2-3 positions) and simultaneous increase of traffic due to bigger interest in certain topics!

Look at the position change and traffic change for these keywords:

I agree that we should be sceptical! It’s healthy. But negation without verification isn’t rational scepticism and it often leads to misunderstandings and conspiracy theories.

Hubspot is not the only one

I would also like to recall the statement by Larry Kim from WordStream, who would probably love to see the death of SEO since he’s a PPC guy.

He stated that SEO has been dead since 2024 :)

Surfer team members decided to comment on that, like Jakub Sadowski:

or Tom Niezgoda:

Larry’s alleged disappointment with SEO also captured my attention so I decided to check how his website’s doing in Google recently. It all became clear…

Wordstream also lost a significant amount of traffic during the recent December 2024 Spam Update.

Fewer people visit Wordstream’s website from Google organic search results because it dropped in rankings and nobody is looking at page 2, 3, 4…

Take a look at some of Wordstream’s URLs that dropped in rankings, which resulted in traffic loss:

- wordstream.com/blog/ws/2022/11/28/christmas-holiday-greetings-wishes

- wordstream.com/blog/ws/2020/04/09/instagram-bios

- wordstream.com/blog/ws/2021/11/08/business-name-ideas

- wordstream.com/blog/ws/2021/12/06/small-business-ideas

Does it look similar?

I just wonder why they keep using dates in the URL path.

This URL also captured my attention:

https://www.wordstream.com/blog/ws/2015/05/21/how-much-does-adwords-cost

The content team could at least change the URL and set up a 301 redirect, or simply remove dates from the URLs and refresh the content. Google AdWords in 2025, years after the product was renamed Google Ads? Seriously, you blame Google for classifying this as irrelevant?

Outgoing links are also worth auditing. Wordstream links to 3,411 domains (3,409 dofollow) and there are 12,294 groups of similar outgoing links. Meanwhile, site:wordstream.com shows 3,240 indexed pages in Google.

Ahrefs shows 2,582 organic pages. I know that those aren't precise numbers, but you can see the scale and where it's going, right? :)
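Preparing such a migration is mostly a matter of building a clean redirect map. Here is a minimal sketch, assuming the new structure simply drops the /YYYY/MM/DD/ segment (that target structure is my assumption, not Wordstream's actual plan). The resulting map would then be fed into whatever server or CMS handles the 301 redirects.

```python
import re

# Matches a /YYYY/MM/DD/ segment like the one in the URLs listed above.
DATE_SEGMENT = re.compile(r"/\d{4}/\d{2}/\d{2}/")


def undated(path: str) -> str:
    """Drop the first date segment from a URL path."""
    return DATE_SEGMENT.sub("/", path, count=1)


def redirect_map(paths):
    """Map old dated paths to undated ones, refusing slug collisions."""
    redirects = {}
    taken = set()
    for old in paths:
        new = undated(old)
        if new in taken:
            # Two posts from different dates share a slug: needs a manual decision.
            raise ValueError(f"two posts would share {new}")
        taken.add(new)
        if new != old:
            redirects[old] = new
    return redirects


old_paths = [
    "/blog/ws/2015/05/21/how-much-does-adwords-cost",
    "/blog/ws/2021/12/06/small-business-ideas",
]

redirects = redirect_map(old_paths)
for old, new in redirects.items():
    print(f"301: {old} -> {new}")
```

The collision check is there because posts published on different dates can share a slug, and two old URLs silently redirecting to one new page is exactly the kind of cannibalization you're trying to clean up.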

And doesn’t it look similar to Hubspot’s situation?

The authority that websites like Wordstream or Hubspot have is undeniably of great value, and to some extent they are allowed to make mistakes that small sites would regret.

But at some point, their actions are also triggering ranking systems like any others. Why would they grow constantly by publishing irrelevant articles?

In conclusion

I really believe Hubspot will be totally fine! Firstly, their rankings didn’t disappear totally. They lost tons of traffic, but the great majority of it wasn’t converting and probably didn’t even support any major business goals.

Secondly, I think they will be fine because instead of complaining about the death of SEO, they got to work… and do SEO!

I examined the most obviously irrelevant URLs like:

https://blog.hubspot.com/marketing/shrug-emoji

https://blog.hubspot.com/sales/famous-quotes

And you know what?

They redirect to https://blog.hubspot.com/marketing/hubspot-blog-content-audit :) Smart move.

It’s also worth noting that when they lost traffic, they invested in other channels and types of campaigns, like influencer marketing.

During the whole of 2024 their brand was searched globally in Google (according to Google Trends) more than ever before:

In terms of business, they are doing great.

So, let’s just summarize it:

Hubspot is alive, Google is alive and SEO is still alive. We can get back to our work.
