Your content ranks on Google, but ChatGPT and AI Mode aren't citing you.

That's because AI search doesn't work the way you think it does. While you're optimizing for one keyword, something else is happening behind the scenes: query fan-out. And if your content doesn't account for it, your chances of getting cited and mentioned in AI answers fall off a cliff.

But the good news is this: once you understand what it is and how it works, it’s pretty easy to optimize for.

What is query fan-out?

Query fan-out is when an AI chatbot breaks down a query into multiple sub-queries and searches the web for them. It then scrapes the top pages for each sub-query and merges the information into a single generated response.

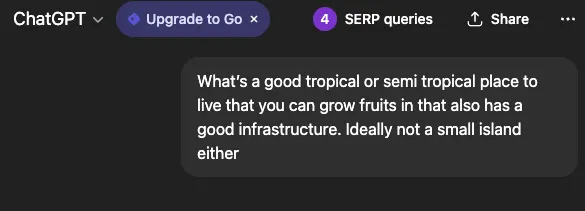
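To make the flow concrete, here is a minimal Python sketch of the idea. Everything in it is an assumption for illustration: `toy_decompose`, `toy_search`, and the `TOY_INDEX` data are hypothetical stand-ins, not how ChatGPT or any real AI search system is implemented.

```python
from typing import Callable

# Hypothetical toy "index": sub-query -> ranked page URLs.
# A real system would call a live search engine here instead.
TOY_INDEX: dict[str, list[str]] = {
    "tropical countries with good infrastructure": ["a.example/infra", "b.example/list"],
    "cost of living in tropical countries": ["b.example/list", "c.example/costs"],
}


def toy_decompose(query: str) -> list[str]:
    """Stand-in for the model step that splits a prompt into sub-queries."""
    return list(TOY_INDEX)


def toy_search(sub_query: str) -> list[str]:
    """Stand-in for a web search that returns ranked URLs."""
    return TOY_INDEX.get(sub_query, [])


def fan_out(
    query: str,
    decompose: Callable[[str], list[str]],
    search: Callable[[str], list[str]],
    top_k: int = 3,
) -> dict[str, list[str]]:
    """Run one search per sub-query and keep the top_k pages for each."""
    return {sub: search(sub)[:top_k] for sub in decompose(query)}


def merge_sources(results: dict[str, list[str]]) -> list[str]:
    """Merge pages from all sub-queries into one de-duplicated list (first seen first)."""
    merged: list[str] = []
    for pages in results.values():
        for page in pages:
            if page not in merged:
                merged.append(page)
    return merged


results = fan_out("Which tropical country should I move to?", toy_decompose, toy_search)
sources = merge_sources(results)
```

In this toy data, `b.example/list` ranks for both sub-queries, so it has two chances to end up in the source pool instead of one.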

For example, here’s a search I recently did in ChatGPT:

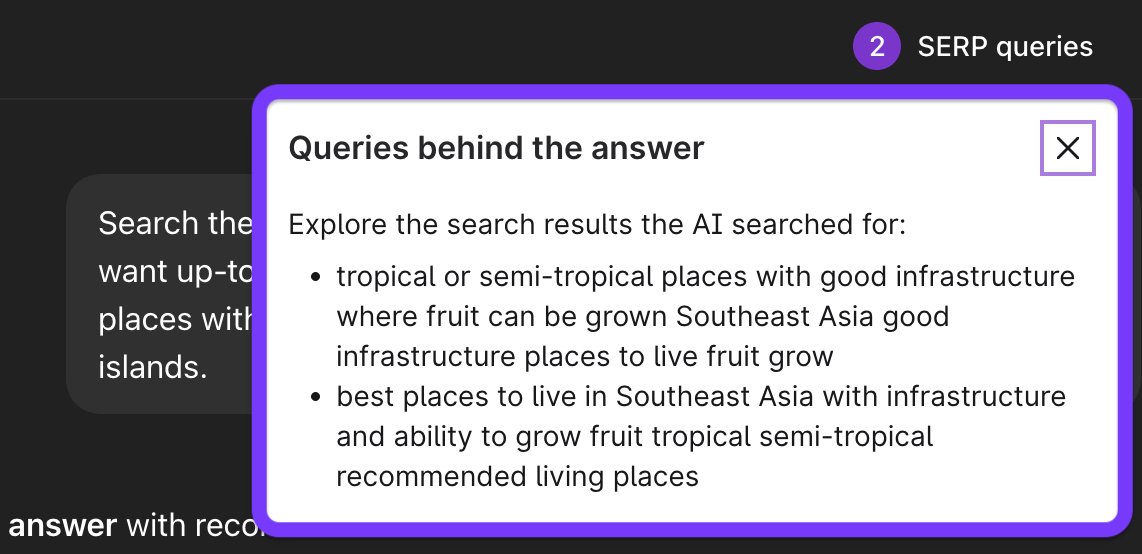

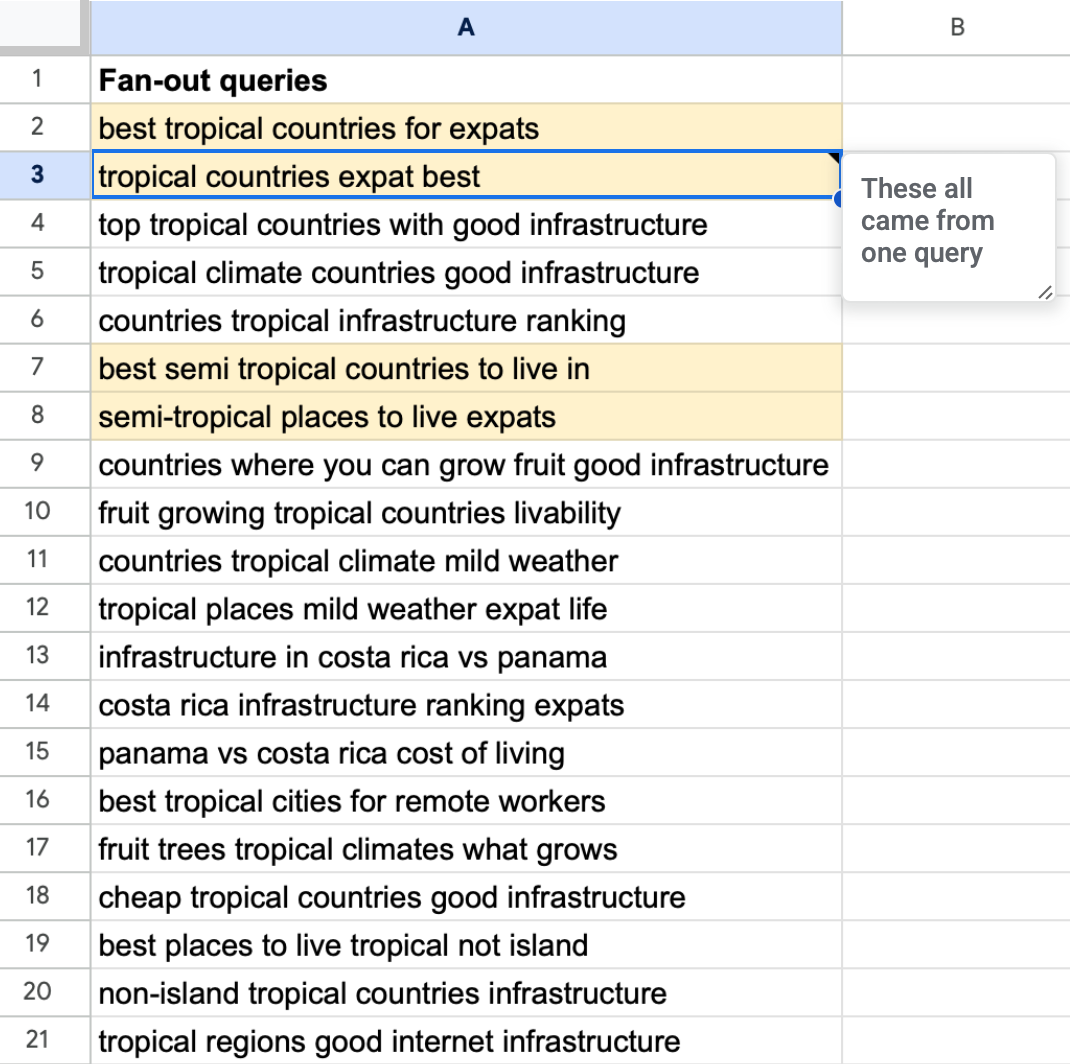

Using the free Keyword Surfer extension for Chrome, I can see which fan-out queries ChatGPT searched for:

You can see that they’re a mix of infrastructure checks, country comparisons, and cost-of-living angles. These are basically the things a human would end up searching anyway, just compressed into one step.

ChatGPT then merges all the information it gathered from all of these fan-out searches into a single generated answer (with cited sources).

This is something that Google's AI Mode also does, as announced at Google I/O 2025 by Head of Search Elizabeth Reid:

"AI Mode isn’t just giving you information; it’s bringing a whole new level of intelligence to search. What makes this possible is something we call our query fan-out technique. Now, under the hood, Search recognizes when a question needs advanced reasoning. It calls on our custom version of Gemini to break the question into different subtopics, and it issues a multitude of queries simultaneously on your behalf."

Why does AI search use query fan-out?

AI search uses query fan-out because people ask much more complex questions in AI chatbots like ChatGPT than they do in traditional search engines.

You’re not just typing “best tropical countries” anymore. Instead, you’re writing full sentences or paragraphs, asking about climate preferences, infrastructure needs, lifestyle constraints, and a side quest about fruit trees.

A single search can’t answer all of that.

So AI turns the original query into multiple sub-queries, evaluates each angle, and recombines the results.

This enables AI systems to generate far more specific responses—often good enough that users don’t feel the need to click away.

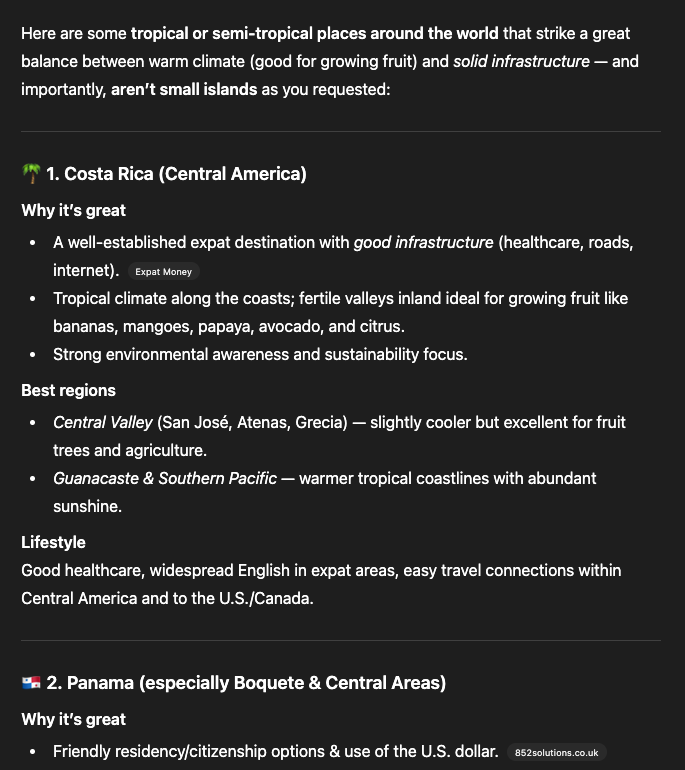

For example, here’s the response ChatGPT generated to the query above:

You can see that it’s super detailed and, honestly, covers everything I wanted to know. There’s no need for me to click away.

If I were using traditional search to research and answer this query myself, it would be a different story. I’d probably have to run quite a few Google searches, starting with something like "tropical countries with good infrastructure." Then, after checking out a few listicles that all mention, say, Costa Rica, I’d search for "what fruits can you grow in Costa Rica" or something similar.

ChatGPT uses query fan-outs to do all of this research in one go, then uses generative AI to turn it into a comprehensive answer.

Why do query fan-outs matter for AI SEO?

Query fan-out matters for AI SEO because AI pulls its sources from the fan-out searches, not just the main query. If you want AI to cite and mention you, you stand a better chance when you rank for the fan-outs as well as the main query.

How do we know?

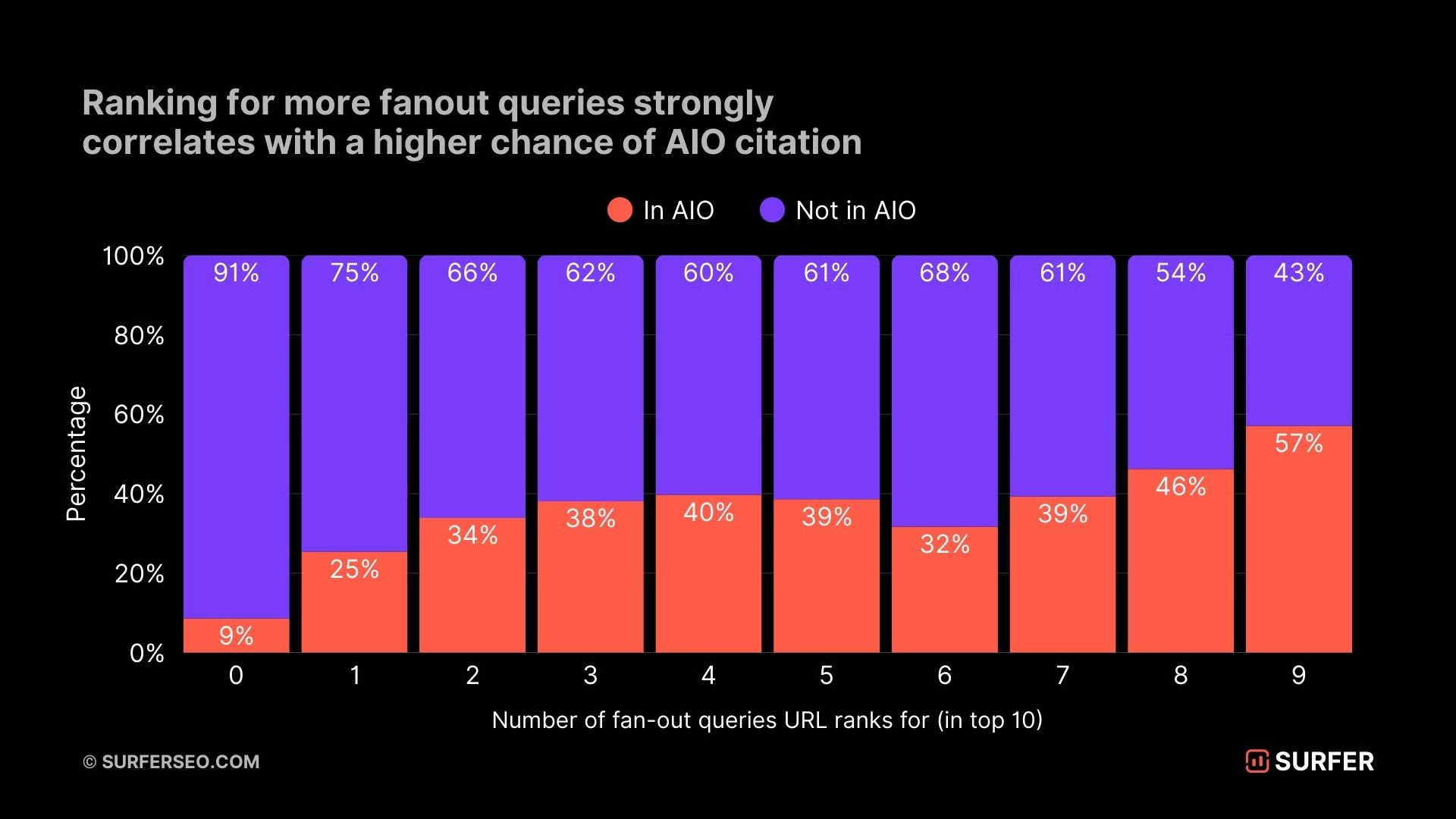

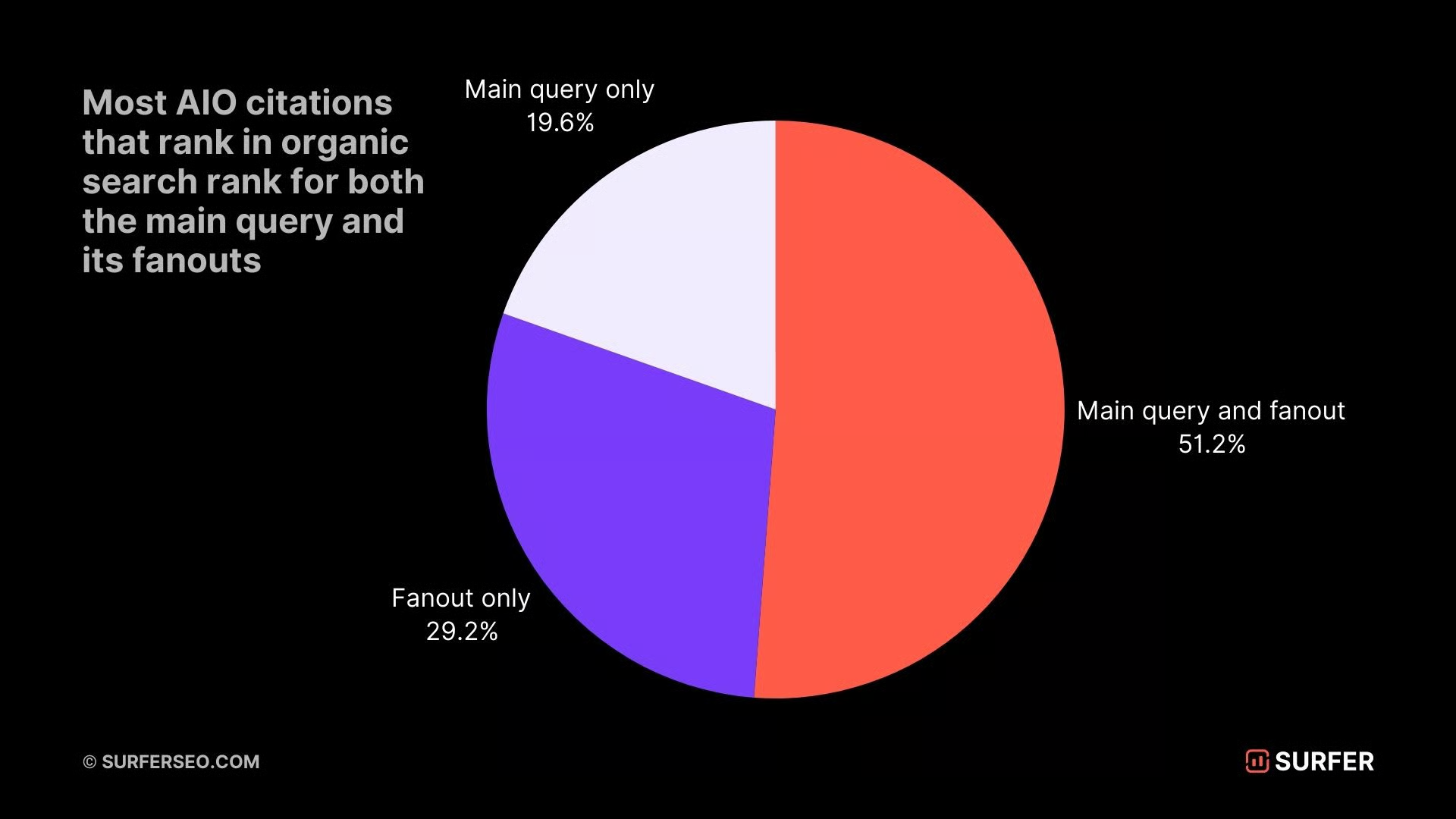

We analyzed 173,020 URLs and found a very predictable pattern: the more fan-out queries a URL ranked for, the more likely it was to show up in Google’s AI Overviews:

In fact, we found that you're 161% more likely to get cited in Google's AI Overviews if you also rank for fan-outs:
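If "161% more likely" is hard to picture, the arithmetic below may help. It is only a sketch: the 10% baseline citation rate is a made-up number for illustration, not a figure from our study, and the comparison group (pages that rank only for the main query) is an assumption.

```python
def ratio_from_lift(lift_pct: float) -> float:
    """Convert 'X% more likely' into a multiplier, e.g. 161% more -> about 2.61x."""
    return 1 + lift_pct / 100


def relative_lift_pct(rate_with: float, rate_without: float) -> float:
    """Relative lift in percent between two citation rates."""
    return (rate_with / rate_without - 1) * 100


multiplier = ratio_from_lift(161)

# Hypothetical baseline: 10% of pages that rank only for the main query get cited.
baseline_rate = 0.10
rate_with_fanouts = baseline_rate * multiplier  # roughly 26% under this assumption
```

So "161% more likely" means roughly 2.6 times the baseline rate, not 1.6 times.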

How to optimize for query fan-outs

Your first instinct might be, “Great, I’ll just pull all the fan-out queries for my keyword and rank for those, too.”

Please don’t. That way lies spreadsheets, and fan-outs are messy in practice.

Pulling them manually for dozens of topics is painful enough; doing it for hundreds will break your will to live. You’ll mostly end up staring at a chaotic list of near-duplicates and half-phrases that don’t map cleanly to real content.

And even if you could tidy that up, fan-outs have another problem: they’re inconsistent.

ChatGPT, Google’s AI Mode, and Perplexity all generate different sub-queries, and we found that only about 27% of them stay stable across repeated searches.

Trying to optimize for every possible angle is like trying to hit a moving target while the target argues with itself.
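If you want to check this instability for your own prompts, one way is to run the same prompt several times and compare the sub-queries. The sketch below uses one plausible definition of "stable" (a sub-query that shows up in every run). That definition and the sample data are assumptions for illustration, not necessarily how the 27% figure was calculated.

```python
def stable_share(runs: list[set[str]]) -> float:
    """Share of all distinct sub-queries that appear in every run."""
    if not runs:
        return 0.0
    all_queries = set().union(*runs)
    if not all_queries:
        return 0.0
    in_every_run = set.intersection(*runs)
    return len(in_every_run) / len(all_queries)


# Hypothetical fan-outs collected from three runs of the same prompt.
runs = [
    {"best crm software", "crm pricing comparison", "crm for small business"},
    {"best crm software", "crm for small business", "top crm tools reviews"},
    {"best crm software", "crm integrations list"},
]
share = stable_share(runs)
```

Here only one of the five distinct sub-queries appears in all three runs, so the stable share is 20%.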

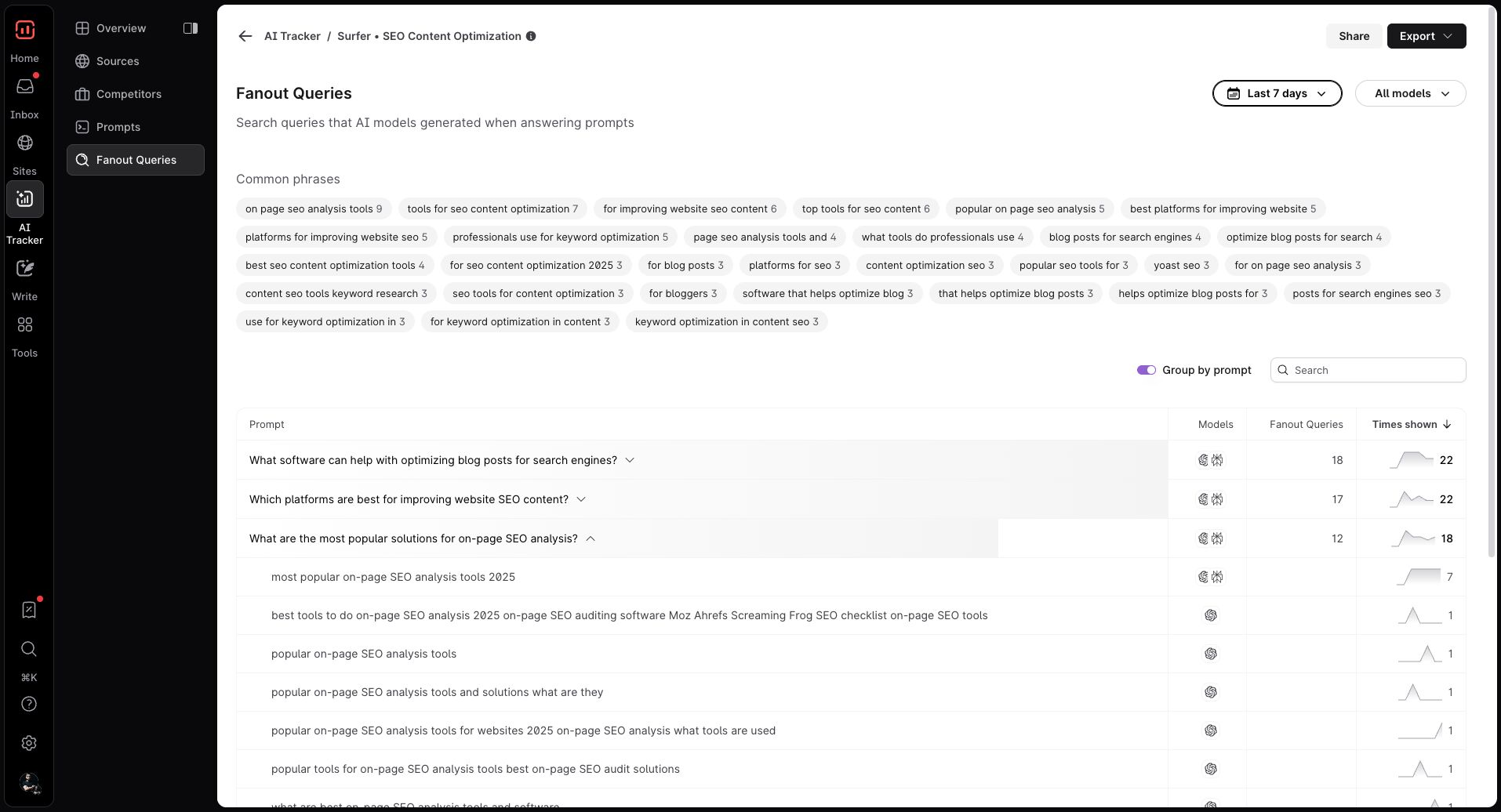

For critical keywords, sure—go ahead and pull the actual fan-outs using Surfer’s AI Tracker:

But when it comes to your broader content strategy, there’s a far better approach.

1. Build content clusters around important topics

Let’s say you want to show up in AI search for “best _______ software.”

Sure, you could pull every fan-out query across ChatGPT, AI Mode, and Perplexity, run them multiple times to account for instability, cluster the outputs, and try to reverse-engineer the “true” subtopics.

But unless you enjoy chasing moving targets, there’s an easier—and far more durable—approach: build topical authority.

When your site covers a topic deeply, you naturally answer the majority of the questions AI systems tend to fan out into. In other words, if your content spans the whole topic, AI can pull from you no matter which sub-query gets triggered.

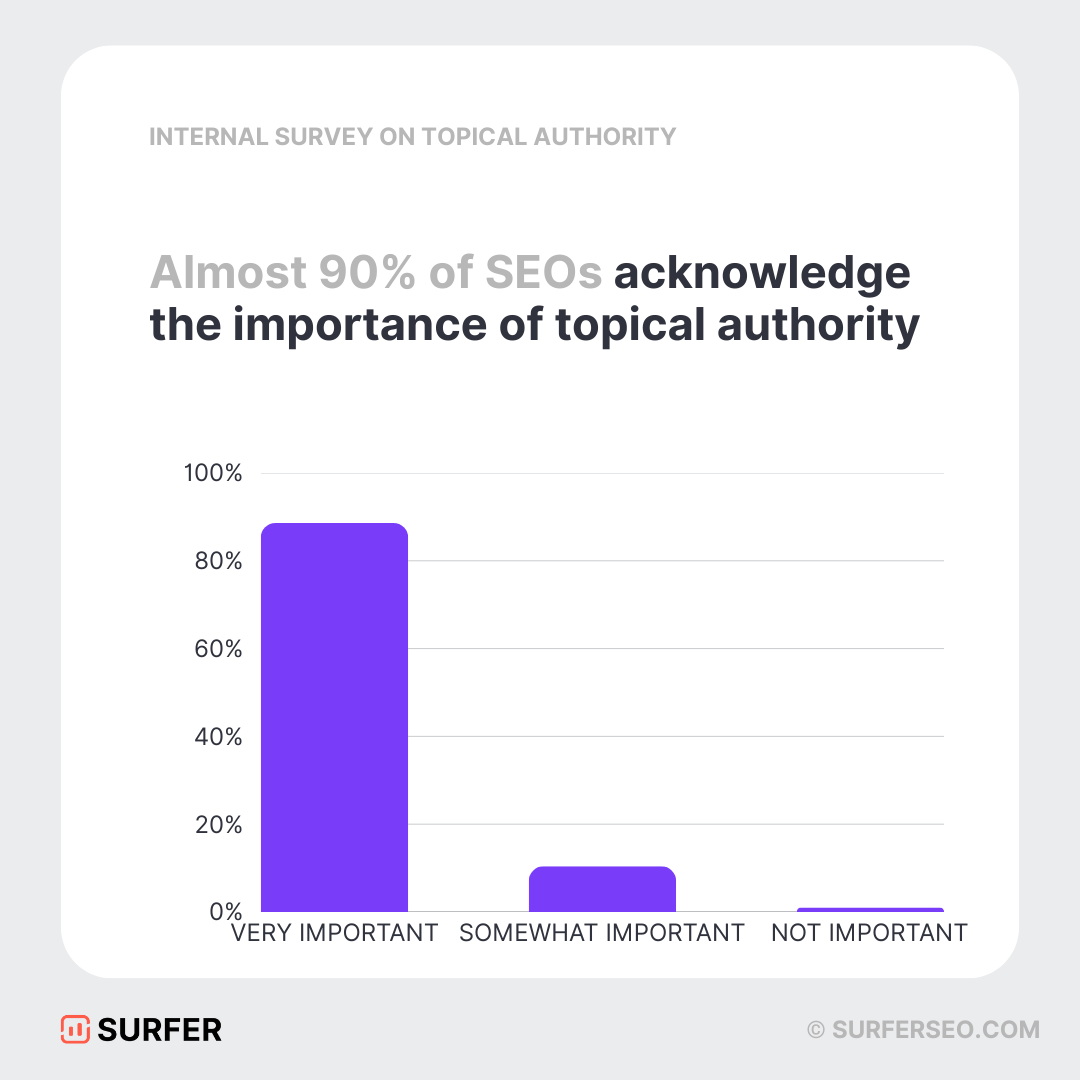

Plus, as a bonus, you'll also improve old-school SEO and get more traffic because you rank for more stuff. This is probably why 90% of SEOs agree that topical authority is important for SEO.

So how do you actually build this?

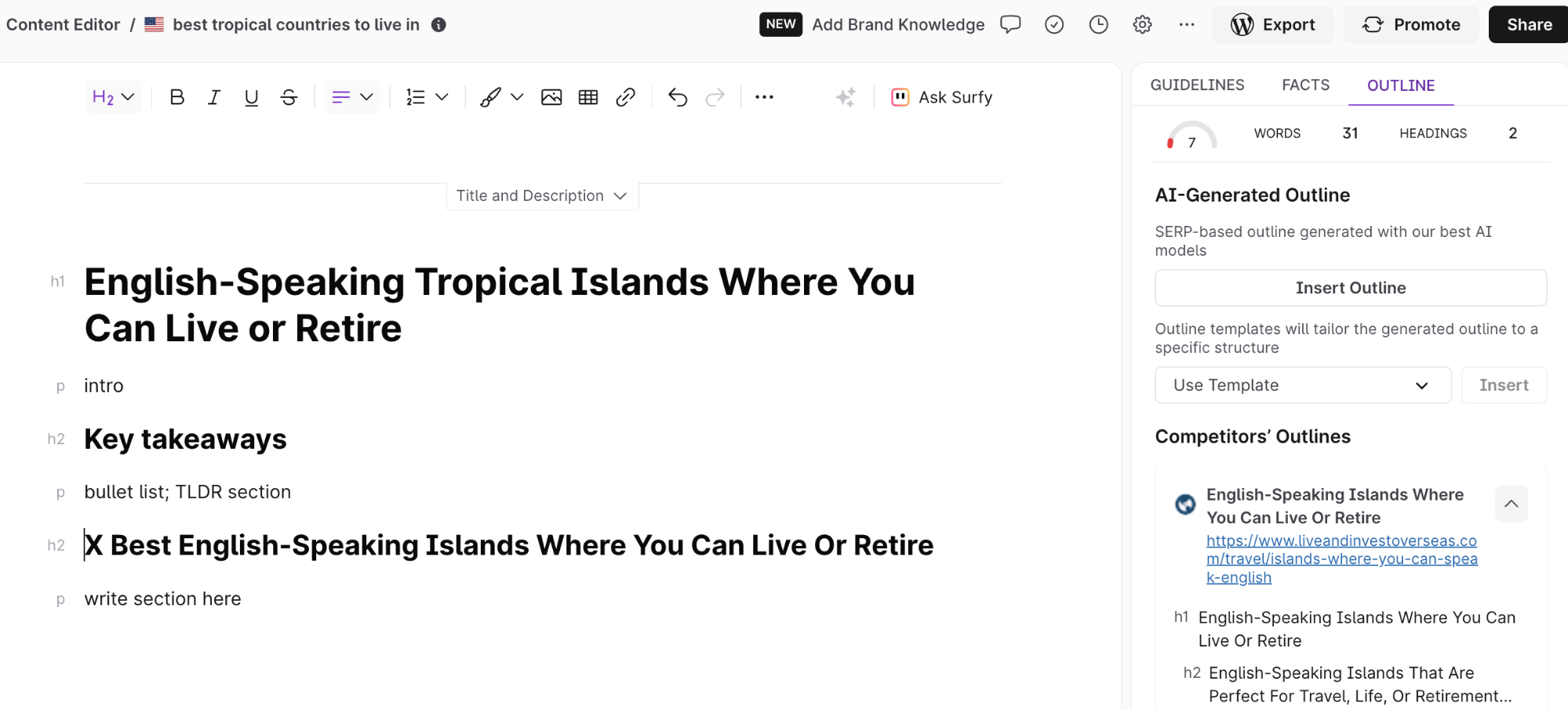

Start with Surfer’s Topical Map.

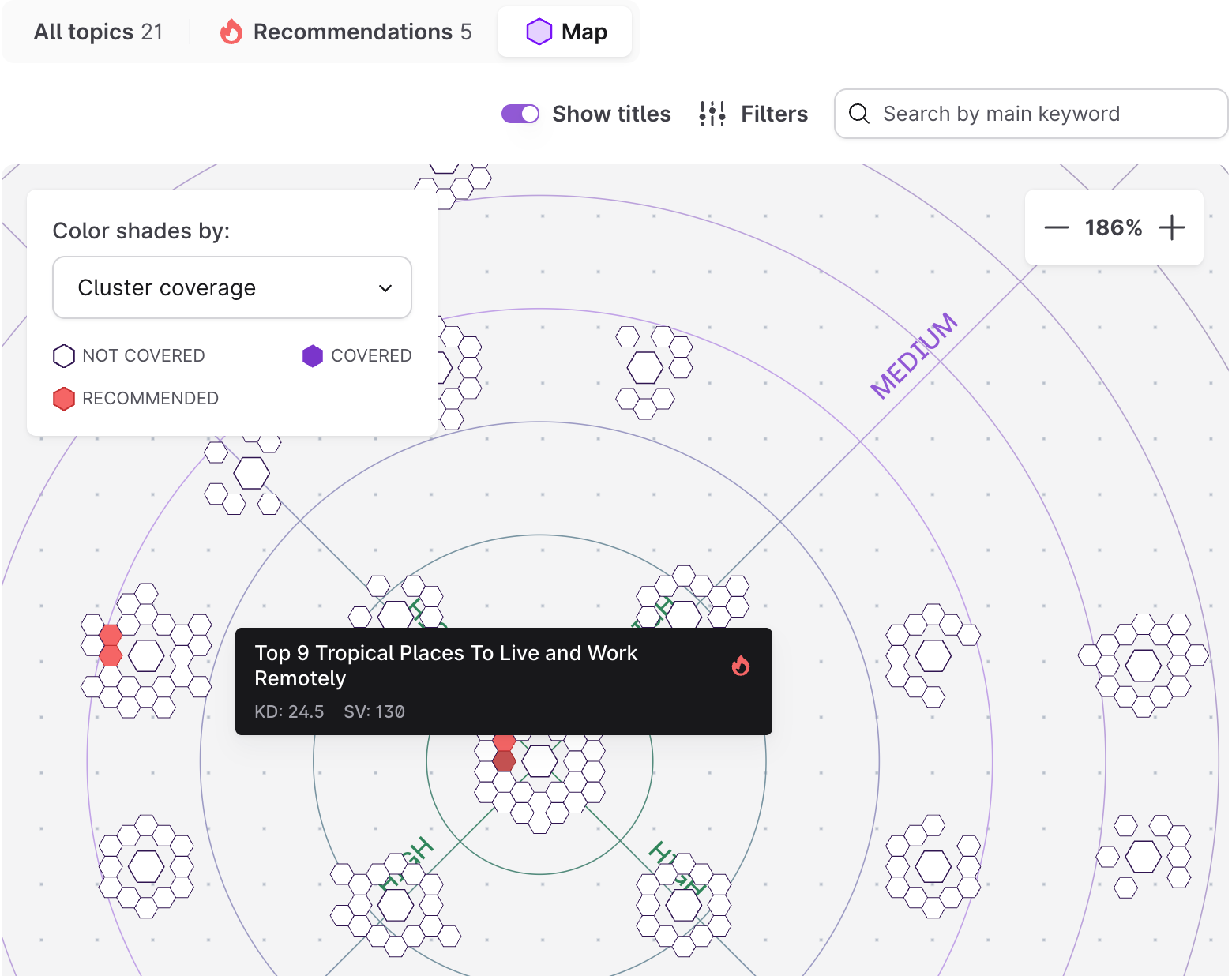

It takes a site or topic, finds all semantically related keywords, clusters them into themes, and shows you exactly where your content gaps are.

From there, you can work through the gaps in clusters you care most about—drafting new content or optimizing what you already have.

A smart shortcut: filter for low-difficulty subtopics in Topical Map and go after those first:

By stacking these wins, you can tiptoe your way into topical authority without fighting uphill from day one.
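The "gaps first, easy ones first" logic can be sketched in a few lines of Python. The `Subtopic` structure, the 0-100 difficulty scale, and the threshold of 30 are all hypothetical choices for this example. They are not Surfer's data format or API.

```python
from dataclasses import dataclass


@dataclass
class Subtopic:
    keyword: str
    cluster: str
    difficulty: int  # hypothetical 0-100 score, lower is easier
    covered: bool    # True if the site already has a page for it


def quick_wins(subtopics: list[Subtopic], max_difficulty: int = 30) -> list[Subtopic]:
    """Return uncovered subtopics at or below the difficulty cap, easiest first."""
    gaps = [s for s in subtopics if not s.covered and s.difficulty <= max_difficulty]
    return sorted(gaps, key=lambda s: s.difficulty)


# Hypothetical topical map for a CRM site.
topical_map = [
    Subtopic("what is a crm", "basics", 55, covered=True),
    Subtopic("crm for freelancers", "use cases", 18, covered=False),
    Subtopic("crm vs spreadsheet", "comparisons", 25, covered=False),
    Subtopic("best crm software", "comparisons", 80, covered=False),
]
wins = [s.keyword for s in quick_wins(topical_map)]
```

With this sample data, the two low-difficulty gaps come back first and the high-difficulty "best crm software" is left for later.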

2. Publish comprehensive, well-organized, fact-dense content

Comprehensive content is still the easiest way to rank for multiple search queries—and by extension, multiple fan-out queries.

But no single page can (or should) cover everything. The trick is knowing which subtopics belong on the page and which should live elsewhere.

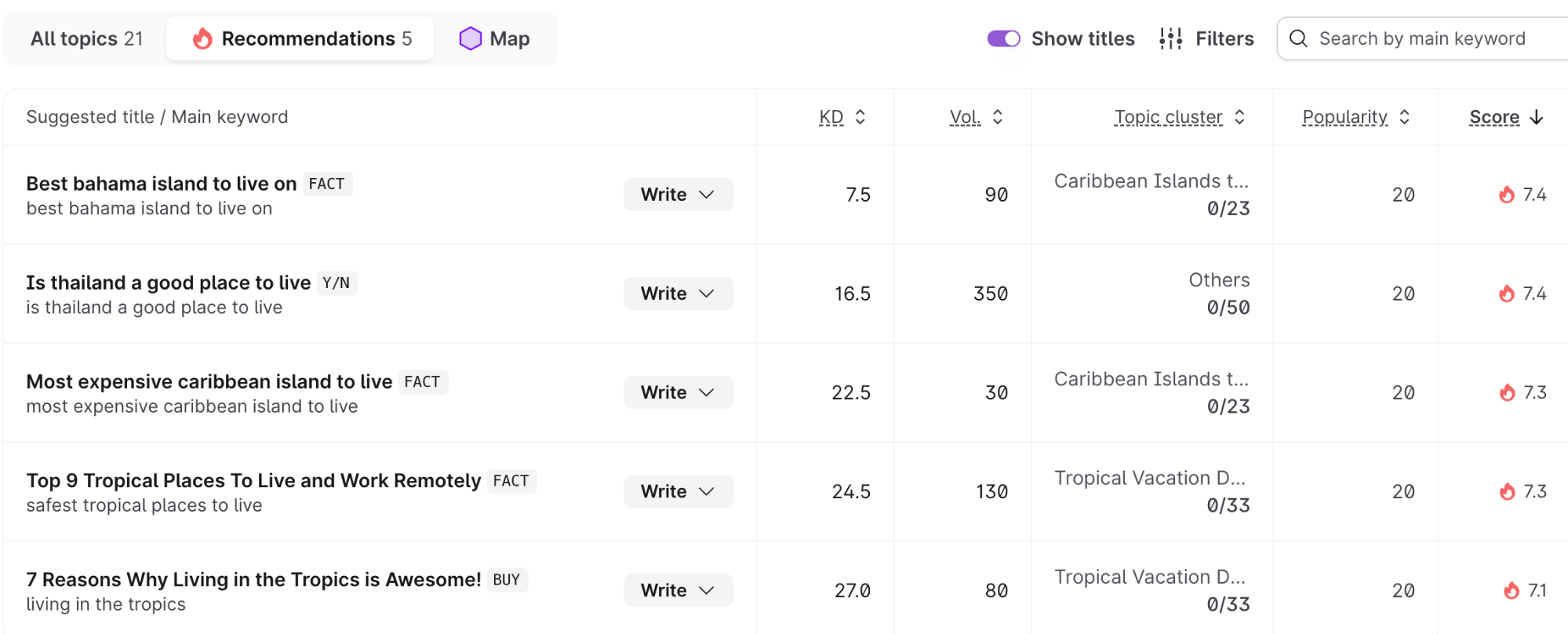

This is where looking at top-ranking pages still helps.

You don’t need to reinvent the wheel; just cover the same core subtopics the leaders already agree on.

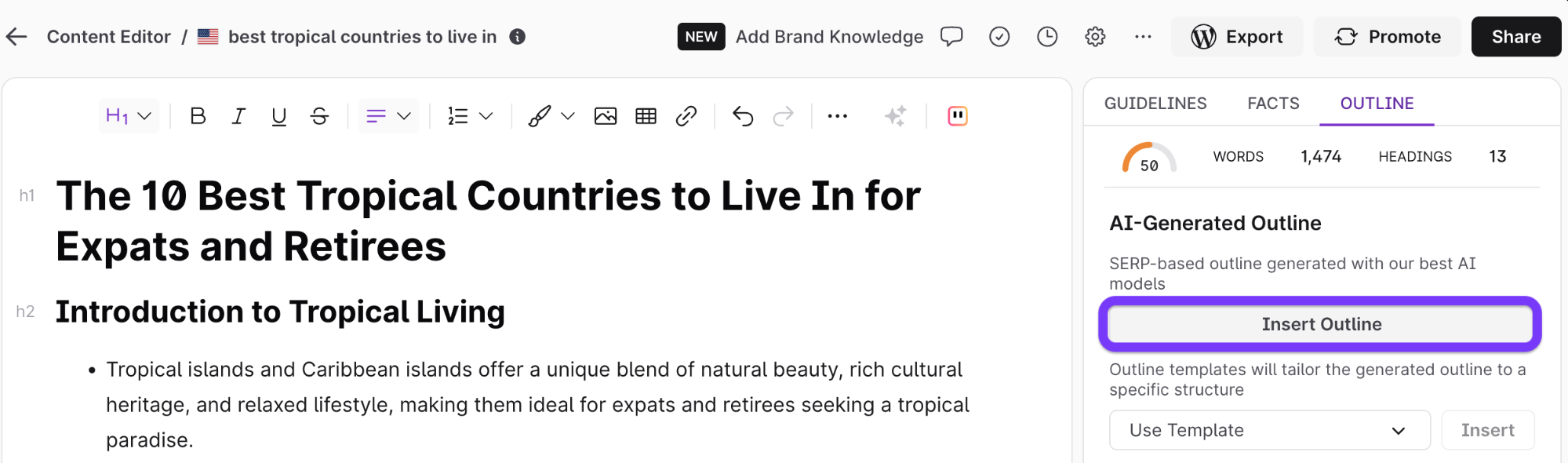

Surfer makes this painless by pulling all headings and subheadings from competing content so you can see the common structure instantly.

From there, you can drop the missing pieces into your Content Editor draft:

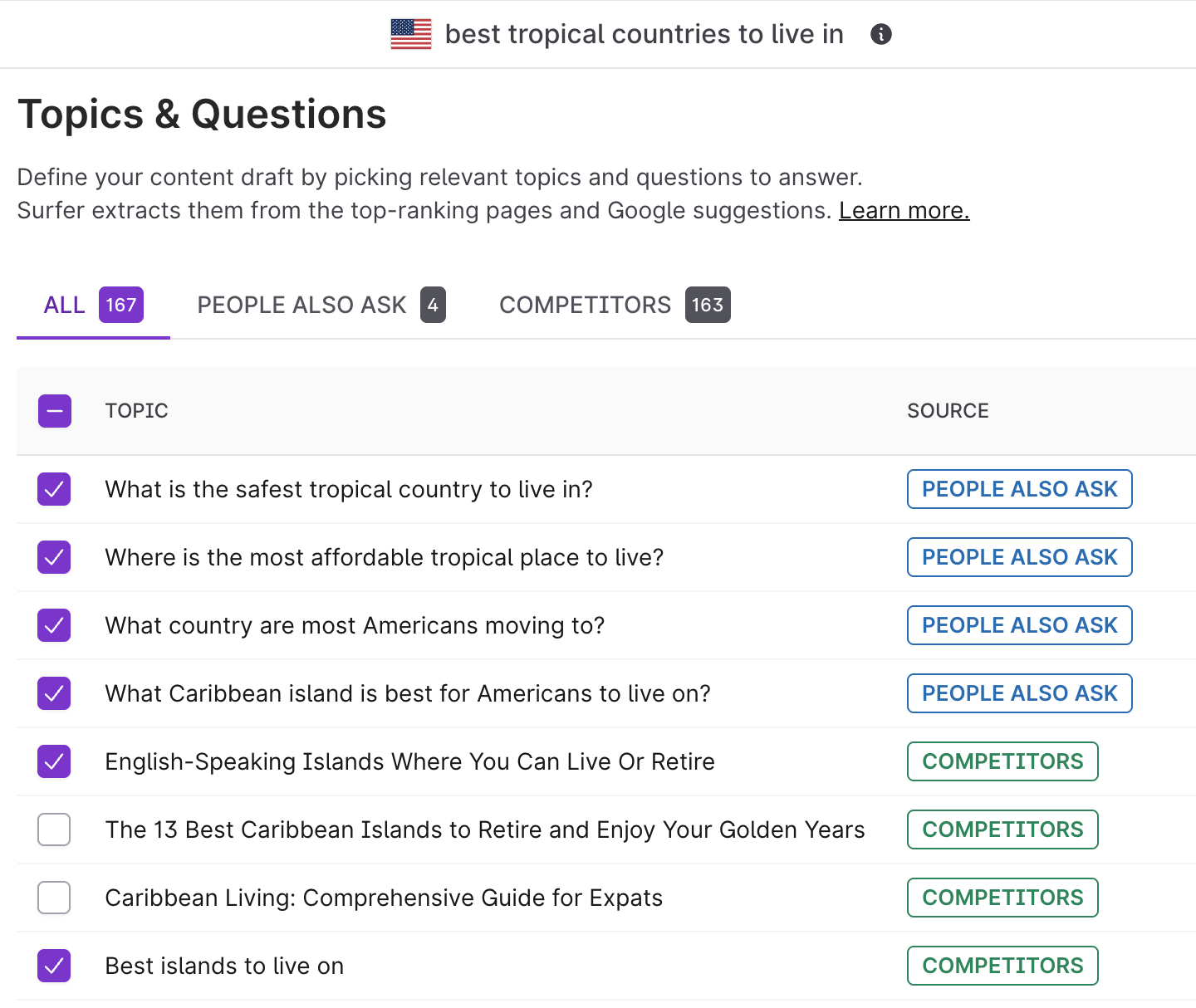

Surfer also surfaces topics and questions that real searchers ask around your keyword.

These make excellent additions for capturing related queries and potential fan-outs.

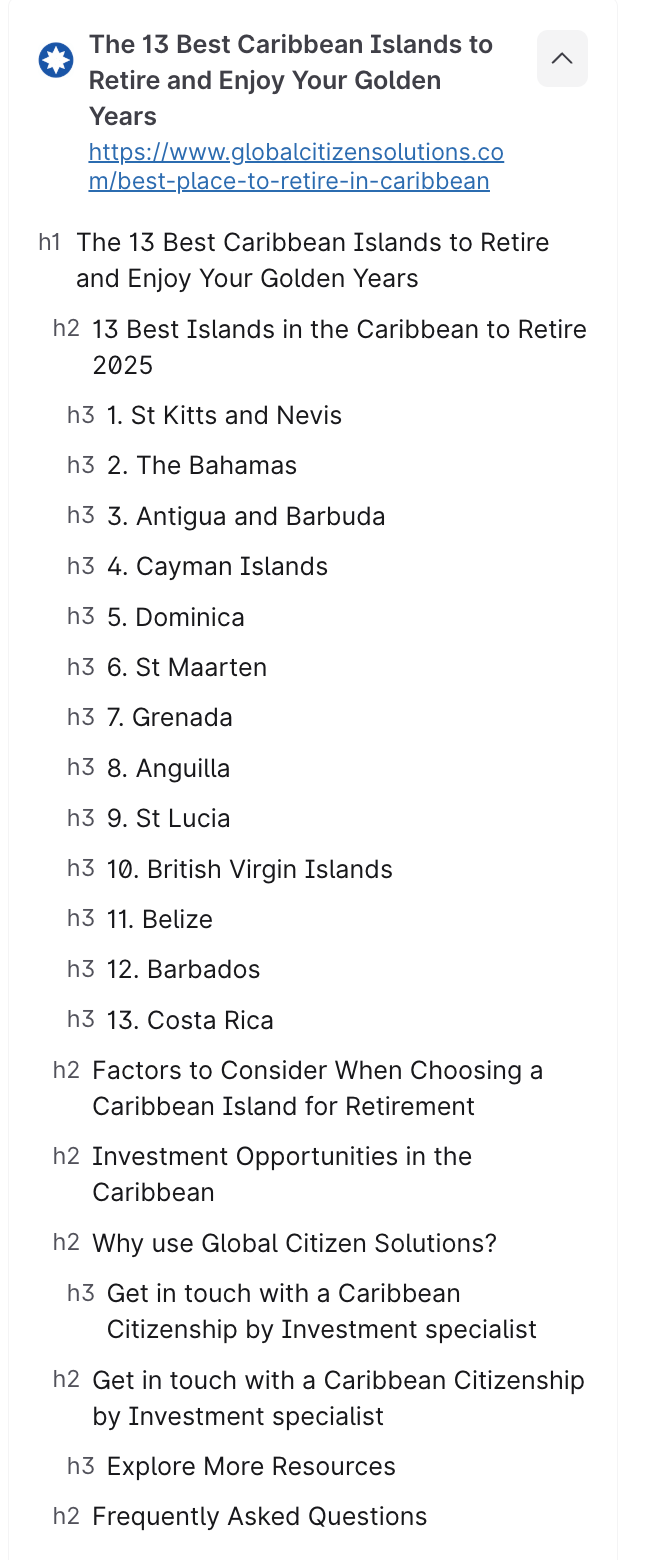

If you want a quicker start, the Insert Outline button will generate a solid first-pass structure:

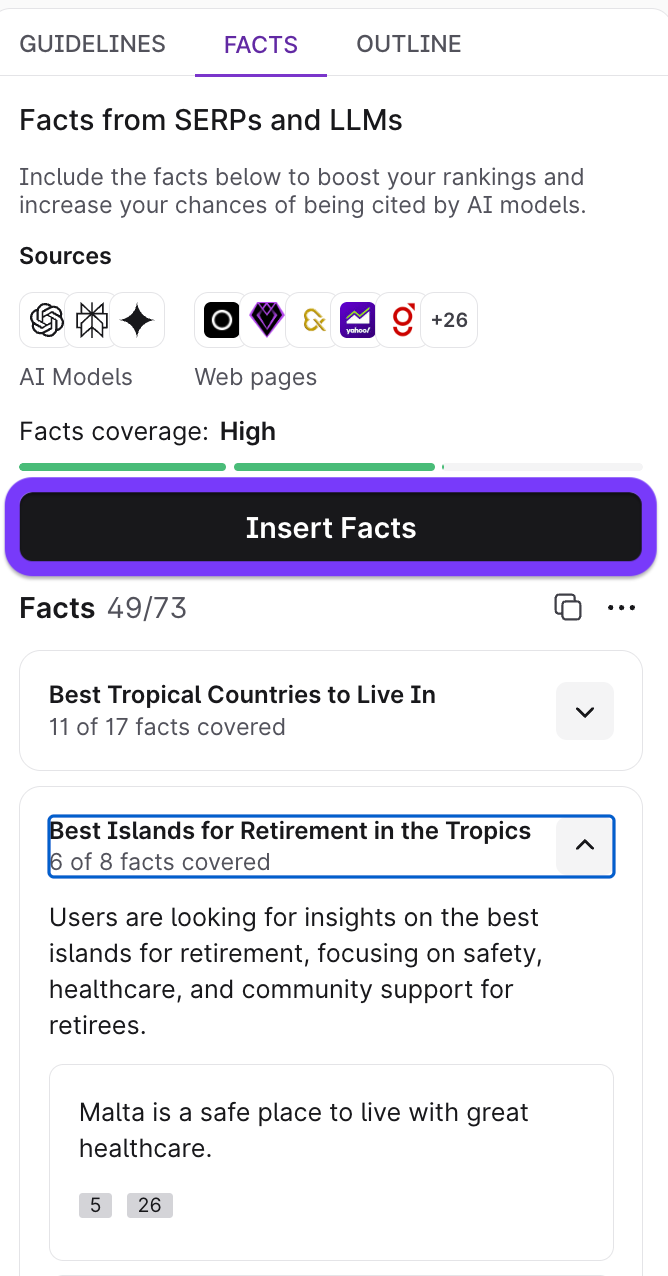

Don’t forget the Facts tab.

Our data shows that including facts can boost your visibility in AI responses by up to 25%, especially for queries that require accuracy. In Surfer, you can insert these manually from the facts we pull or in one click with the “Insert facts” button:

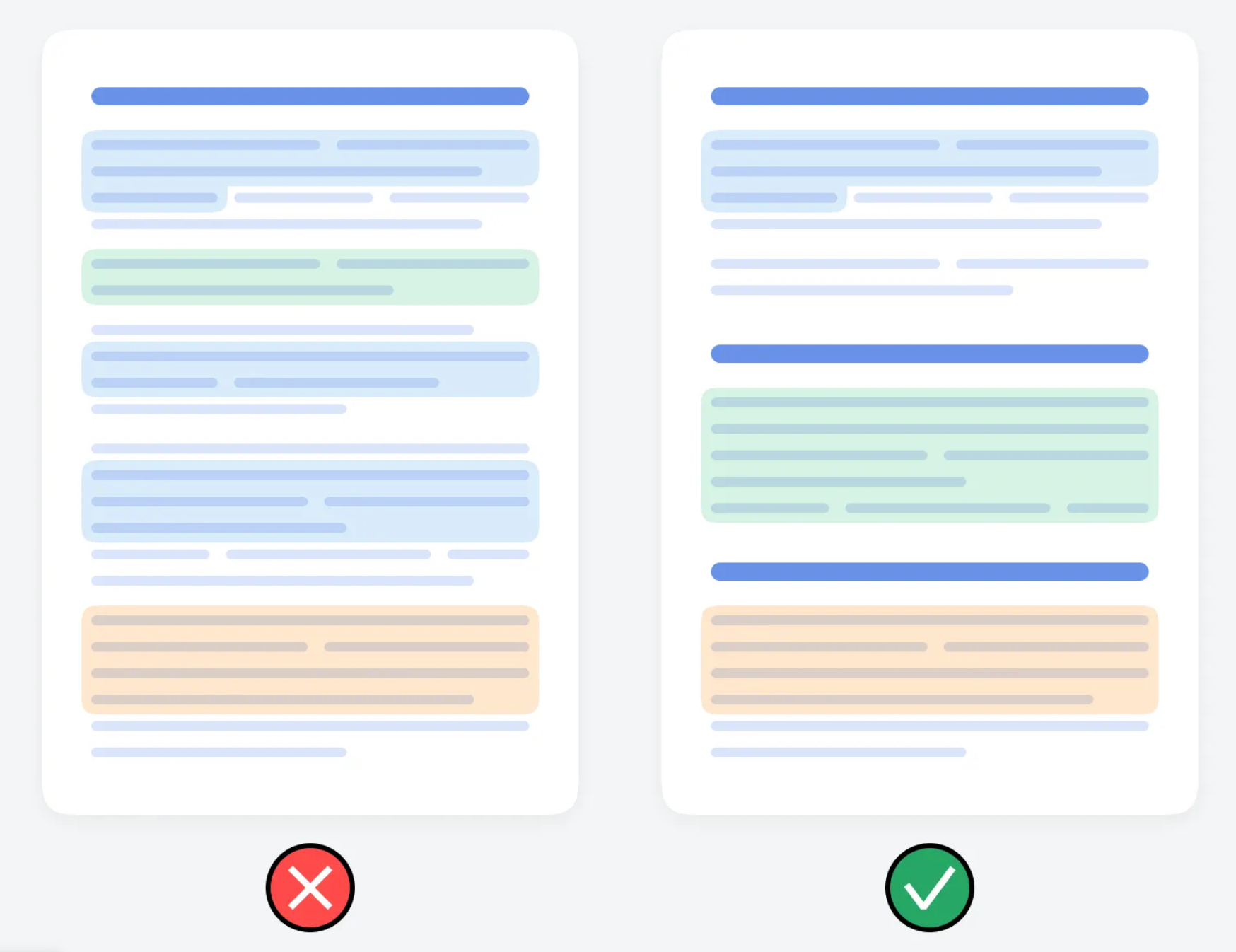

Finally, make sure your page is organized into clean, self-contained sections—not scattered insights woven through paragraphs.

AI search systems split pages into chunks so they can retrieve the passage that matches each sub-query.

This is where “writing for retrieval” matters. AI systems don’t read pages the way humans do—they extract discrete chunks.

Sections that lead with a clear answer, followed by supporting detail or evidence, are far easier for models to reuse during fan-out queries.

When pieces of the same idea are scattered across different places, models struggle to use your content in fan-outs.

If AI can’t easily pull the right section, it probably won’t cite the page at all.
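Here is a rough Python sketch of why self-contained sections help. It splits a page into chunks by heading, then picks the chunk that shares the most words with a sub-query. Real AI systems use far more sophisticated chunking and ranking, so treat the `## ` heading marker, the word-overlap scoring, and the placeholder page text as assumptions for illustration only.

```python
import re


def chunk_by_heading(page: str, marker: str = "## ") -> list[dict[str, str]]:
    """Split a page into chunks, one per heading. Text before the first heading is skipped."""
    chunks: list[dict[str, str]] = []
    for line in page.splitlines():
        if line.startswith(marker):
            chunks.append({"heading": line[len(marker):].strip(), "body": ""})
        elif chunks and line.strip():
            body = chunks[-1]["body"]
            chunks[-1]["body"] = (body + " " + line.strip()).strip()
    return chunks


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_chunk(chunks: list[dict[str, str]], sub_query: str) -> dict[str, str]:
    """Pick the chunk with the largest word overlap with the sub-query."""
    query_words = tokens(sub_query)
    return max(chunks, key=lambda c: len(query_words & tokens(c["heading"] + " " + c["body"])))


# Placeholder page: each section leads with its answer and stands on its own.
PAGE = """## What fruits grow in Costa Rica?
Short answer first, then supporting detail about fruit trees.

## Is the internet reliable in Costa Rica?
Short answer first, then supporting detail about connectivity.
"""

chunks = chunk_by_heading(PAGE)
match = best_chunk(chunks, "fruits you can grow in costa rica")
```

Because each section carries its own topic in the heading and opening line, the fruit sub-query lands on the fruit section without needing anything from the rest of the page.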

3. Get featured on pages AI is already citing

AI citations don’t come from a single query. They’re the result of the entire search process, including all the fan-out queries happening behind the scenes.

That means there’s a shortcut most people overlook: if you can get mentioned on pages AI already trusts, you’re effectively optimizing for multiple fan-outs at once.

Instead of guessing which queries AI might run next, you can look at where it’s already pulling answers from.

The easiest way to do that is with Surfer’s AI Tracker.

Set up a project by entering:

- your brand name (e.g., Nike)

- a topic or keyword related to your brand (e.g., “running shoes”)

- the location you care about most (e.g., United States)

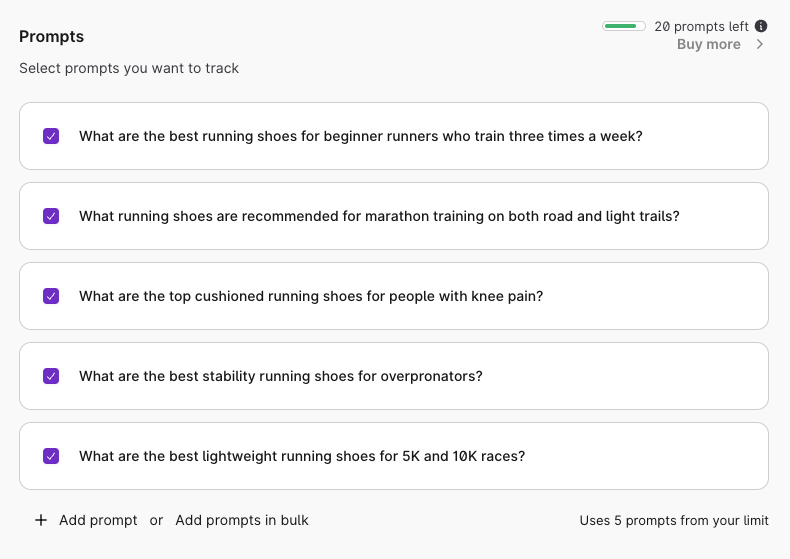

Surfer will suggest prompts to track, but you can also override these and enter them manually:

Either way, it’s worth focusing on queries where AI doesn’t just cite brands, but actually recommends them. Think “best ___ tools” or “top ___ platforms for ___.”

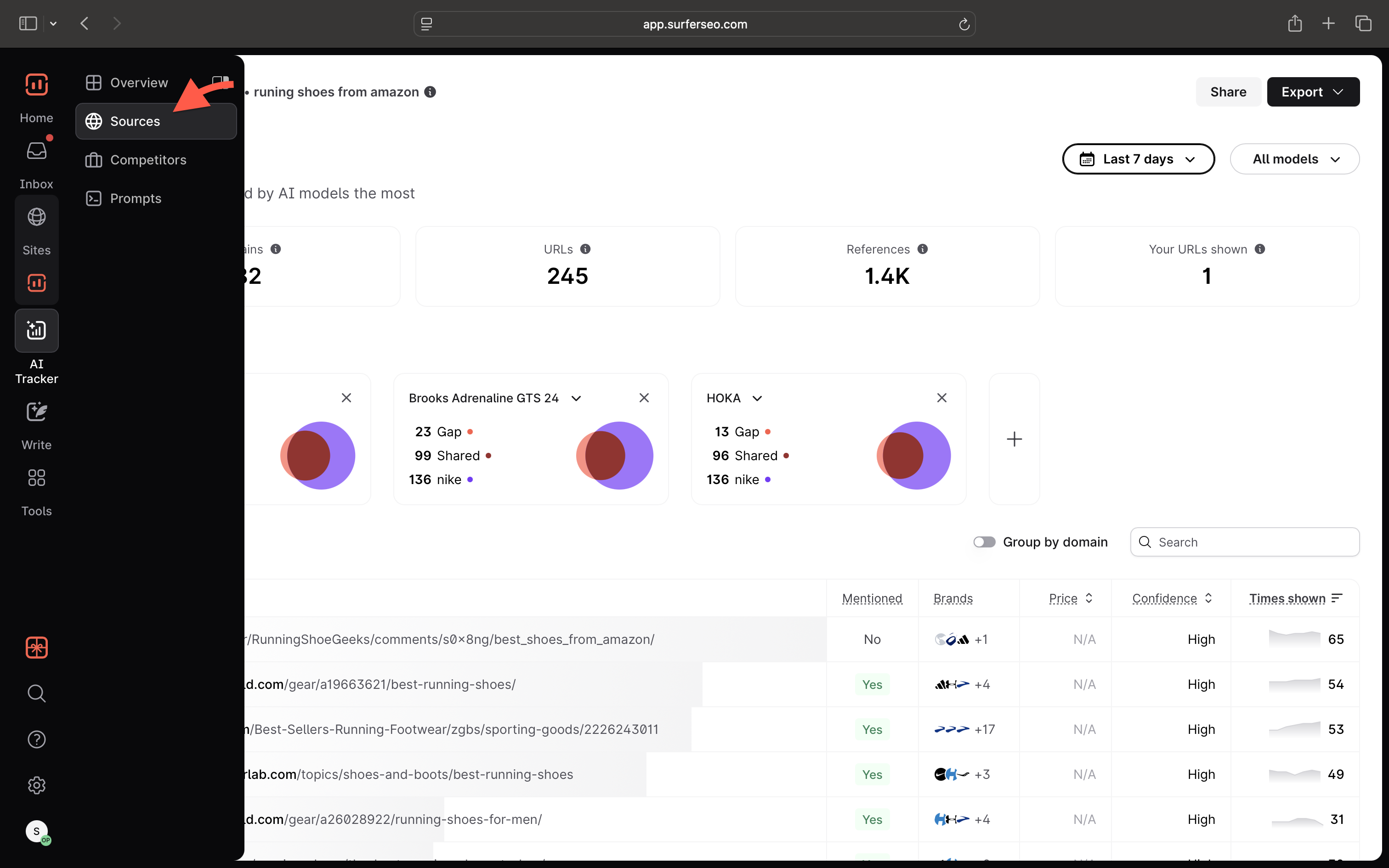

Once the project is running, head to the Sources tab.

Here, you’ll see the pages AI models reference most often for your tracked queries across Google AI Overviews, AI Mode, ChatGPT, and Perplexity.

These pages already have AI’s trust, which makes them valuable targets.

From there, filter by platform or location to narrow the list.
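If you export or log citation data yourself, the same "most referenced pages" view is easy to approximate. The record format below (`platform`, `prompt`, `url`) is a hypothetical structure for this sketch, not Surfer's export format, and the URLs are placeholders.

```python
from collections import Counter
from typing import Optional


def top_sources(
    citations: list[dict[str, str]],
    platform: Optional[str] = None,
    n: int = 3,
) -> list[tuple[str, int]]:
    """Count how often each URL is cited, optionally for one platform only."""
    urls = [c["url"] for c in citations if platform is None or c["platform"] == platform]
    return Counter(urls).most_common(n)


# Hypothetical citation log across tracked prompts.
citations = [
    {"platform": "chatgpt", "prompt": "best running shoes", "url": "x.example/best-shoes"},
    {"platform": "ai_mode", "prompt": "best running shoes", "url": "x.example/best-shoes"},
    {"platform": "perplexity", "prompt": "top trail shoes", "url": "x.example/best-shoes"},
    {"platform": "chatgpt", "prompt": "top trail shoes", "url": "y.example/trail-guide"},
    {"platform": "ai_mode", "prompt": "top trail shoes", "url": "y.example/trail-guide"},
    {"platform": "perplexity", "prompt": "best running shoes", "url": "z.example/reviews"},
]
overall = top_sources(citations)
chatgpt_only = top_sources(citations, platform="chatgpt")
```

Pages that show up across several prompts and platforms, like `x.example/best-shoes` in this sample, are the ones worth putting at the top of your outreach list.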

Now comes the important part: outreach.

Paying for inclusion is tempting, but it’s not always worth it—and reputable sites usually won’t touch it anyway.

A better approach is to look for gaps. What’s missing from their list? What would genuinely improve it?

For example, if a “best keyword research tools” page doesn’t include any keyword clustering tools, that’s an opening.

Instead of pitching immediately, lead with curiosity: “I noticed X wasn’t included; was that intentional?”

If they respond, that’s when you show how your product fits naturally. Not as a hard sell, but as a way to make their content more complete.

Is it easy? No.

But it scales far better than chasing individual fan-out queries, and it works because it aligns with how AI actually chooses sources.

Keep learning about query fan-outs

Query fan-out isn’t a trick or a tactic you can game once and move on from. It’s how AI search works.

AI models break queries apart, explore related angles, and pull from sources that already cover the surrounding context well.

That’s why chasing individual fan-outs doesn’t scale, and why topical coverage, clear structure, and genuinely useful content matter more than ever.

If you want to dig deeper into the data behind this, our research on how query fan-outs work in AI search goes into what we’ve observed across thousands of URLs and AI responses.

It’s a useful next step if you want to understand what’s actually happening under the hood.