AI-driven search is evolving so fast that, honestly, it’s hard to keep up even if you work with it every day. AI is changing how we interact with search engines and fundamentally reshaping business strategies. I feel like new revelations pop up every week, constantly changing what we know and how we think about optimizing for AI.

There's a ton of optimization advice out there, but often it doesn't come with the data to back it up.

At Surfer, data is at the center of everything. When the “AI (r)evolution” began, we naturally went looking for data and kicked off our own research.

In this post, I’ll share some of our findings on one of the central aspects of AI transformation: query fan-outs.

The data presented here comes from 1,600 runs across a diverse group of keywords and prompts. To clarify the terminology, a “run” is a single query for a keyword or prompt.

What are fan-outs?

I’ll explain the concept before diving into the data. If you feel like it’s not for you, feel free to skip ahead to the next part.

Let’s start with the absolute basics. What are fan-outs?

In simple words, fan-outs are related keywords generated by AI in response to your query. Tools like Google's AI Overviews, Google's AI Mode, or OpenAI’s ChatGPT come up with "ideas" related to the keywords (or prompts) to broaden the base for the provided answer.

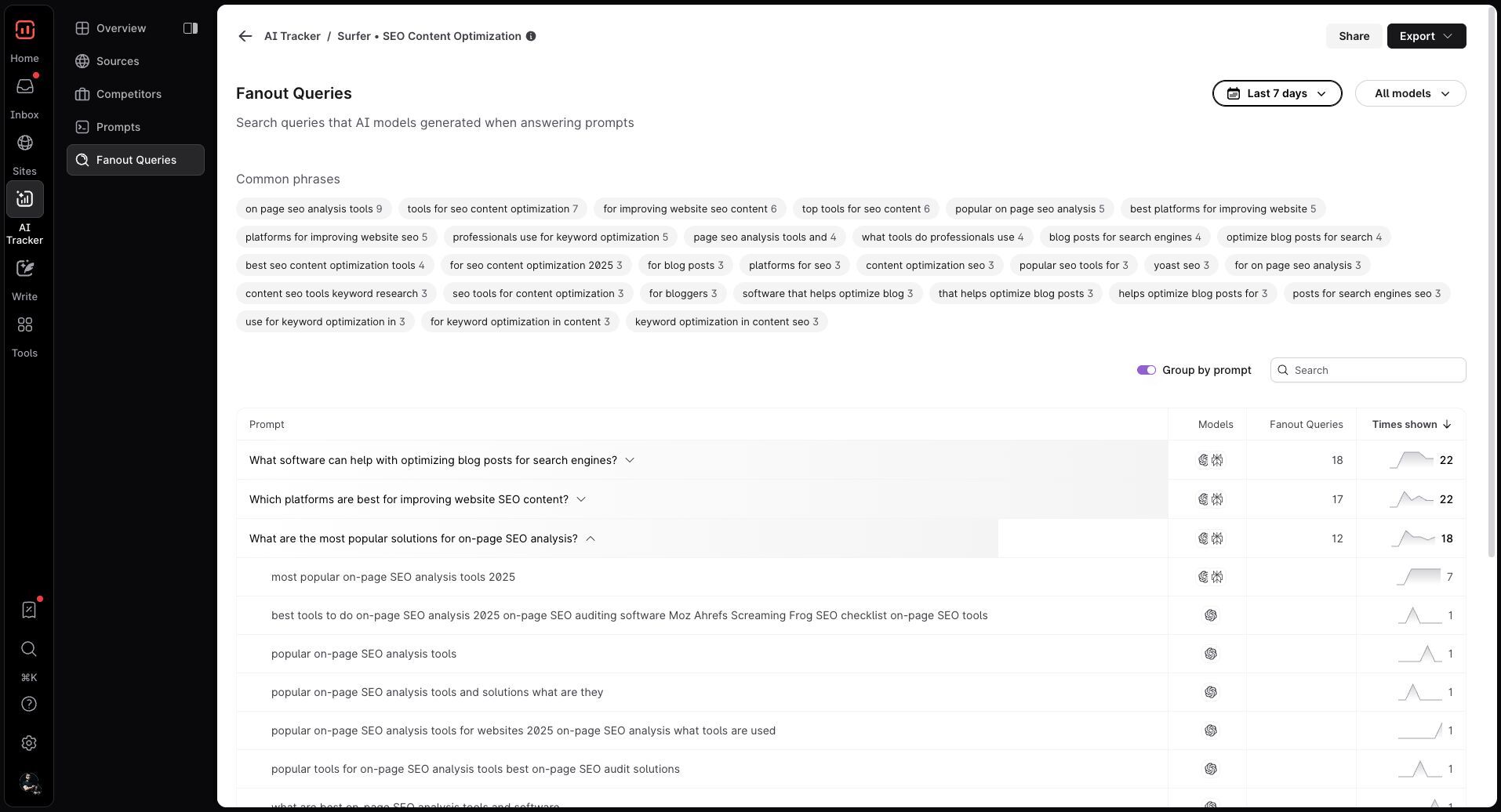

Here's a list of query fan-outs for Surfer.

Why are fan-outs important?

Fan-outs got attention when Google introduced AI Mode. But if you used Gemini with grounding (which requires the LLM to use web search when providing an answer) or dug deep into AI Overviews, you could spot them there as well.

Let’s take a look at AI Overviews to explain why fan-outs matter.

When you type in a query, Gemini, which powers AI Overviews (AIO), kicks off a fan-out and generates a few additional keywords related to your original query.

Search results for these additional keywords influence the response. It’s highly possible that some facts and sources in the AI Overview are not from the SERP of your original query (the one you typed into the search bar).

They come from another SERP: the SERP of a keyword generated by AI.

If you want to increase your chances of getting into sources or getting citations, you should rank for these additional keywords as well.

If you want to learn how query fan-out works, here is a short video from Google.

Gemini as proxy for AI Overviews and AI Mode

As I mentioned before, Google uses Gemini to power its AI products, including AI Overviews and AI Mode.

When we use grounding, Gemini provides us with fan-out queries that are used to formulate (or confirm) the response.

Yes, AI Overviews (AIO) and AI Mode (AIM) almost certainly have additional instructions in their system prompts, but vanilla Gemini is the best way to look inside Gemini’s artificial “brain” and learn how fan-outs really work.

ChatGPT has fan-outs too

Google’s AI Mode and AI Overviews are not the only AI Search tools that use query fan-out.

ChatGPT uses the same mechanism to provide answers.

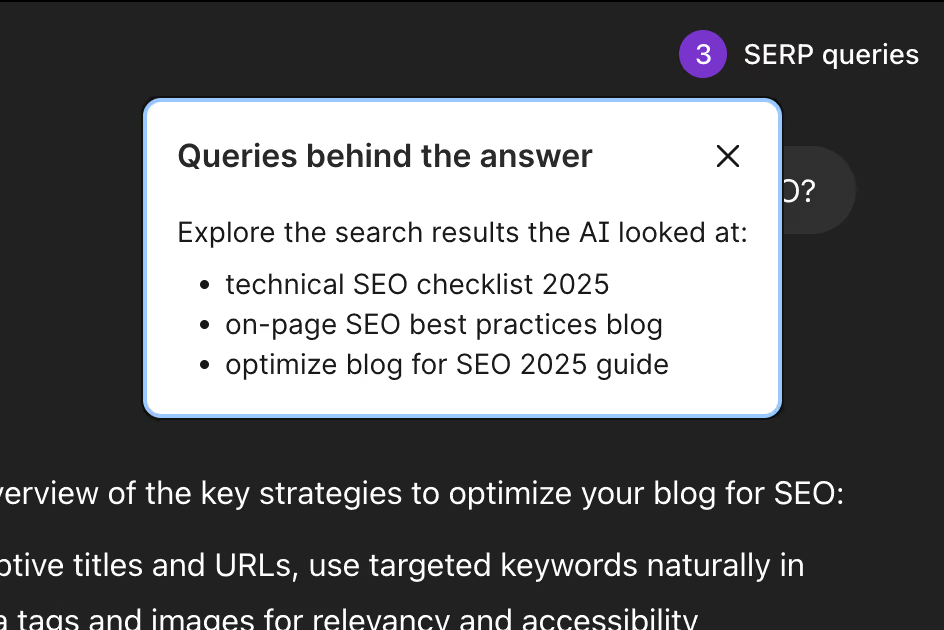

In the screenshot, you can see the queries that ChatGPT (model 4o-mini) used to answer the question “How can I optimize my blog for SEO?”

These queries were captured by Surfer Keyword Extensions, which you can download here.

If the model supports fan-out access (not all of OpenAI's models available in ChatGPT do), the extension will show you the queries. If you don’t have the extension, don’t be shy and grab it. It’s free!

Fan-outs are unstable

LLMs are non-deterministic.

You may ask, “What does that mean?”

A simple explanation is that even if you give the model the exact same prompt twice, you can get different answers.

This means that fan-outs may vary from one run to another. Before we start suggesting content ideas, we need to understand the level of volatility.

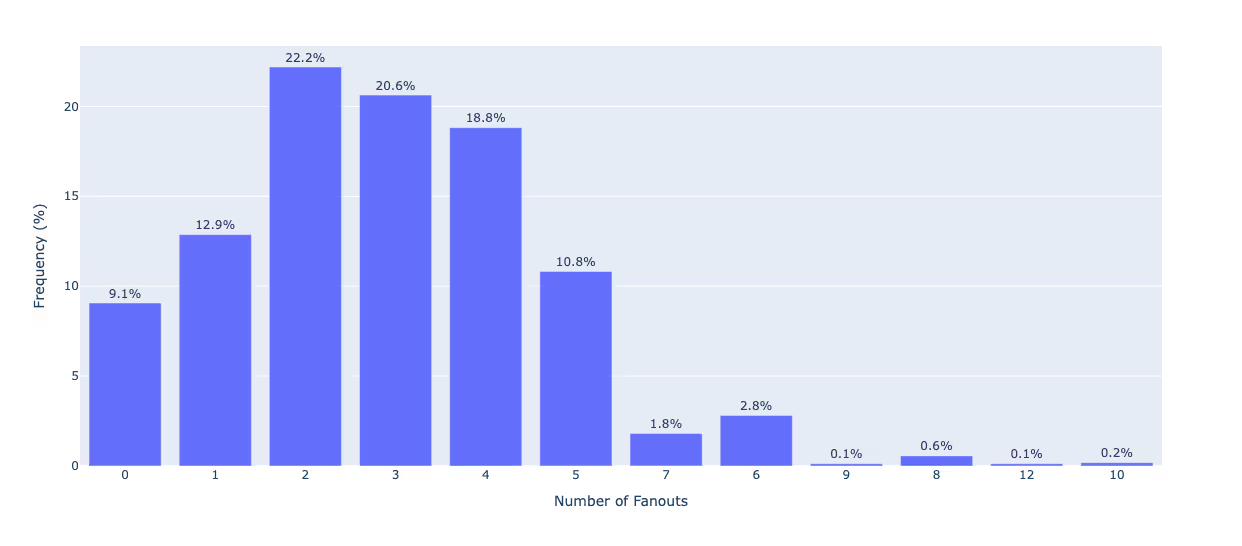

Finding 1: Most queries trigger up to 5 fan-outs

Most of the time, when you search for something, Gemini expands that search into no more than about five related fan-outs.

Usually it's 2-4. But the interesting thing is that fan-outs are not always triggered.

What does that mean?

Sometimes the SERP of an original query or internal knowledge is enough to provide an answer.

This shows that the LLM tries to keep the results quite focused, providing you with a comprehensive answer without overwhelming you with too much information.

It's like the LLM explores just enough to give you well-rounded but still easy-to-digest results.

And it makes total sense.

Given the current attention span of the average user, displaying a wall of irrelevant content will likely result in the user abandoning the search. And Google wants us to stay.

It wants to hook us with some additional context so that we dive deeper into the topic.

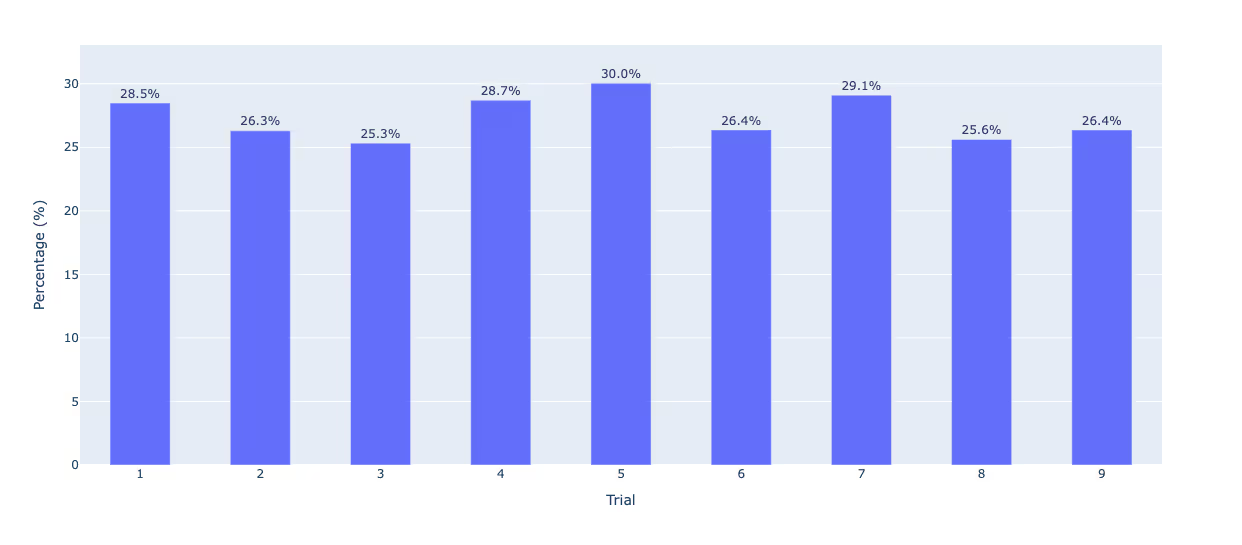

Finding 2: Lack of consistency in generated fan-outs

We measured consistency on two levels.

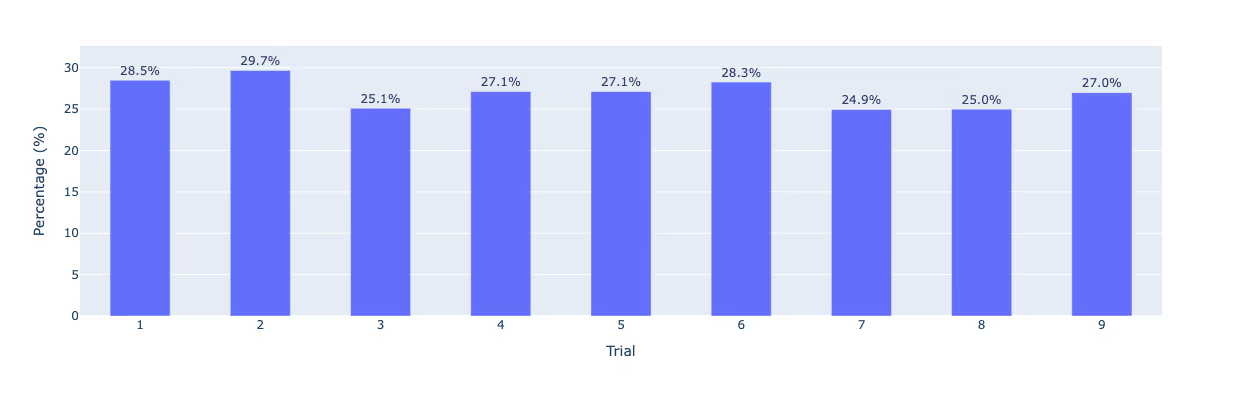

First, we compared the fan-outs from each run to those from the first run. Then, we compared fan-outs from each run to the previous run.

It turns out that in both cases only about 27% of fan-out keywords actually stay consistent across different runs.

We named them core keywords.
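To make the two comparisons concrete, here is a minimal Python sketch. It is an illustration rather than the exact method behind the numbers above: the normalization step, the overlap formula (the share of a run's fan-outs that also appear in the reference run), and the sample keywords are all assumptions.

```python
def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so trivial variants match."""
    return " ".join(query.lower().split())


def overlap_share(current: list[str], reference: list[str]) -> float:
    """Share of fan-outs in `current` that also appear in `reference`."""
    cur = {normalize(q) for q in current}
    ref = {normalize(q) for q in reference}
    if not cur:
        return 0.0
    return len(cur & ref) / len(cur)


def consistency(runs: list[list[str]]) -> tuple[float, float]:
    """Average overlap of each later run vs. the first run and vs. the previous run."""
    vs_first = [overlap_share(run, runs[0]) for run in runs[1:]]
    vs_prev = [overlap_share(run, prev) for prev, run in zip(runs, runs[1:])]
    if not vs_first:
        return 0.0, 0.0
    return sum(vs_first) / len(vs_first), sum(vs_prev) / len(vs_prev)


# Hypothetical fan-outs from three runs of the same prompt.
example_runs = [
    ["Instagram hashtag best practices", "How many hashtags on Instagram post"],
    ["how many hashtags on instagram post", "Instagram reel hashtags"],
    ["Instagram story hashtags", "Instagram reel hashtags"],
]

avg_vs_first, avg_vs_previous = consistency(example_runs)
```

With real data, `example_runs` would hold one list of fan-outs per run of the same keyword or prompt.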

That highlights the volatility of query fan-outs. But there's even more instability.

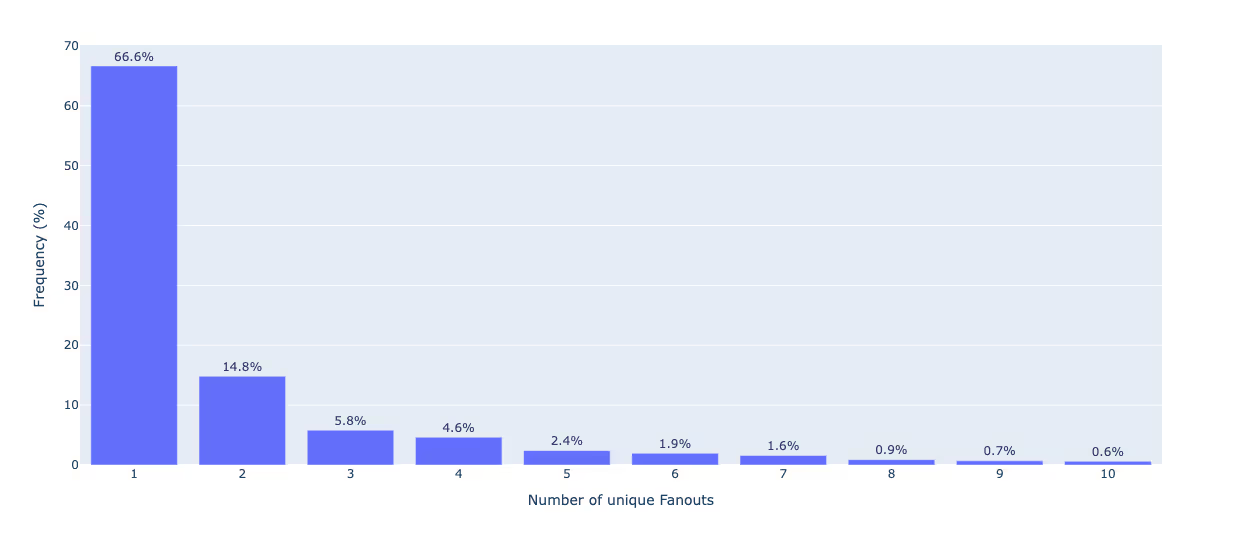

Looking at the whole generated list of keywords, we found out that 66% of fan-out keywords appeared only once.

Only a tiny 0.6% of keywords consistently appeared across all runs!
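If you want to run the same kind of count on your own fan-out logs, a sketch like the one below would do it. The input format (one list of fan-outs per run), the lowercase normalization, and the sample keywords are assumptions for illustration, not a description of our tooling.

```python
from collections import Counter


def appearance_stats(runs: list[list[str]]) -> tuple[float, float]:
    """Return (share of keywords seen in exactly one run,
    share of keywords seen in every run)."""
    counts: Counter[str] = Counter()
    for run in runs:
        # Use a set so a keyword repeated within one run is counted once.
        counts.update({" ".join(q.lower().split()) for q in run})
    total = len(counts)
    if total == 0:
        return 0.0, 0.0
    seen_once = sum(1 for c in counts.values() if c == 1)
    seen_always = sum(1 for c in counts.values() if c == len(runs))
    return seen_once / total, seen_always / total


# Hypothetical fan-outs from three runs of the same prompt.
sample_runs = [
    ["instagram hashtags", "instagram hashtag limit"],
    ["instagram hashtags", "instagram reel hashtags"],
    ["instagram hashtags", "instagram story hashtags"],
]

once_share, always_share = appearance_stats(sample_runs)
```

In this toy sample, three of the four distinct keywords appear in a single run and one appears in all three.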

This perfectly illustrates the non-deterministic nature of LLMs.

Fan-outs and SERPs

Since fan-outs are basically search terms (AI-generated, but still search terms), we can analyze them like any other query.

From the initial data, we learned that fan-outs are “wobbling”, so we decided that chasing each fan-out separately may not be the best idea.

Instead, we looked at broader themes where the unstable nature of AI may have a lesser impact.

Finding 3: Semantics matter

Semantics has been important in SEO for a while now. It’s even more important in AI search optimization.

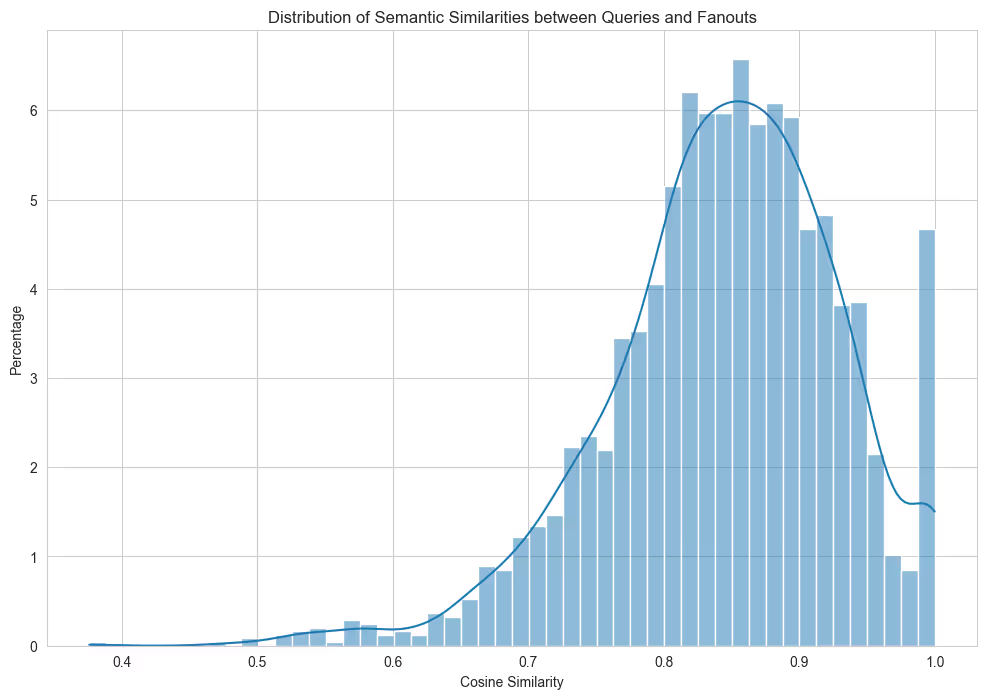

We used cosine similarity to check how close or far from the original input (or prompt) fan-outs are.

If you have never heard about cosine similarity, here's a tl;dr explanation: Cosine Similarity is a measure of how “aligned” two vectors are.

If they are perfectly aligned (1.0), the two phrases are the same. If the score is somewhere around 0.1, they are completely different. To compare alignment, we first need to transform the words into numbers (vectors) with a special algorithm.
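For the curious, the calculation itself is short. Below is a minimal sketch in plain Python; the tiny two-dimensional vectors are made up for illustration, whereas real phrase vectors come from an embedding model and have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Convention chosen here: a zero vector is similar to nothing.
    return dot / (norm_a * norm_b)


# Toy vectors (assumed values, not real embeddings).
same_direction = cosine_similarity([1.0, 0.0], [2.0, 0.0])  # identical direction
unrelated = cosine_similarity([1.0, 0.0], [0.0, 1.0])       # perpendicular vectors
close = cosine_similarity([3.0, 4.0], [4.0, 3.0])           # nearly aligned
```

Note that the length of the vectors does not matter, only their direction, which is why `[1.0, 0.0]` and `[2.0, 0.0]` count as a perfect match.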

From the research, we found out that fan-outs are semantically similar to the original queries.

Most fan-out queries have a cosine similarity between 0.75 and 0.95. This means that keywords generated by Gemini are somewhere between strong similarity (0.75) and almost identical (0.95).

There are also quite a lot of fan-outs with similarity 1.0, meaning that the generated phrase is the same as the input. This means that when generating search terms, AI is usually not looking for totally different angles, but rather expanding on user input.

Here is an example output for the question "how to use hashtags on Instagram":

- "How many hashtags on Instagram post"

- "How to use hashtags on Instagram"

- "Instagram hashtag best practices 2025"

- "Instagram story hashtags"

- "Instagram reel hashtags"

As you can see, the generated fan-outs look like traditional search engine queries.

Obviously, the more complicated the wording, the lower the similarity; however, this provides clues about how Gemini works and what it looks for in these extra queries.

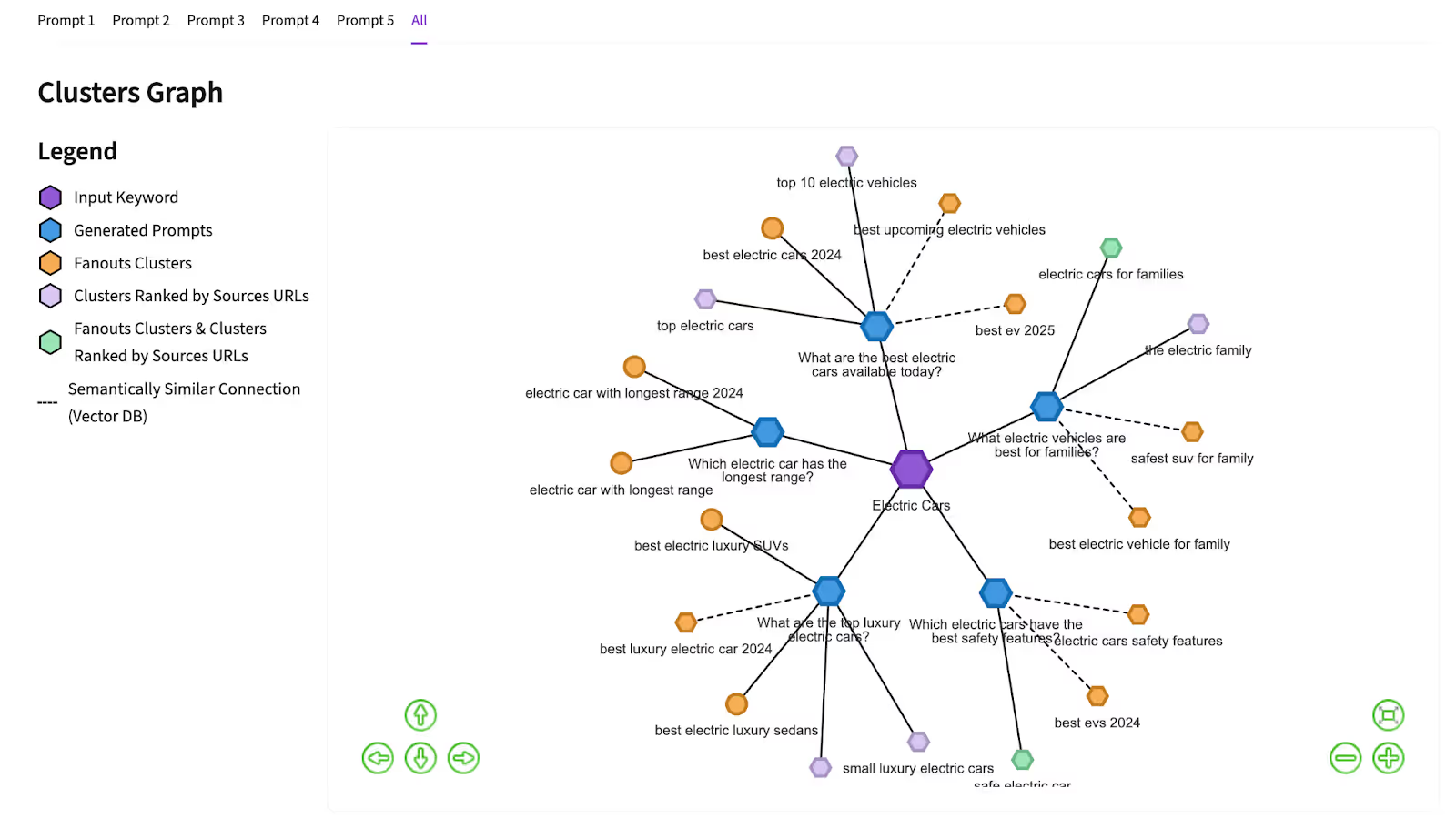

All this gave us the idea that we might need cluster-level optimization to optimize for query fan-outs.

Finding 4: Clustering mitigates instability

84% of fan-outs can be considered “query neighbors” of the original input (or prompt).

What is a query neighbor?

Two keywords are query neighbors when their search results share common URLs.

In our case, we looked at the top 20 results. In our data, we found that 84% of fan-out keywords share at least 1 URL with the original query. 56% share 5 URLs!
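Here is a small sketch of that check in Python. The function names, the `min_shared` threshold parameter, and the `example.com` URLs are assumptions for illustration; in practice, each list would hold the ranked URLs from a real SERP.

```python
def shared_urls(serp_a: list[str], serp_b: list[str], top_n: int = 20) -> set[str]:
    """URLs that appear in the top `top_n` results of both SERPs."""
    return set(serp_a[:top_n]) & set(serp_b[:top_n])


def is_query_neighbor(
    serp_a: list[str], serp_b: list[str], min_shared: int = 1, top_n: int = 20
) -> bool:
    """True when the two SERPs share at least `min_shared` URLs."""
    return len(shared_urls(serp_a, serp_b, top_n)) >= min_shared


# Hypothetical ranked results for the original query and one fan-out.
original_serp = [
    "https://example.com/hashtag-guide",
    "https://example.com/instagram-tips",
    "https://example.com/social-media-basics",
]
fan_out_serp = [
    "https://example.com/hashtag-limits",
    "https://example.com/hashtag-guide",
    "https://example.com/instagram-tips",
]

common = shared_urls(original_serp, fan_out_serp)
neighbor = is_query_neighbor(original_serp, fan_out_serp)
strict_neighbor = is_query_neighbor(original_serp, fan_out_serp, min_shared=5)
```

Raising `min_shared` to 5 gives the stricter version of the check: these two sample SERPs are neighbors at the 1-URL level but not at the 5-URL level.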

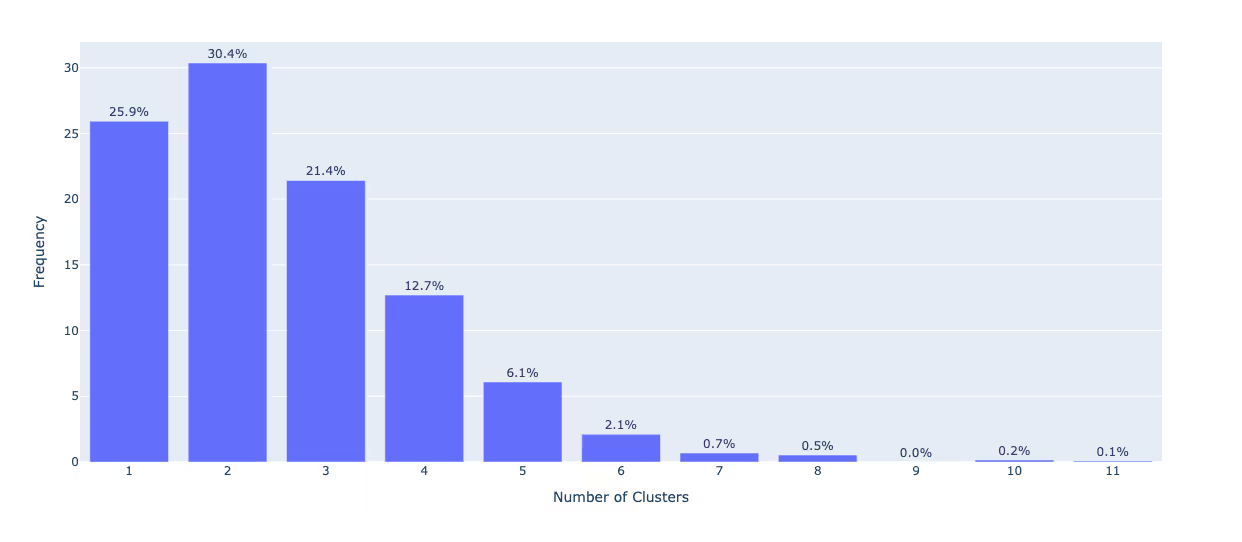

So again, clustering! About 90% of fan-outs can be split into up to 4 clusters.

This is huge considering that 66% of fan-out queries appeared only once. If you want to target a specific query, 4 clusters around it should increase your chances of success.
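One simple way to form clusters like these is to group keywords whose SERPs overlap. The sketch below does that with single-link grouping (a small union-find). The grouping rule, the thresholds, and the sample keywords and URLs are all assumptions, and the clustering behind the numbers above may work differently.

```python
def cluster_by_shared_urls(
    serps: dict[str, list[str]], min_shared: int = 1, top_n: int = 20
) -> list[set[str]]:
    """Group keywords so that any two keywords sharing at least `min_shared`
    top-`top_n` URLs end up in the same cluster (directly or via a chain)."""
    keywords = list(serps)
    parent = {kw: kw for kw in keywords}

    def find(kw: str) -> str:
        # Follow parent links to the cluster root, shortening the path as we go.
        while parent[kw] != kw:
            parent[kw] = parent[parent[kw]]
            kw = parent[kw]
        return kw

    for i, a in enumerate(keywords):
        for b in keywords[i + 1:]:
            shared = set(serps[a][:top_n]) & set(serps[b][:top_n])
            if len(shared) >= min_shared:
                parent[find(a)] = find(b)  # Merge the two clusters.

    clusters: dict[str, set[str]] = {}
    for kw in keywords:
        clusters.setdefault(find(kw), set()).add(kw)
    return list(clusters.values())


# Hypothetical SERPs: the first three keywords are chained by shared URLs.
sample_serps = {
    "how to use hashtags on instagram": ["https://example.com/a", "https://example.com/b"],
    "instagram hashtag best practices": ["https://example.com/b", "https://example.com/c"],
    "how many hashtags on instagram post": ["https://example.com/c", "https://example.com/d"],
    "instagram story ideas": ["https://example.com/z"],
}

clusters = cluster_by_shared_urls(sample_serps)
```

Single-link grouping is deliberately forgiving: a keyword joins a cluster if it overlaps with any member, which suits fan-outs that drift a little from run to run. A higher `min_shared` gives tighter clusters.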

This is why we are experimenting with ways to research query fan-outs.

We want to give suggestions on what to focus on and remove the guesswork. This was the main reason why we started this research in the first place.

How to adapt to fan-out queries?

Here are 3 things you can do to increase your chances of appearing in fan-out queries.

1. Keep an eye on clusters

If you are not already doing so, start targeting wider keyword groups and big-picture themes, a.k.a. clusters.

This builds a stronger content base that’s less likely to get messed up by individual keyword changes and the instability of fan-outs.

Pay attention to your data and re-optimize content (e.g., with Content Audit in Sites) to adapt to changes in the SERP. By focusing on bigger ideas, your content can attract more AI queries and boost visibility.

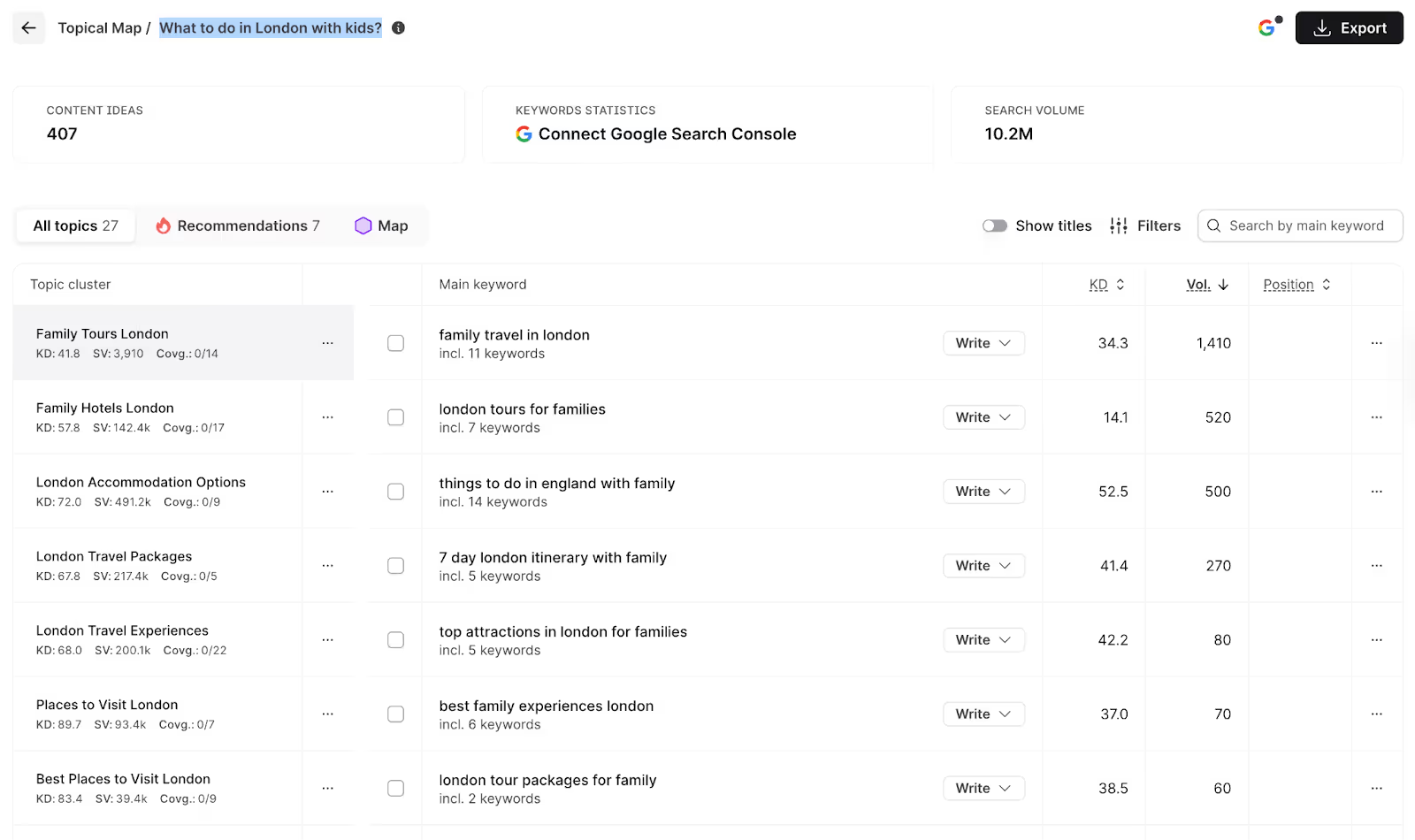

Play around with tools like Surfer’s Topic Research to explore related queries.

Start with a head term (e.g., London, London with kids) or try using the whole prompt as a query (What to do in London with kids?)

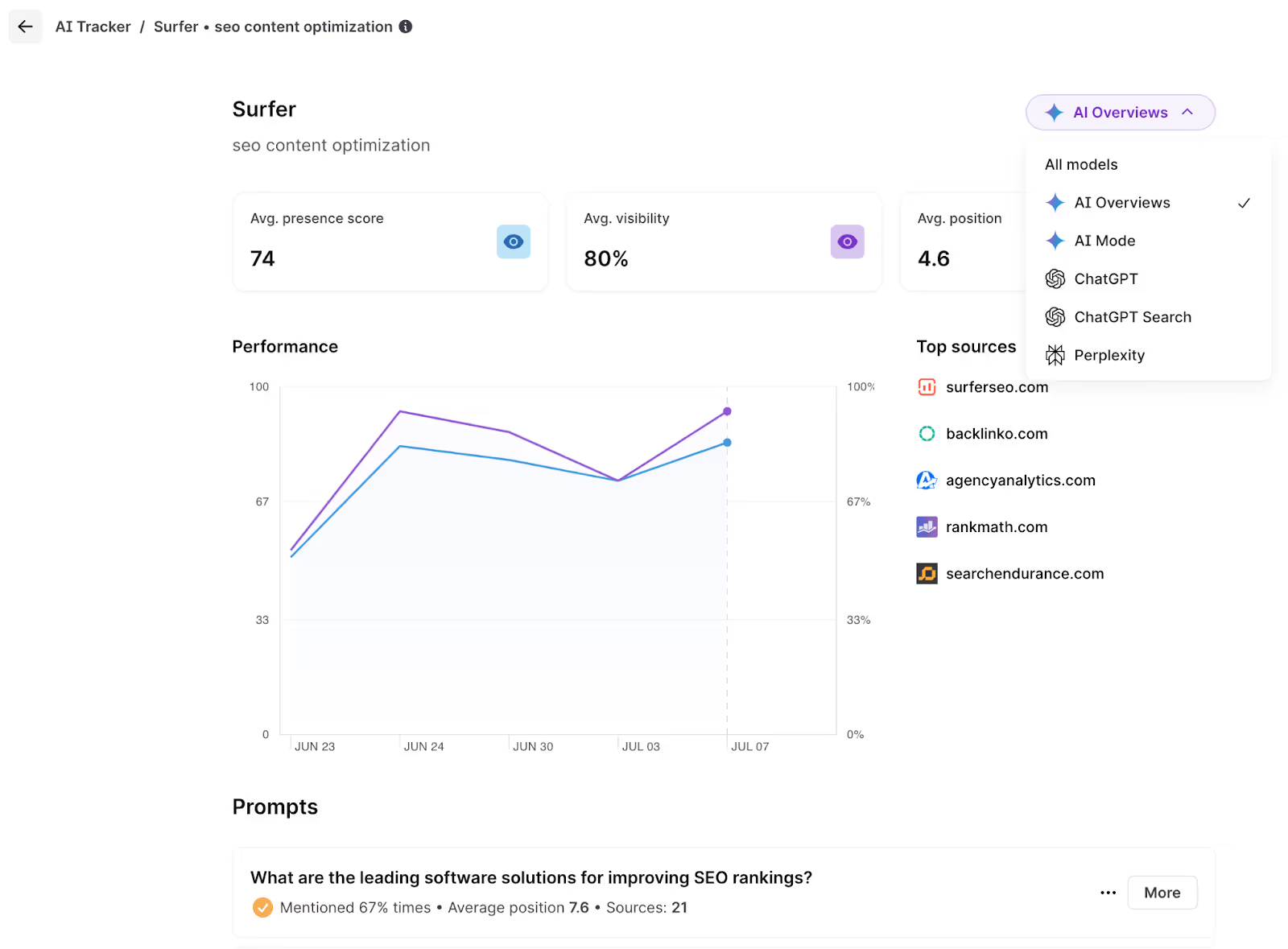

2. Monitor and track sources in AI Search

Pay attention to top sources, especially frequently appearing URLs.

Analyzing the type of content that appears as a source will provide insights into the queries the model considers.

Cross-check this with SERP to uncover where this content is ranking high.

Note: We are working hard at Surfer to make this easier, and soon you should see the first results of our work inside the AI Tracker.

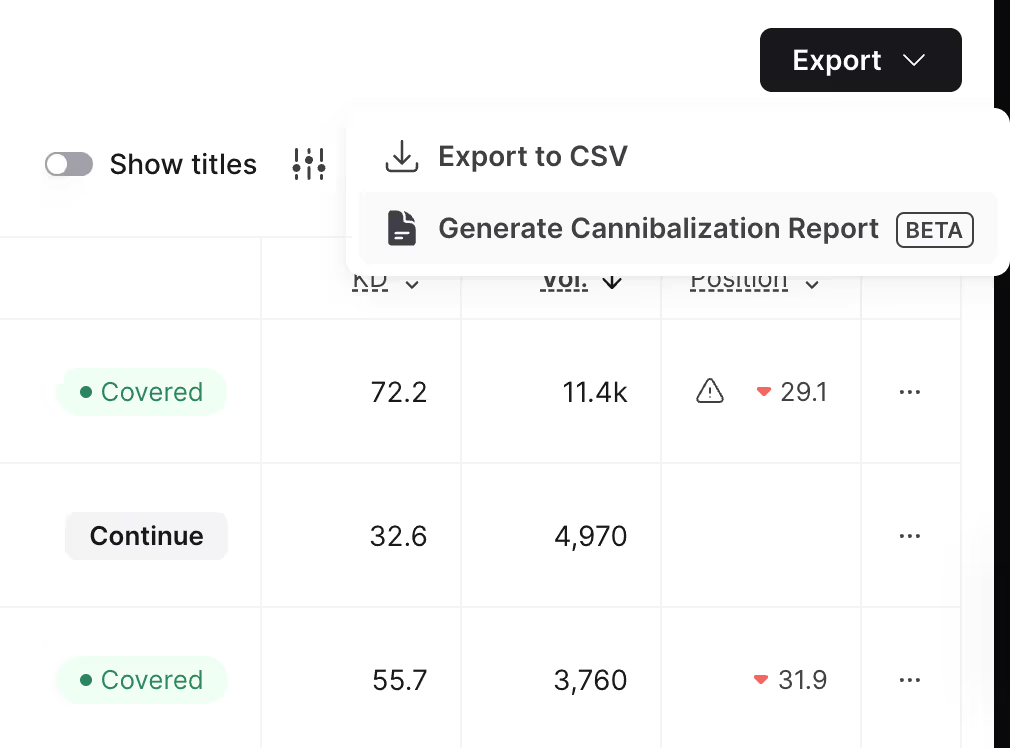

3. Avoid content "eating itself"

While chasing fan-outs, don’t forget about “old-school” SEO.

It’s still super relevant for AI-driven search!

As you create more content, make sure your pages aren’t competing with each other for the same keywords.

This situation is known as cannibalization.

It confuses search engines and waters down your authority. Tools like Topical Map in Surfer’s Site will provide you with insight into cannibalization.

Understanding query fan-outs is essential for the visibility era

In the era of GEO/LLMO/AEO (or whatever name sticks for AI search engine optimization), understanding what impacts the results is crucial.

LLMs are nondeterministic. Asking the same question twice will most certainly result in getting two different answers.

Optimizing for fan-outs is one of many pieces of the puzzle that will help you increase the probability of appearing among the sources and citations.
